4. Belle II Python Interface¶

The Belle II Software has an extensive interface to the Python 3 scripting language: all configuration and steering is done via Python, and in principle simple algorithms can also be implemented directly in Python. All main functions are implemented in a module called basf2, and most people will just start their steering file or script with

import basf2

main = basf2.Path()

4.1. Modules and Paths¶

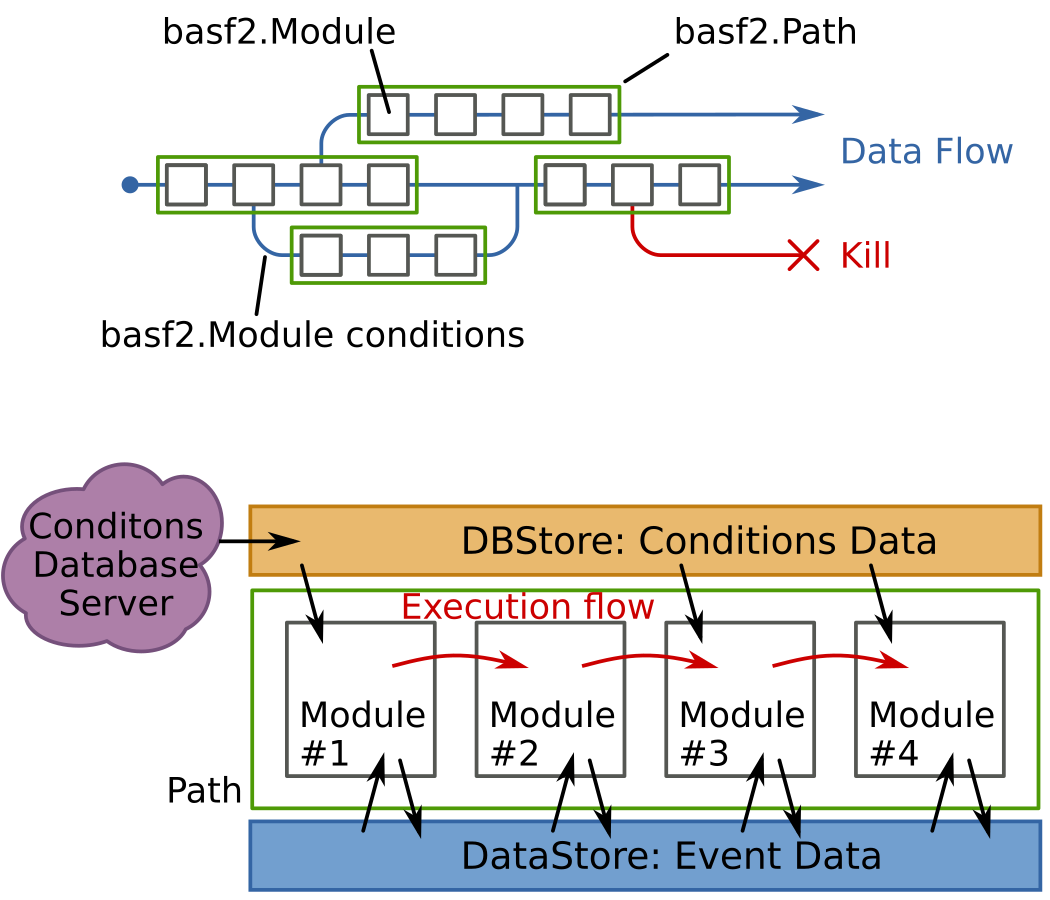

A typical data processing chain consists of a linear arrangement of smaller

processing blocks, called Modules. Their tasks vary

from simple ones like reading data from a file to complex tasks like the full

detector simulation or the tracking.

In basf2 all work is done in modules, which means that even the reading of data

from disk and writing it back is done in modules. They live in a

Path, which corresponds to a container where the

modules are arranged in a strict linear order. The specific selection and

arrangement of modules depend on the user’s current task. When processing data,

the framework executes the modules of a path, starting with the first one and

proceeding with the module next to it. The modules are executed one at a time,

exactly in the order in which they were placed into the path.

Modules can have conditions attached to them to steer the processing flow depending on the outcome of the calculation in each module.

The data to be processed by the modules is stored in a common storage, the DataStore. Each module has read and write access to the storage. In addition there’s also non-event data, the so-called conditions, which will be loaded from a central conditions database and are available in the DBStore.

Fig. 4.1 Schematic view of the processing flow in the Belle II Software¶

Usually each script needs to create a new path using Path(), add

all required modules in the correct order and finally call process() on

the fully configured path.

Warning

Preparing a Path and adding Modules to it does not execute anything; it only prepares the computation, which is performed only when process is called.
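The deferred execution described in this warning can be pictured with a small stand-alone model. The sketch below does not use basf2: `ToyPath` and `toy_process` are hypothetical stand-ins for a path and for process(), written only to show that adding modules merely records them and that they run in insertion order once processing is requested.

```python
# Toy model only: ToyPath and toy_process are hypothetical names, not basf2 API.
class ToyPath:
    """Holds callables in the order in which they were added."""

    def __init__(self):
        self._modules = []

    def add_module(self, func):
        # Adding a module only records it; nothing is executed here.
        self._modules.append(func)
        return func

    def modules(self):
        return list(self._modules)


def toy_process(path, events):
    """Run every module of the path on every event, strictly in order."""
    log = []
    for event in events:
        for module in path.modules():
            log.append(module(event))
    return log


executed = []


def reader(event):
    executed.append("reader")
    return f"read {event}"


def writer(event):
    executed.append("writer")
    return f"wrote {event}"


main = ToyPath()
main.add_module(reader)
main.add_module(writer)
calls_before_processing = list(executed)  # still empty: nothing has run yet
log = toy_process(main, events=[1, 2])
```

In this model `calls_before_processing` stays empty, and the modules only run, one at a time and in the order they were added, inside `toy_process`.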

The following functions are all related to the handling of modules and paths:

- basf2.create_path()[source]¶

Creates a new path and returns it. You can also instantiate basf2.Path directly.

- basf2.register_module(name_or_module, shared_lib_path=None, logLevel=None, debugLevel=None, **kwargs)[source]¶

Register the module ‘name’ and return it (e.g. for adding to a path). This function is intended to instantiate existing modules. To find out which modules exist you can run basf2 -m and to get details about the parameters for each module you can use basf2 -m {modulename}

Parameters can be passed directly to the module as keyword parameters or can be set later using Module.param

>>> module = basf2.register_module('EventInfoSetter', evtNumList=100, logLevel=LogLevel.ERROR)
>>> module.param("evtNumList", 100)

- Parameters

name_or_module – The name of the module type, may also be an existing Module instance for which parameters should be set

shared_lib_path (str) – An optional path to a shared library from which the module should be loaded

logLevel (LogLevel) – indicates the minimum severity of log messages to be shown from this module. See Module.set_log_level

debugLevel (int) – Number indicating the detail of debug messages, the default level is 100. See Module.set_debug_level

kwargs – Additional parameters to be passed to the module.

Note

You can also use Path.add_module() directly, which accepts the same name, logging and module parameter arguments. There is no need to register the module by hand if you will add it to the path in any case.

- basf2.set_module_parameters(path, name=None, type=None, recursive=False, **kwargs)[source]¶

Set the given set of parameters for all modules in a path which have the given name (see Module.set_name)

Usage is similar to register_module() but this function will not create new modules but just adjust parameters for modules already in a Path

>>> set_module_parameters(path, "Geometry", components=["PXD"], logLevel=LogLevel.WARNING)

- Parameters

path (basf2.Path) – The path to search for the modules

name (str) – The name of the module to set parameters for

type (str) – The type of the module to set parameters for.

recursive (bool) – if True also look in paths connected by conditions or Path.for_each()

kwargs – Named parameters to be set for the module, see register_module()

- basf2.print_params(module, print_values=True, shared_lib_path=None)[source]¶

This function prints parameter information

- Parameters

module – Print the parameter information of this module

print_values – Set it to True to print the current values of the parameters

shared_lib_path – The path of the shared library from which the module was loaded

- basf2.print_path(path, defaults=False, description=False, indentation=0, title=True)[source]¶

This function prints the modules in the given path and the module parameters. Parameters that are not set by the user are suppressed by default.

- Parameters

defaults – Set it to True to print also the parameters with default values

description – Set to True to print the descriptions of modules and parameters

indentation – an internal parameter to indent the whole output (needed for outputting sub-paths)

title – show the title string or not (defaults to True)

- basf2.process(path, max_event=0)[source]¶

Start processing events using the modules in the given basf2.Path object.

Can be called multiple times in one steering file (some restrictions apply: modules need to perform proper cleanup & reinitialisation, if Geometry is involved this might be difficult to achieve.)

When used in a Jupyter notebook this function will automatically print a nice progress bar and display the log messages in an advanced way once the processing is complete.

Note

This also means that in a Jupyter Notebook, modifications to class members or global variables will not be visible after processing is complete as the processing is performed in a subprocess.

To restore the old behavior you can use basf2.core.process(), which behaves in Jupyter notebooks exactly as it does in normal Python scripts

from basf2 import core
core.process(path)

- Parameters

path – The path with which the processing starts

max_event – The maximal number of events which will be processed, 0 for no limit

Changed in version release-03-00-00: automatic Jupyter integration

4.1.1. The Module Object¶

Unless you develop your own module in Python you should always instantiate new

modules by calling register_module or Path.add_module.

- class basf2.Module¶

Base class for Modules.

A module is the smallest building block of the framework. A typical event processing chain consists of a Path containing modules. By inheriting from this base class, various types of modules can be created. To use a module, please refer to Path.add_module(). A list of modules is available by running basf2 -m or basf2 -m package, detailed information on parameters is given by e.g. basf2 -m RootInput.

The ‘Module Development’ section in the manual provides detailed information on how to create modules, setting parameters, or using return values/conditions: https://confluence.desy.de/display/BI/Software+Basf2manual#Module_Development

- available_params() → list :¶

Return list of all module parameters as ModuleParamInfo instances

- beginRun() → None :¶

This function is called by the processing just before a new run of data is processed. Modules can override this method to perform actions which are run dependent

- description() → str :¶

Returns the description of this module.

- endRun() → None :¶

This function is called by the processing just after a run of data has been processed. Modules can override this method to perform actions which are run dependent

- event() → None :¶

This function is called by the processing once for each event. Modules should override this method to perform actions during event processing

- get_all_condition_paths() → list :¶

Return a list of all conditional paths set for this module using if_value, if_true or if_false

- get_all_conditions() → list :¶

Return a list of all conditional path expressions set for this module using if_value, if_true or if_false

- has_condition() → bool :¶

Return true if a conditional path has been set for this module using if_value, if_true or if_false

- has_properties((int)properties) → bool :¶

Checks whether the module has the given properties out of the ModulePropFlags set.

>>> if module.has_properties(ModulePropFlags.PARALLELPROCESSINGCERTIFIED):
>>>     ...

- Parameters

properties (int) – bitmask of ModulePropFlags to check for.

- if_false(condition_path, after_condition_path=AfterConditionPath.END)¶

Sets a conditional sub path which will be executed after this module if the return value of the module evaluates to False. This is equivalent to calling if_value with expression="<1"

- if_true(condition_path, after_condition_path=AfterConditionPath.END)¶

Sets a conditional sub path which will be executed after this module if the return value of the module evaluates to True. It is equivalent to calling if_value with expression=">=1"

- if_value(expression, condition_path, after_condition_path=AfterConditionPath.END)¶

Sets a conditional sub path which will be executed after this module if the return value set in the module passes the given expression.

Modules can define a return value (int or bool) using setReturnValue(), which can be used in the steering file to split the Path based on this value, for example

>>> module_with_condition.if_value("<1", another_path)

In case the return value of the module_with_condition for a given event is less than 1, the execution will be diverted into another_path for this event.

You could for example set a special return value if an error occurs, and divert the execution into a path containing RootOutput if it is found; saving only the data producing/produced by the error.

After a conditional path has executed, basf2 will by default stop processing the path for this event. This behaviour can be changed by setting the after_condition_path argument.

- Parameters

expression (str) – Expression to determine if the conditional path should be executed. This should be one of the comparison operators <, >, <=, >=, ==, or != followed by a numerical value for the return value

condition_path (Path) – path to execute in case the expression is fulfilled

after_condition_path (AfterConditionPath) – What to do once the condition_path has been executed.
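To make the expression format concrete, here is a minimal stand-alone sketch of how such a condition string could be checked against a module return value. `condition_fulfilled` is a hypothetical helper, not part of basf2, and it assumes integer values only; it merely mirrors the documented form of a comparison operator followed by a number.

```python
import operator
import re

# Hypothetical helper, not part of basf2: evaluates condition strings of the
# documented form "<operator><number>" against a module return value.
_OPERATORS = {
    "<": operator.lt,
    ">": operator.gt,
    "<=": operator.le,
    ">=": operator.ge,
    "==": operator.eq,
    "!=": operator.ne,
}
# Two-character operators are listed first so that "<=" is not read as "<".
_PATTERN = re.compile(r"^\s*(<=|>=|==|!=|<|>)\s*(-?\d+)\s*$")


def condition_fulfilled(expression, return_value):
    """Return True if return_value passes the comparison in expression."""
    match = _PATTERN.match(expression)
    if match is None:
        raise ValueError(f"cannot parse condition {expression!r}")
    symbol, number = match.groups()
    # bool return values are treated as 0 or 1, as in the if_true/if_false
    # equivalents ">=1" and "<1".
    return _OPERATORS[symbol](int(return_value), int(number))
```

With this helper, `condition_fulfilled("<1", False)` corresponds to the case in which an if_false path would be taken, and `condition_fulfilled(">=1", True)` to the if_true case.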

- initialize() → None :¶

This function is called by the processing just once before any data is processed. Modules can override this method to perform some actions at startup once all parameters are set

- name() → str :¶

Returns the name of the module. Can be changed via set_name(), use type() for identifying a particular module class.

- package() → str :¶

Returns the package this module belongs to.

- param(key, value=None)¶

This method can be used to set module parameters. There are two ways of calling this function:

With two arguments where the first is the name of the parameter and the second is the value.

>>> module.param("parameterName", "parameterValue")

Or with just one parameter which is a dictionary mapping multiple parameter names to their values

>>> module.param({"parameter1": "value1", "parameter2": True})

- return_value((int)value) → None :¶

Set a return value. Can be used by custom modules to set the return value used to determine if conditional paths are executed

- set_abort_level((int)abort_level) → None :¶

Set the log level which will cause processing to be aborted. Usually processing is only aborted for FATAL messages but with this function it’s possible to set this to a lower value

- Parameters

abort_level (LogLevel) – log level which will cause processing to be aborted.

- set_debug_level((int)debug_level) → None :¶

Set the debug level for this module. Debug messages with a higher level will be suppressed. This function has no visible effect if the log level is not set to DEBUG

- Parameters

debug_level (int) – Maximum debug level for messages to be displayed.

- set_log_info((int)arg2, (int)log_info) → None :¶

Set a LogInfo configuration object for this module to determine how log messages should be formatted

- set_log_level((int)log_level) → None :¶

Set the log level for this module. Messages below that level will be suppressed

- Parameters

log_level (LogLevel) – Minimum level for messages to be displayed

- set_name((str)name) → None :¶

Set custom name, e.g. to distinguish multiple modules of the same type.

>>> path.add_module('EventInfoSetter')
>>> ro = path.add_module('RootOutput', branchNames=['EventMetaData'])
>>> ro.set_name('RootOutput_metadata_only')
>>> print(path)
[EventInfoSetter -> RootOutput_metadata_only]

- set_property_flags((int)property_mask) → None :¶

Set module properties in the form of an OR combination of ModulePropFlags.

- terminate() → None :¶

This function is called by the processing once after all data is processed. Modules can override this method to perform some cleanup at shutdown. The terminate functions of all modules are called in reverse order of the initialize calls.
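The ordering of these calls can be illustrated with a toy model. The sketch below is not basf2 code: `ToyModule` and `run_lifecycle` are hypothetical names, and the beginRun/endRun calls are left out for brevity. It only shows initialize being called in path order and terminate in the reverse order.

```python
# Toy model only: ToyModule and run_lifecycle are hypothetical, not basf2 API.
class ToyModule:
    def __init__(self, name, calls):
        self.name = name
        self.calls = calls  # shared list recording the order of all calls

    def initialize(self):
        self.calls.append(f"{self.name}.initialize")

    def event(self):
        self.calls.append(f"{self.name}.event")

    def terminate(self):
        self.calls.append(f"{self.name}.terminate")


def run_lifecycle(modules, n_events):
    """Call initialize in order, event per event, terminate in reverse."""
    for module in modules:
        module.initialize()
    for _ in range(n_events):
        for module in modules:
            module.event()
    for module in reversed(modules):
        module.terminate()


calls = []
run_lifecycle([ToyModule("A", calls), ToyModule("B", calls)], n_events=1)
```

Here `calls` ends with `B.terminate` followed by `A.terminate`, so a module that was initialized first is cleaned up last.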

- type() → str :¶

Returns the type of the module (i.e. class name minus ‘Module’)

4.1.2. The Path Object¶

- class basf2.Path¶

Implements a path consisting of Module and/or Path objects (arranged in a linear order).

- __contains__()¶

Does this Path contain a module of the given type?

>>> path = basf2.Path()
>>> 'RootInput' in path
False
>>> path.add_module('RootInput')
>>> 'RootInput' in path
True

- add_independent_path(skim_path, ds_ID='', merge_back_event=None)¶

Add given path at the end of this path and ensure all modules there do not influence the main DataStore. You can thus use modules in skim_path to clean up e.g. the list of particles, save a skimmed uDST file, and continue working with the unmodified DataStore contents outside of skim_path.

- Parameters

ds_ID – can be specified to give a defined ID to the temporary DataStore, otherwise, a random name will be generated.

merge_back_event – is a list of object/array names (of event durability) that will be merged back into the main path.

- add_module(module, logLevel=None, debugLevel=None, **kwargs)¶

Add given module (either object or name) at the end of this path. All unknown arguments are passed as module parameters.

>>> path = create_path()
>>> path.add_module('EventInfoSetter', evtNumList=100, logLevel=LogLevel.ERROR)
<pybasf2.Module at 0x1e356e0>

>>> path = create_path()
>>> eventinfosetter = register_module('EventInfoSetter')
>>> path.add_module(eventinfosetter)
<pybasf2.Module at 0x2289de8>

- add_path(path)¶

Insert another path at the end of this one. For example,

>>> path.add_module('A')
>>> path.add_path(otherPath)
>>> path.add_module('B')

would create a path [ A -> [ contents of otherPath ] -> B ].

- Parameters

path (Path) – path to add to this path

- do_while(path, condition='<1', max_iterations=10000)¶

Similar to add_path() this will execute a path at the current position but it will repeat execution of this path as long as the return value of the last module in the path fulfills the given condition.

This is useful for event generation with special cuts like inclusive particle generation.

See also

Module.if_value for an explanation of the condition expression.

- Parameters

path (basf2.Path) – sub path to execute repeatedly

condition (str) – condition on the return value of the last module in path. The execution will be repeated as long as this condition is fulfilled.

max_iterations (int) – Maximum number of iterations per event. If this number is exceeded the execution is aborted.
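The repetition logic can be sketched in plain Python. `toy_do_while` below is a hypothetical helper rather than basf2 code; the condition is passed as a callable instead of a string, and the exact way the iteration limit is counted is an assumption made for the illustration.

```python
# Toy model only: toy_do_while is a hypothetical helper, not basf2 API.
def toy_do_while(sub_path, condition, max_iterations=10000):
    """Repeat sub_path while condition(return value of its last step) holds.

    Returns the number of iterations that were needed.
    """
    iterations = 0
    while True:
        if iterations >= max_iterations:
            raise RuntimeError("max_iterations exceeded, aborting")
        iterations += 1
        return_value = None
        for step in sub_path:
            return_value = step()  # only the last step's value is kept
        if not condition(return_value):
            return iterations


# A stand-in "generator" whose third attempt passes the cut (return value 1).
candidates = iter([0, 0, 1])


def generate():
    return next(candidates)


n_iterations = toy_do_while([generate], lambda value: value < 1)

# A sub path that never passes the cut runs into the iteration limit.
try:
    toy_do_while([lambda: 0], lambda value: value < 1, max_iterations=5)
    aborted = False
except RuntimeError:
    aborted = True
```

The first call needs three iterations because the condition corresponding to '<1' holds for the first two return values; the second call is aborted once the limit is reached.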

- for_each(loop_object_name, array_name, path)¶

Similar to add_path(), this will execute the given path at the current position, but in each event it will execute it once for each object in the given StoreArray array_name. It will create a StoreObject named loop_object_name of the same type as the array which will point to each element in turn for each execution.

This has the effect of calling the event() methods of modules in path for each entry in array_name.

The main use case is to use it after using the RestOfEventBuilder on a ParticleList, where you can use this feature to perform actions on only a part of the event for a given list of candidates:

>>> path.for_each('RestOfEvent', 'RestOfEvents', roe_path)

You can read this as

“for each RestOfEvent in the array of “RestOfEvents”, execute roe_path”

For example, if ‘RestOfEvents’ contains two elements then roe_path will be executed twice and during the execution a StoreObjectPtr ‘RestOfEvent’ will be available, which will point to the first element in the first execution, and the second element in the second execution.

See also

A working example of this for_each RestOfEvent is to build a veto against photons from \(\pi^0\to\gamma\gamma\). It is described in How to Veto.

Note

This feature is used by both the Flavor Tagger and Full event interpretation algorithms.

Changes to existing arrays / objects will be available to all modules after the for_each(), including those made to the loop object itself (it will simply modify the i’th item in the array looped over.)

StoreArrays / StoreObjects (of event durability) created inside the loop will be removed at the end of each iteration. So if you create a new particle list inside a for_each() path execution the particle list will not exist for the next iteration or after the for_each() is complete.

- Parameters

loop_object_name (str) – The name of the object in the datastore during each execution

array_name (str) – The name of the StoreArray to loop over where the i-th element will be available as loop_object_name during the i-th execution of path

path (basf2.Path) – The path to execute for each element in array_name
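The visibility rules for objects inside and outside the loop can be mimicked with a dictionary standing in for the DataStore. This is a toy sketch under that assumption; `toy_for_each` and the store layout are hypothetical and say nothing about how basf2 implements the feature.

```python
# Toy model only: a dict stands in for the DataStore; nothing here is basf2 API.
def toy_for_each(datastore, loop_object_name, array_name, sub_path):
    """Run sub_path once per element of datastore[array_name]."""
    for element in datastore[array_name]:
        existing = set(datastore)
        datastore[loop_object_name] = element  # points to the current element
        for step in sub_path:
            step(datastore)
        # Entries created inside the loop are dropped after each iteration.
        for key in set(datastore) - existing:
            del datastore[key]


seen = []


def tag(store):
    store["RestOfEvent"]["visited"] = True  # modifies the array element itself
    store["Scratch"] = "temporary"          # created inside the loop
    seen.append(store["RestOfEvent"]["id"])


store = {"RestOfEvents": [{"id": 1}, {"id": 2}]}
toy_for_each(store, "RestOfEvent", "RestOfEvents", [tag])
```

After the loop the change made through the loop object is still visible on both array elements, while the entry created inside the loop is gone.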

- modules()¶

Returns an ordered list of all modules in this path.

4.2. Logging¶

The logging system of the Belle II Software is rather flexible and allows extensive configuration. In the simplest case a call to set_log_level is all that is needed to set the minimum severity of messages to be printed. However, in addition to this global log level one can set the log level for specific packages and even for individual modules separately. The existing log levels are defined as

- class basf2.LogLevel¶

Class for all possible log levels

- DEBUG¶

The lowest possible severity meant for expert only information and disabled by default. In contrast to all other log levels DEBUG messages have an additional numeric indication of their priority called the debug_level to allow for different levels of verbosity.

The agreed values for debug_level are

0-9 for user code. These numbers are reserved for user analysis code and may not be used by any part of basf2.

10-19 for analysis package code. The use case is that a user wants to debug problems in analysis jobs with the help of experts.

20-29 for simulation/reconstruction code.

30-39 for core framework code.

Note

The default maximum debug level which will be shown when running basf2 --debug without any argument for --debug is 10

- INFO¶

Used for informational messages which are of use for the average user but not very important. Should be used very sparingly; everything which is of no interest to the average user should be a debug message.

- RESULT¶

Informational messages which don’t indicate an error condition but are more important than mere information. For example the calculated cross section or the output file name.

Deprecated since version release-01-00-00: use INFO messages instead

- WARNING¶

For messages which indicate something which is not correct but not fatal to the processing. This level should not be used to make informational messages more prominent. Warnings should not be ignored by the user, but they are not critical.

- ERROR¶

For messages which indicate a clear error condition which needs to be recovered. If error messages are produced before event processing is started the processing will be aborted. During processing errors don’t lead to a stop of the processing but still indicate a problem.

- basf2.set_log_level(level)[source]¶

Sets the global log level which specifies up to which level the logging messages will be shown

- Parameters

level (basf2.LogLevel) – minimum severity of messages to be logged

- basf2.set_debug_level(level)[source]¶

Sets the global debug level which specifies up to which level the debug messages should be shown

- Parameters

level (int) – The debug level. The default value is 100

- basf2.logging¶

An instance of the LogPythonInterface class for fine grained control over all settings of the logging system.
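How the log level and the debug level interact can be summarised in a few lines of plain Python. The sketch is illustrative only: `ToyLevel` uses made-up numeric values (only the ordering of severities matters) and `is_shown` is a hypothetical helper, not a basf2 function.

```python
from enum import IntEnum


# Hypothetical numeric values; only the ordering of severities matters here.
class ToyLevel(IntEnum):
    DEBUG = 0
    INFO = 1
    RESULT = 2
    WARNING = 3
    ERROR = 4
    FATAL = 5


def is_shown(level, log_level, debug_level=None, max_debug_level=10):
    """Return True if a message of the given severity would be printed.

    Messages below log_level are dropped. DEBUG messages are additionally
    dropped if their debug_level is larger than max_debug_level.
    """
    if level < log_level:
        return False
    if level == ToyLevel.DEBUG:
        return debug_level <= max_debug_level
    return True
```

For example, a DEBUG message with debug level 25 is hidden even when the log level is DEBUG, unless the maximum debug level is raised to at least 25.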

4.2.1. Creating Log Messages¶

Log messages can be created in a very similar way in Python and C++. You can call one of the logging functions like B2INFO and supply the message as a string, for example

B2INFO("This is a log message of severity INFO")

In Python you can supply multiple arguments which will all be converted to string and concatenated to form the log message

for i in range(1, 4):
    B2INFO("This is log message number ", i)

which will produce

[INFO] This is log message number 1

[INFO] This is log message number 2

[INFO] This is log message number 3

This works almost the same way in C++ except that you need the << operator

to construct the log message from multiple parts

for (int i = 1; i < 4; ++i) {
  B2INFO("This is log message " << i << " in C++");
}

Log Variables

New in version release-03-00-00.

However, the log system has an additional feature to include variable parts in a fixed message to simplify grouping of similar log messages: If a log message only differs by a number or detector name it is very hard to filter repeating messages. So we have log message variables which can be used to specify varying parts while having a fixed message.

In Python these can just be given as keyword arguments to the logging functions

B2INFO("This is a log message", number=3.14, text="some text")

In C++ this again almost works the same way but we need to specify the variables a bit more explicitly.

B2INFO("This is a log message" << LogVar("number", 3.14) << LogVar("text", "some text"));

In both cases the names of the variables can be chosen freely and the output should be something like

[INFO] This is a log message

number = 3.14

text = some text
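Why a fixed message text helps with grouping can be seen in a toy comparison that does not involve basf2 at all. The message text and the variable name `layer` below are invented for the illustration.

```python
from collections import Counter

# Toy comparison, no basf2 involved: the same three messages once with the
# number concatenated into the text and once kept as a separate variable.
concatenated = [f"Hit in layer {layer}" for layer in (1, 2, 3)]
with_variables = [("Hit in layer", {"layer": layer}) for layer in (1, 2, 3)]

# Grouping by the full text sees three unrelated messages ...
by_full_text = Counter(concatenated)
# ... while grouping by the fixed text sees one message repeated three times.
by_fixed_text = Counter(text for text, _variables in with_variables)
```

With concatenation every message is unique, so a filter on repeated messages never triggers; with variables the fixed text can be counted directly.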

Logging functions

To emit log messages from within Python we have these functions:

- basf2.B2DEBUG(debugLevel, message, *args, **kwargs)¶

Print a DEBUG message. The first argument is the debug_level. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

- basf2.B2INFO(message, *args, **kwargs)¶

Print an INFO message. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

- basf2.B2RESULT(message, *args, **kwargs)¶

Print a RESULT message. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

Deprecated since version release-01-00-00: use B2INFO() instead

- basf2.B2WARNING(message, *args, **kwargs)¶

Print a WARNING message. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

- basf2.B2ERROR(message, *args, **kwargs)¶

Print an ERROR message. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

- basf2.B2FATAL(message, *args, **kwargs)¶

Print a FATAL message. All additional positional arguments are converted to strings and concatenated to the log message. All keyword arguments are added to the function as Log Variables.

Note

This also exits the program with an error and is guaranteed to not return.

The same functions are available in C++ as macros once you have included <framework/logging/Logger.h>

4.2.2. The Logging Configuration Objects¶

The logging object provides more fine-grained control over the settings of the logging system and should be used if more than just the global log level needs to be changed.

- class basf2.LogPythonInterface¶

Logging configuration (for messages generated from C++ or Python), available as a global basf2.logging object in Python. See also basf2.set_log_level() and basf2.set_debug_level().

This class exposes an object called logging to the Python interface. With this object it is possible to set all properties of the logging system directly in the steering file in a consistent manner. This class also exposes the LogConfig class as well as the LogLevel and LogInfo enums to make setting of properties more transparent by using the names and not just the values. To set or get the log level, one can simply do:

>>> logging.log_level = LogLevel.FATAL
>>> print("Logging level set to", logging.log_level)
FATAL

This module also allows to send log messages directly from python to ease consistent error reporting throughout the framework

>>> B2WARNING("This is a warning message")

See also

For all features, see b2logging.py

- property abort_level¶

Attribute for setting/getting the log level at which to abort processing. Defaults to FATAL but can be set to a lower level in rare cases.

- add_console([(bool)enable_color]) → None :¶

Write log output to console. (In addition to existing outputs). If enable_color is not specified color will be enabled if supported

- add_file((str)filename[, (bool)append=False]) → None :¶

Write log output to given file. (In addition to existing outputs)

- add_json([(bool)complete_info=False]) → None :¶

Write log output to console, but format log messages as json objects for simplified parsing by other tools. Each log message will be printed as a one line JSON object.

New in version release-03-00-00.

- Parameters

complete_info (bool) – If this is set to True the complete log information is printed regardless of the LogInfo setting.

- add_udp((str)hostname, (int)port) → None :¶

Send the log output as a JSON object to the given hostname and port via UDP.

New in version release-04-00-00.

- Parameters

- property debug_level¶

Attribute for getting/setting the debug level. If debug messages are enabled, their level needs to be at least this high to be printed. Defaults to 100.

- property enable_escape_newlines¶

Enable or disable escaping of newlines in log messages to the console. If this is set to true then any newline character in log messages printed to the console will be replaced by a “\n” to ensure that every log message fits exactly on one line.

New in version release-04-02-00.

- property enable_python_logging¶

Enable or disable logging via Python. If this is set to true then log messages will be sent via sys.stdout. This is probably slightly slower but is useful when running in Jupyter notebooks or when trying to redirect stdout in Python to a buffer. This setting affects all log connections to the console.

New in version release-03-00-00.

- enable_summary((bool)on) → None :¶

Enable or disable the error summary printed at the end of processing. Expects one argument whether or not the summary should be shown

- get_info((LogLevel)log_level) → int :¶

Get info to print for given log level.

- Parameters

log_level (basf2.LogLevel) – Log level for which to get the display info

- property log_level¶

Attribute for setting/getting the current log level. Messages with a lower level are ignored.

- property log_stats¶

Returns dictionary with message counters.

- property max_repetitions¶

Set the maximum number of times log messages with the same level and message text (excluding variables) will be repeated before they are suppressed. Suppressed messages will still be counted but not shown for the remainder of the processing.

This also affects messages with the same text but different Log Variables. If the same log message is repeated frequently with different variables, all of these will be suppressed after the given number of repetitions.

New in version release-05-00-00.
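The suppression rule can be modelled in a few lines. `RepetitionFilter` below is a hypothetical class written for this illustration and is not the basf2 implementation; the output format is only loosely modelled on the log variable example in this manual.

```python
from collections import defaultdict


# Toy model only: RepetitionFilter is hypothetical, not the basf2 implementation.
class RepetitionFilter:
    def __init__(self, max_repetitions):
        self.max_repetitions = max_repetitions
        self.counts = defaultdict(int)

    def emit(self, level, text, **variables):
        """Count the message and return it formatted, or None if suppressed."""
        key = (level, text)  # variables are deliberately not part of the key
        self.counts[key] += 1
        if self.counts[key] > self.max_repetitions:
            return None
        details = "".join(
            f"\n\t{name} = {value}" for name, value in variables.items()
        )
        return f"[{level}] {text}{details}"


log_filter = RepetitionFilter(max_repetitions=2)
outputs = [log_filter.emit("INFO", "Hit found", layer=i) for i in range(4)]
```

The first two messages are returned, the remaining two are suppressed even though their variables differ, and the counter still records all four.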

- package((str)package) → LogConfig :¶

Get the LogConfig for given package to set detailed logging parameters for this package.

>>> logging.package('svd').debug_level = 10
>>> logging.package('svd').set_info(LogLevel.INFO, LogInfo.LEVEL | LogInfo.MESSAGE | LogInfo.FILE)

- reset() → None :¶

Remove all configured logging outputs. You can then configure your own via add_file() or add_console()

- set_info((LogLevel)log_level, (int)log_info) → None :¶

Set info to print for given log level. Should be an OR combination of basf2.LogInfo constants. As an example, to show only the level and text for all debug messages one could use

>>> basf2.logging.set_info(basf2.LogLevel.DEBUG, basf2.LogInfo.LEVEL | basf2.LogInfo.MESSAGE)

- Parameters

log_level (LogLevel) – log level for which to set the display info

log_info (int) – Bitmask of basf2.LogInfo constants.
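For readers unfamiliar with bitmasks, the OR combination can be illustrated with Python's standard enum.IntFlag. The sketch is independent of basf2: `ToyLogInfo` uses bit values chosen for the illustration (the real constants may differ), and `format_message` is a hypothetical helper.

```python
from enum import IntFlag


# Hypothetical bit values chosen for illustration; the real constants are
# defined by basf2.LogInfo and may differ.
class ToyLogInfo(IntFlag):
    LEVEL = 1
    MESSAGE = 2
    MODULE = 4
    PACKAGE = 8
    FUNCTION = 16
    FILE = 32
    LINE = 64


def format_message(mask, fields):
    """Join only those available fields whose bit is set in the mask."""
    return " ".join(
        fields[flag] for flag in ToyLogInfo if flag in mask and flag in fields
    )


mask = ToyLogInfo.LEVEL | ToyLogInfo.MESSAGE
fields = {
    ToyLogInfo.LEVEL: "[DEBUG]",
    ToyLogInfo.MESSAGE: "tracking started",
    ToyLogInfo.FILE: "Tracking.cc",
}
line = format_message(mask, fields)
```

Because the FILE bit is not part of `mask`, the file name is left out of `line` although it is available.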

- set_package((str)package, (LogConfig)config) → None :¶

Set basf2.LogConfig for given package, see also package().

- static terminal_supports_colors() → bool :¶

Returns true if the terminal supports colored output

- zero_counters() → None :¶

Reset the per-level message counters.

- class basf2.LogConfig¶

Defines logging settings (log levels and items included in each message) for a certain context, e.g. a module or package.

- property abort_level¶

set or get the severity which causes program abort

- property debug_level¶

set or get the current debug level

- get_info((LogLevel)log_level) → int :¶

get the current bitmask of which parts of the log message will be printed for a given log level

- property log_level¶

set or get the current log level

- set_abort_level((LogLevel)abort_level) → None :¶

Set the severity which causes program abort.

This can be set to a LogLevel which will cause the processing to be aborted if a message with the given level or higher is encountered. The default is FATAL. It cannot be set any higher but can be lowered.

- set_debug_level((int)debug_level) → None :¶

Set the maximum debug level to be shown. Any messages with log level DEBUG and a larger debug level will not be shown.

- set_info((LogLevel)log_level, (int)log_info) → None :¶

set the bitmask of LogInfo members to show when printing messages for a given log level

- set_log_level((LogLevel)log_level) → None :¶

Set the minimum log level to be shown. Messages with a log level below this value will not be shown at all.

- class basf2.LogInfo¶

The different fields of a log message.

These fields can be used as a bitmask to configure the appearance of log messages.

- LEVEL¶

The severity of the log message, one of basf2.LogLevel

- MESSAGE¶

The actual log message

- MODULE¶

The name of the module active when the message was emitted. Can be empty if no module was active (before/after processing or outside of the normal event loop)

- PACKAGE¶

The package the code that emitted the message belongs to. This is empty for messages emitted by python scripts

- FUNCTION¶

The function name that emitted the message

- FILE¶

The filename containing the code emitting the message

- LINE¶

The line number in the file emitting the message

4.3. Module Statistics¶

The basf2 software collects extensive statistics during event processing about the memory consumption and execution time of all modules. For most users a simple print of the statistics object will be enough; it creates a text table of the execution times and memory consumption:

import basf2

print(basf2.statistics)

However the statistics object provides full access to all the separate values

directly in python if needed. See

module_statistics.py for a full example.

Note

The memory consumption is measured by looking into /proc/PID/statm

between execution calls, so for short-running modules this might not be

accurate,

but it should give a general idea.
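As an illustration of accessing the separate values from python, the following sketch ranks modules by their summed event() time. It is not taken from module_statistics.py: the helper name `slowest_modules` is hypothetical, and it assumes that the `EVENT` counter is reachable as an attribute of the statistics object in the same way as `statistics.TOTAL`. The unit of the returned times is whatever `time_sum()` reports.

```python
def slowest_modules(stats, n=3):
    """Return ``(name, time_sum)`` for the ``n`` modules with the largest
    summed ``event()`` time, slowest first.

    ``stats`` is assumed to be ``basf2.statistics``.
    """
    # One ModuleStatistics object per module; query the EVENT counter.
    timings = [(m.name, m.time_sum(stats.EVENT)) for m in stats.modules]
    # Largest accumulated time first.
    timings.sort(key=lambda item: item[1], reverse=True)
    return timings[:n]


# Intended use after basf2.process() has returned:
#     import basf2
#     for name, total in slowest_modules(basf2.statistics):
#         print(name, total)
```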

- basf2.statistics¶

Global instance of a ProcessStatistics object containing all the statistics

- class basf2.ProcessStatistics¶

Interface for retrieving statistics about module execution at runtime or after basf2.process() returns. Should be accessed through a global instance basf2.statistics.

Statistics for event() calls are available as a string representation of the object:

>>> from basf2 import statistics
>>> print(statistics)
=================================================================================
Name                  |      Calls | Memory(MB) |    Time(s) |     Time(ms)/Call
=================================================================================
RootInput             |        101 |          0 |       0.01 |    0.05 +-   0.02
RootOutput            |        100 |          0 |       0.02 |    0.20 +-   0.87
ProgressBar           |        100 |          0 |       0.00 |    0.00 +-   0.00
=================================================================================
Total                 |        101 |          0 |       0.03 |    0.26 +-   0.86
=================================================================================

This provides information on the number of calls, elapsed time, and the average difference in resident memory before and after the event() call.

Note

The module responsible for reading (or generating) events usually has one additional event() call which is used to determine whether event processing should stop.

Warning

Memory consumption is reported as the difference in memory usage, as seen by the kernel, before and after the call. This is not the maximum memory the module has consumed. Negative values indicate that this module has freed memory which was allocated in other modules or function calls.

Information on other calls like initialize(), terminate(), etc. is also available through the different counters defined in StatisticCounters:

>>> print(statistics(statistics.INIT))
>>> print(statistics(statistics.BEGIN_RUN))
>>> print(statistics(statistics.END_RUN))
>>> print(statistics(statistics.TERM))

- class ModuleStatistics¶

Execution statistics for a single module. All member functions take exactly one argument to select which counter to query, which defaults to StatisticCounters.TOTAL if omitted.

- __reduce__()¶

Helper for pickle.

- calls(counter=StatisticCounters.TOTAL)¶

Return the total number of calls

- memory_mean(counter=StatisticCounters.TOTAL)¶

Return the mean of the memory usage

- memory_stddev(counter=StatisticCounters.TOTAL)¶

Return the standard deviation of the memory usage

- memory_sum(counter=StatisticCounters.TOTAL)¶

Return the sum of the total memory usage

- property name¶

property to get the name of the module to be displayed in the statistics

- time_mean(counter=StatisticCounters.TOTAL)¶

Return the mean of all execution times

- time_memory_corr(counter=StatisticCounters.TOTAL)¶

Return the correlation factor between time and memory consumption

- time_stddev(counter=StatisticCounters.TOTAL)¶

Return the standard deviation of all execution times

- time_sum(counter=StatisticCounters.TOTAL)¶

Return the sum of all execution times

- class StatisticCounters¶

Available types of statistic counters (corresponds to Module functions)

- INIT¶

Time spent or memory used in the initialize() function

- BEGIN_RUN¶

Time spent or memory used in the beginRun() function

- EVENT¶

Time spent or memory used in the event() function

- END_RUN¶

Time spent or memory used in the endRun() function

- TERM¶

Time spent or memory used in the terminate() function

- TOTAL¶

Time spent or memory used in any module function. This is the sum of all of the above.

- __call__(counter=StatisticCounters.EVENT, modules=None)¶

Calling the statistics object directly like a function will return a string with the execution statistics in human readable form.

- Parameters

counter (StatisticCounters) – Which counter to use

modules (list[Module]) – A list of modules to include in the returned string. If omitted the statistics for all modules will be included.

Print the beginRun() statistics for all modules:

>>> print(statistics(statistics.BEGIN_RUN))

Print the total execution times and memory consumption but only for the modules module1 and module2:

>>> print(statistics(statistics.TOTAL, [module1, module2]))

Print the event statistics (default) for only two modules:

>>> print(statistics(modules=[module1, module2]))

- __str__()¶

Return the event statistics as a string in a human readable form

- clear() → None :¶

Clear collected statistics but keep names of modules

- get((Module)module) → ModuleStatistics :¶

Get ModuleStatistics for given Module.

- get_global() → ModuleStatistics :¶

Get global ModuleStatistics containing total elapsed time etc.

- property modules¶

List of all ModuleStatistics objects.
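The counters and the per-module accessors can be combined to see where a single module spends its time. The following is a minimal sketch with a hypothetical helper name, `counter_breakdown`. It assumes that all counters are reachable as attributes of the statistics object (as `statistics.INIT` and `statistics.TOTAL` are in the examples above) and that `module` is the Module object that was added to the path.

```python
def counter_breakdown(stats, module):
    """Return ``{counter name: (calls, time_sum)}`` for one module.

    ``stats`` is assumed to be ``basf2.statistics``.
    """
    # ModuleStatistics object for the requested module.
    module_stats = stats.get(module)
    # One entry per module function that is tracked separately.
    counters = {
        "INIT": stats.INIT,
        "BEGIN_RUN": stats.BEGIN_RUN,
        "EVENT": stats.EVENT,
        "END_RUN": stats.END_RUN,
        "TERM": stats.TERM,
    }
    return {
        label: (module_stats.calls(counter), module_stats.time_sum(counter))
        for label, counter in counters.items()
    }
```

According to the description of TOTAL, the time sums of these five counters should add up to `time_sum(statistics.TOTAL)` for the same module.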

4.4. Conditions Database¶

The conditions database is the place where we store additional data needed to interpret and analyse the data that can change over time, for example the detector configuration or calibration constants.

In most cases it should not be necessary to change the configuration, except

perhaps for adding an extra globaltag to the list via conditions.globaltags
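A minimal sketch of adding one extra globaltag could look as follows. The helper name `add_globaltag` and the tag name in the usage comment are hypothetical. The sketch assumes that `conditions.globaltags` can be read as a sequence of names and assigned a new list, and that the configuration object is available as `basf2.conditions`. Placing the new tag at the end of the list is an arbitrary choice here; where it should go depends on the desired priority.

```python
def add_globaltag(conditions, name):
    """Add ``name`` to ``conditions.globaltags`` unless already present.

    Returns the resulting list of globaltag names.
    """
    tags = list(conditions.globaltags)
    if name not in tags:
        # Assumption: the property accepts a plain list of names.
        conditions.globaltags = tags + [name]
    return list(conditions.globaltags)


# Intended use in a steering file (needs a basf2 environment,
# "my_extra_tag" is a made-up name):
#     import basf2
#     add_globaltag(basf2.conditions, "my_extra_tag")
```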

4.5. Additional Functions¶

- basf2.find_file((str)filename[, (str)data_type=''[, (bool)silent=False]]) → str :¶

Try to find a file and return its full path

If data_type is empty this function will try to find the file in $BELLE2_LOCAL_DIR, in $BELLE2_RELEASE_DIR, or relative to the current working directory.

Other known data_type values are

examples – Example data for examples and tutorials. Will try to find the file in $BELLE2_EXAMPLES_DATA_DIR or relative to the current working directory.

validation – Data for validation purposes. Will try to find the file in $BELLE2_VALIDATION_DATA_DIR or relative to the current working directory.

New in version release-03-00-00.

- Parameters

filename (str) – relative filename to look for, either in a central place or in the current working directory

data_type (str) – case insensitive data type to find. Either empty string or one of "examples" or "validation"

silent (bool) – If True don’t print any errors and just return an empty string if the file cannot be found

- basf2.get_file_metadata((str)arg1) → object :¶

Return the FileMetaData object for the given output file.

- basf2.get_random_seed() → str :¶

Return the current random seed

- basf2.log_to_console(color=False)[source]¶

Adds the standard output stream to the list of logging destinations. The shell logging destination is added to the list by the framework by default.

- basf2.log_to_file(filename, append=False)[source]¶

Adds a text file to the list of logging destinations.

- Parameters

filename – The path and filename of the text file

append – Should the logging system append the messages to the end of the file (True) or create a new file for each event processing session (False). Default is False.

- basf2.set_nprocesses((int)arg1) → None :¶

Sets number of worker processes for parallel processing.

Can be overridden using the -p argument to basf2.

Note

Setting this to 1 will have one parallel worker job which is almost always slower than just running without parallel processing but is still provided to allow debugging of parallel execution.

- Parameters

nproc (int) – number of worker processes. 0 to disable parallel processing.

- basf2.set_random_seed((object)seed) → None :¶

Set the random seed. The argument can be any object and will be converted to a string using the builtin str() function and will be used to initialize the random generator.
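Because the seed is converted with str(), any object with a stable string representation can be used, for example a tuple identifying a job. The sketch below uses a hypothetical helper, `seed_for_job`, and receives the setter as an argument so that it can be exercised without basf2. The campaign name and job index in the usage comment are made-up values.

```python
def seed_for_job(set_random_seed, campaign, job_index):
    """Seed the generator from a (campaign, job_index) tuple.

    Returns the string that str() produces for the tuple, which is
    what the documentation says the seed is converted to.
    """
    seed = (campaign, job_index)
    set_random_seed(seed)
    return str(seed)


# Intended use in a steering file (needs a basf2 environment):
#     import basf2
#     seed_for_job(basf2.set_random_seed, "mc_test", 7)
```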

- basf2.set_streamobjs((list)arg1) → None :¶

Set the names of all DataStore objects which should be sent between the parallel processes. This can be used to improve parallel processing performance by removing objects not required.
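The silent mode of find_file() described above returns an empty string when nothing is found, which makes it easy to turn a missing file into a regular python exception. The following is a minimal sketch: the helper name `require_file` is hypothetical, and the arguments are passed positionally in the order given in the signature.

```python
def require_file(find_file, filename, data_type=""):
    """Return the full path of ``filename`` or raise FileNotFoundError.

    ``find_file`` is assumed to be ``basf2.find_file``.
    """
    # silent=True: no error is printed, "" signals that nothing was found.
    path = find_file(filename, data_type, True)
    if not path:
        raise FileNotFoundError(
            f"{filename!r} not found (data_type={data_type!r})"
        )
    return path


# Intended use in a steering file (needs a basf2 environment,
# the file name is a made-up example):
#     import basf2
#     path = require_file(basf2.find_file, "some_input.root", "examples")
```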

4.6. Other Modules¶

There are more tools available in the software framework which might not be of general interest to all users and are separated into different python modules:

- 4.6.1. basf2.utils - Helper functions for printing basf2 objects

- 4.6.2. basf2.pickle_path - Functions necessary to pickle and unpickle a Path

- 4.6.3. B2Tools

- 4.6.4. b2test_utils - Helper functions useful for test scripts

- 4.6.5. conditions_db

- 4.6.6. conditions_db.iov

- 4.6.7. hep_ipython_tools

- 4.6.8. iov_conditional - Functions to Execute Paths Depending on Experiment Phases

- 4.6.9. pdg - access particle definitions

- 4.6.10. rundb - Helper classes for retrieving information from the RunDB

- 4.6.11. terminal_utils - Helper functions for input from/output to a terminal