|

Belle II Software development

Classes | |

| class | HTCondorResult |

Public Member Functions | |

| get_batch_submit_script_path (self, job) | |

| can_submit (self, njobs=1) | |

| condor_q (cls, class_ads=None, job_id="", username="") | |

| condor_history (cls, class_ads=None, job_id="", username="") | |

| submit (self, job, check_can_submit=True, jobs_per_check=100) | |

| get_submit_script_path (self, job) | |

Public Attributes | |

| int | global_job_limit = self.default_global_job_limit |

| The active job limit. | |

| int | sleep_between_submission_checks = self.default_sleep_between_submission_checks |

| Seconds we wait before checking if we can submit a list of jobs. | |

| dict | backend_args = {**self.default_backend_args, **backend_args} |

| The backend args that will be applied to jobs unless the job specifies them itself. | |

Static Public Attributes | |

| str | batch_submit_script = "submit.sub" |

| HTCondor batch script (different to the wrapper script of Backend.submit_script) | |

| list | default_class_ads = ["GlobalJobId", "JobStatus", "Owner"] |

| Default ClassAd attributes to return from commands like condor_q. | |

| list | submission_cmds = [] |

| Shell command to submit a script, should be implemented in the derived class. | |

| int | default_global_job_limit = 1000 |

| Default global limit on the total number of submitted/running jobs that the user can have. | |

| int | default_sleep_between_submission_checks = 30 |

| Default time between re-checks of whether the number of active jobs is below the global job limit. | |

| str | submit_script = "submit.sh" |

| Default submission script name. | |

| str | exit_code_file = "__BACKEND_CMD_EXIT_STATUS__" |

| Default exit code file name. | |

| dict | default_backend_args = {} |

| Default backend_args. | |

Protected Member Functions | |

| _make_submit_file (self, job, submit_file_path) | |

| _add_batch_directives (self, job, batch_file) | |

| _create_cmd (self, script_path) | |

| _submit_to_batch (cls, cmd) | |

| _create_job_result (cls, job, job_id) | |

| _create_parent_job_result (cls, parent) | |

| _ (self, job, check_can_submit=True, jobs_per_check=100) | |

| _ (self, job, check_can_submit=True, jobs_per_check=100) | |

| _ (self, jobs, check_can_submit=True, jobs_per_check=100) | |

| _add_wrapper_script_setup (self, job, batch_file) | |

| _add_wrapper_script_teardown (self, job, batch_file) | |

Static Protected Member Functions | |

| _add_setup (job, batch_file) | |

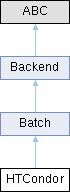

Backend for submitting calibration processes to a HTCondor batch system.

Definition at line 1924 of file backends.py.

|

protectedinherited |

Submit method of Batch backend for a `SubJob`. Should take `SubJob` object, create needed directories, create batch script, and send it off with the batch submission command. It should apply the correct options (default and user requested). Should set a Result object as an attribute of the job.

Definition at line 1211 of file backends.py.

|

protectedinherited |

Submit method of Batch backend. Should take a job object, create needed directories, create the batch script, and send it off with the batch submission command, applying the correct options (default and user requested). Should set a Result object as an attribute of the job.

Definition at line 1247 of file backends.py.

|

protectedinherited |

Submit method of Batch Backend that takes a list of jobs instead of just one and submits each one.

Definition at line 1298 of file backends.py.

|

protected |

For HTCondor leave empty as the directives are already included in the submit file.

Reimplemented from Batch.

Definition at line 1971 of file backends.py.

|

staticprotectedinherited |

Adds setup lines to the shell script file.

Definition at line 806 of file backends.py.

|

protectedinherited |

Adds lines to the submitted script that help with job monitoring/setup. Mostly here so that we can insert `trap` statements for Ctrl-C situations.

Definition at line 813 of file backends.py.

|

protectedinherited |

Adds lines to the submitted script that help with job monitoring/teardown. Mostly here so that we can write the exit code of the job command to a file. This means we can tell whether the command succeeded even if the backend server/monitoring database purges the data about our job. For example, if PBS removes job information too quickly, we may never know whether a job succeeded or failed without some kind of exit file.

Definition at line 838 of file backends.py.
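The exit-file idea can be sketched as follows. This is a minimal illustration, not the code in backends.py: `teardown_line` and `read_exit_code` are hypothetical helper names, and only the file name `__BACKEND_CMD_EXIT_STATUS__` comes from the `exit_code_file` attribute documented on this page.

```python
import tempfile
from pathlib import Path

EXIT_CODE_FILE = "__BACKEND_CMD_EXIT_STATUS__"  # value of exit_code_file


def teardown_line():
    """Hypothetical shell line that records the previous command's exit status."""
    return f"echo $? > {EXIT_CODE_FILE}"


def read_exit_code(working_dir):
    """Return the recorded exit code, or None if no exit file was written."""
    path = Path(working_dir) / EXIT_CODE_FILE
    if not path.exists():
        return None
    return int(path.read_text().strip())


# Simulate a finished job that left an exit file behind.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, EXIT_CODE_FILE).write_text("0\n")
    print(read_exit_code(tmp))
```

Reading the file back is independent of the batch system, so the result survives even when the scheduler has already dropped the job record.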

|

protected |

Reimplemented from Batch.

Definition at line 1977 of file backends.py.

|

protected |

Reimplemented from Batch.

Definition at line 2121 of file backends.py.

|

protected |

We want to be able to call `ready()` on the top level `Job.result`. So this method needs to exist so that a Job.result object actually exists. It will be mostly empty and simply updates subjob statuses and allows the use of ready().

Reimplemented from Backend.

Definition at line 2128 of file backends.py.

|

protected |

Fill HTCondor submission file.

Reimplemented from Batch.

Definition at line 1945 of file backends.py.
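As a rough sketch of what filling an HTCondor submit description file involves: such a file is a list of `key = value` commands followed by a `queue` statement. The function name `make_submit_file_text` and the example arguments below are assumptions for illustration; the real `_make_submit_file` may write different keys in a different order.

```python
def make_submit_file_text(executable, backend_args):
    """Build the text of a minimal HTCondor submit description file."""
    lines = [f"executable = {executable}"]
    # Each backend argument becomes one "key = value" submit command.
    lines += [f"{key} = {value}" for key, value in backend_args.items()]
    # "queue" tells condor_submit to enqueue one job with these settings.
    lines.append("queue")
    return "\n".join(lines) + "\n"


# Hypothetical arguments, chosen only for this example.
text = make_submit_file_text(
    "submit.sh",
    {"universe": "vanilla", "output": "stdout", "error": "stderr"},
)
print(text)
```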

|

protected |

Do the actual batch submission command and collect the output to find out the job id for later monitoring.

Reimplemented from Batch.

Definition at line 1991 of file backends.py.

| can_submit | ( | self, | |

| njobs = 1 ) |

Checks the global number of jobs in HTCondor right now (submitted or running) for this user.

Returns True if the number is lower than the limit, False if it is higher.

Parameters:

njobs (int): The number of jobs that we want to submit before checking again. Lets us check if we are sufficiently below the limit in order to (somewhat) safely submit. It is slightly dangerous to assume that it is safe to submit too many jobs, since there might be other processes also submitting jobs. So njobs really shouldn't be abused when you might be getting close to the limit, i.e. keep it <=250 and check again before submitting more.

Reimplemented from Batch.

Definition at line 2131 of file backends.py.
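The check described above can be reduced to a single comparison. The sketch below is an assumption about the logic, not a copy of the implementation: it takes the current job count as an argument (`n_active`, a hypothetical name) instead of querying HTCondor, and it treats landing exactly on the limit as acceptable.

```python
def can_submit(n_active, njobs=1, global_job_limit=1000):
    """Return True if njobs more jobs fit under the global job limit."""
    # n_active is the number of submitted or running jobs for this user.
    return n_active + njobs <= global_job_limit


print(can_submit(900, njobs=100))
print(can_submit(950, njobs=100))
```

Keeping `njobs` small narrows the window in which another process can push the total over the limit between the check and the submission.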

| condor_history | ( | cls, | |

| class_ads = None, | |||

| job_id = "", | |||

| username = "" ) |

Simplistic interface to the ``condor_history`` command. Lets you request information about all jobs matching the filters ``job_id`` and ``username``. Note that setting ``job_id`` overrides ``username``, which is then ignored.

The result is a JSON dictionary filled by the output of the ``-json`` ``condor_history`` option.

Parameters:

class_ads (list[str]): A list of condor_history ClassAds that you would like information about. By default we give ``cls.default_class_ads``. Increasing the number of class_ads increases the time taken by the condor_history call.

job_id (str): String representation of the Job ID given by condor_submit during submission. If this argument is given, then the output of this function will only contain information about this job. If this argument is not given, then all jobs matching the other filters will be returned.

username (str): By default we return information about only the current user's jobs. By giving a username you can access the job information of a specific user's jobs. By giving ``username='all'`` you will receive job information from all known user jobs matching the other filters. This is limited to 10000 records and isn't recommended.

Returns:

dict: JSON dictionary of the form:

.. code-block:: python

    {
        "NJOBS": <number of records returned by command>,
        "JOBS": [
            {
                <ClassAd: value>, ...
            }, ...
        ]
    }

Definition at line 2222 of file backends.py.
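A dictionary of the documented form can be summarised by job state. The sketch below assumes that `JobStatus` was among the requested ClassAds and uses the standard HTCondor status codes; `count_by_status` and the example records are hypothetical and exist only for illustration.

```python
from collections import Counter

# Standard HTCondor JobStatus codes.
JOB_STATUS_NAMES = {1: "Idle", 2: "Running", 3: "Removed", 4: "Completed", 5: "Held"}


def count_by_status(result):
    """Count the jobs in a condor_history-style result by status name."""
    return Counter(
        JOB_STATUS_NAMES.get(job.get("JobStatus"), "Unknown")
        for job in result["JOBS"]
    )


# Made-up records in the documented {"NJOBS": ..., "JOBS": [...]} form.
example = {
    "NJOBS": 3,
    "JOBS": [
        {"GlobalJobId": "host#1.0#1", "JobStatus": 4, "Owner": "alice"},
        {"GlobalJobId": "host#2.0#2", "JobStatus": 4, "Owner": "alice"},
        {"GlobalJobId": "host#3.0#3", "JobStatus": 3, "Owner": "alice"},
    ],
}
print(count_by_status(example))
```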

| condor_q | ( | cls, | |

| class_ads = None, | |||

| job_id = "", | |||

| username = "" ) |

Simplistic interface to the ``condor_q`` command. Lets you request information about all jobs matching the filters ``job_id`` and ``username``. Note that setting ``job_id`` overrides ``username``, which is then ignored.

The result is the JSON dictionary returned by the ``-json`` ``condor_q`` option.

Parameters:

class_ads (list[str]): A list of condor_q ClassAds that you would like information about. By default we give ``cls.default_class_ads``. Increasing the number of class_ads increases the time taken by the condor_q call.

job_id (str): String representation of the Job ID given by condor_submit during submission. If this argument is given, then the output of this function will only contain information about this job. If this argument is not given, then all jobs matching the other filters will be returned.

username (str): By default we return information about only the current user's jobs. By giving a username you can access the job information of a specific user's jobs. By giving ``username='all'`` you will receive job information from all known user jobs matching the other filters. This may be a LOT of jobs, so it isn't recommended.

Returns:

dict: JSON dictionary of the form:

.. code-block:: python

    {
        "NJOBS": <number of records returned by command>,
        "JOBS": [
            {
                <ClassAd: value>, ...
            }, ...
        ]
    }

Definition at line 2155 of file backends.py.
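The filter rules above (a job ID takes precedence over the username, and ``'all'`` is a special value) could translate into a command line roughly as follows. This is a sketch under assumptions: `build_condor_q_cmd` is a hypothetical helper, and although `-json`, `-attributes` and `-allusers` are genuine `condor_q` options, the exact command assembled in backends.py may differ.

```python
import getpass

DEFAULT_CLASS_ADS = ["GlobalJobId", "JobStatus", "Owner"]


def build_condor_q_cmd(class_ads=None, job_id="", username=""):
    """Assemble a condor_q argument list from the documented filters."""
    if not class_ads:
        class_ads = DEFAULT_CLASS_ADS
    # ClassAd attribute names are passed as one comma-separated argument.
    cmd = ["condor_q", "-json", "-attributes", ",".join(class_ads)]
    if job_id:
        # A job ID takes precedence, so the username filter is ignored.
        cmd.append(job_id)
    elif username == "all":
        cmd.append("-allusers")
    else:
        # Fall back to the current user when no username is given.
        cmd.append(username or getpass.getuser())
    return cmd


print(build_condor_q_cmd(job_id="123.0", username="alice"))
```

The resulting list could be handed to `subprocess.check_output` and the output parsed with `json.loads`, which is one way to obtain the dictionary described above.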

| get_batch_submit_script_path | ( | self, | |

| job ) |

Construct the Path object of the .sub file that we will use to describe the job.

Reimplemented from Batch.

Definition at line 1984 of file backends.py.

|

inherited |

Construct the Path object of the bash script file that we will submit. It will contain the actual job command, wrapper commands, setup commands, and any batch directives

Definition at line 859 of file backends.py.

|

inherited |

Reimplemented from Backend.

Definition at line 1204 of file backends.py.

|

inherited |

The backend args that will be applied to jobs unless the job specifies them itself.

Definition at line 796 of file backends.py.
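The attribute is built with `{**self.default_backend_args, **backend_args}`, so values passed in by the user override the class defaults key by key. A small illustration of that precedence, using made-up argument names and values:

```python
# Hypothetical defaults and overrides, chosen only for illustration.
default_backend_args = {"universe": "vanilla", "request_memory": "4 GB"}
user_backend_args = {"request_memory": "8 GB"}

# Later unpacking wins, so user-supplied values replace the defaults.
backend_args = {**default_backend_args, **user_backend_args}
print(backend_args)
```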

|

static |

HTCondor batch script (different to the wrapper script of Backend.submit_script)

Definition at line 1929 of file backends.py.

|

staticinherited |

Default backend_args.

Definition at line 788 of file backends.py.

|

static |

Default ClassAd attributes to return from commands like condor_q.

Definition at line 1943 of file backends.py.

|

staticinherited |

Default global limit on the total number of submitted/running jobs that the user can have.

This limit will not affect the total number of jobs that are eventually submitted, but jobs won't actually be submitted until this limit can be respected, i.e. until the total number of jobs in the batch system goes down. Since we submit in chunks of N jobs before checking this limit again, this value needs to be a little lower than the real batch system limit. Otherwise you could accidentally go over it during the N job submission if other processes are checking and submitting concurrently. This is quite common for the first submission of jobs from parallel calibrations.

Note that if there are other jobs already submitted for your account, then these will count towards this limit.

Definition at line 1155 of file backends.py.
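The chunked behaviour described here can be sketched as a loop that checks the limit once per chunk and sleeps while the limit is exceeded. All names below (`submit_in_chunks`, `submit_one`, the injected `can_submit` and `sleep` callables) are hypothetical and serve only to keep the example self-contained; the real submission code is structured differently.

```python
import time


def submit_in_chunks(jobs, submit_one, can_submit,
                     jobs_per_check=100, sleep_seconds=30, sleep=time.sleep):
    """Submit jobs in chunks, waiting whenever the job limit would be exceeded."""
    for start in range(0, len(jobs), jobs_per_check):
        chunk = jobs[start:start + jobs_per_check]
        # Re-check the limit before every chunk, not before every job.
        while not can_submit(len(chunk)):
            sleep(sleep_seconds)
        for job in chunk:
            submit_one(job)


# Toy run: the limit check fails once, then succeeds.
submitted, naps = [], []
answers = iter([False, True, True])
submit_in_chunks(list(range(5)), submitted.append, lambda n: next(answers),
                 jobs_per_check=3, sleep_seconds=0, sleep=naps.append)
print(submitted, naps)
```

Because the limit is only checked once per chunk, up to `jobs_per_check` jobs are submitted without a fresh check, which is why the configured limit should sit somewhat below the real batch system limit.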

|

staticinherited |

Default time between re-checks of whether the number of active jobs is below the global job limit.

Definition at line 1157 of file backends.py.

|

staticinherited |

Default exit code file name.

Definition at line 786 of file backends.py.

|

inherited |

The active job limit.

This is 'global' because we want to avoid accidentally submitting too many jobs across all current and previous submission scripts.

Definition at line 1166 of file backends.py.

|

inherited |

Seconds we wait before checking if we can submit a list of jobs.

Only relevant once we hit the global limit of active jobs, which is usually a large number.

Definition at line 1169 of file backends.py.

|

staticinherited |

Shell command to submit a script, should be implemented in the derived class.

Definition at line 1142 of file backends.py.

|

staticinherited |

Default submission script name.

Definition at line 784 of file backends.py.