Belle II Software development

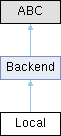

Classes | |

| class | LocalResult |

Public Member Functions | |

| __init__ (self, *, backend_args=None, max_processes=1) | |

| join (self) | |

| max_processes (self) | |

| max_processes (self, value) | |

| submit (self, job) | |

| get_submit_script_path (self, job) | |

Static Public Member Functions | |

| run_job (name, working_dir, output_dir, script) | |

Public Attributes | |

| pool = None | |

The actual Pool object of this instance of the Backend. | |

| max_processes = max_processes | |

| The size of the multiprocessing process pool. | |

| dict | backend_args = {**self.default_backend_args, **backend_args} |

| The backend args that will be applied to jobs unless the job specifies them itself. | |

Static Public Attributes | |

| str | submit_script = "submit.sh" |

| Default submission script name. | |

| str | exit_code_file = "__BACKEND_CMD_EXIT_STATUS__" |

| Default exit code file name. | |

| dict | default_backend_args = {} |

| Default backend_args. | |

Protected Member Functions | |

| _ (self, job) | |

| _ (self, job) | |

| _ (self, jobs) | |

| _create_parent_job_result (cls, parent) | |

| _add_wrapper_script_setup (self, job, batch_file) | |

| _add_wrapper_script_teardown (self, job, batch_file) | |

Static Protected Member Functions | |

| _add_setup (job, batch_file) | |

Protected Attributes | |

| _max_processes = value | |

| Internal attribute of max_processes. | |

Backend for local processes, i.e. on the same machine but in a subprocess.

Note that you should call the `join()` method to close the pool and wait for any running processes to finish before exiting the process. Once you have called `join()`, you will have to set up a new instance of this backend to create a new pool. If you don't call `Local.join` or don't create a join yourself somewhere, then the main Python process might end before your pool is done.

Keyword Arguments:

max_processes (int): The size of the process pool that spawns the subjobs, i.e. the maximum number of simultaneous subjobs (default: 1). Try not to specify a large number or a number larger than the number of cores. It won't crash the program, but it will negatively impact performance.

Definition at line 923 of file backends.py.
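
The close-and-join lifecycle described above can be sketched with the standard library. This is a minimal illustration, not the code in backends.py: `square` and `run_all` are hypothetical names, and `multiprocessing.pool.ThreadPool` is swapped in for the process pool (it exposes the same `apply_async`, `close` and `join` calls) so that the snippet runs without a `__main__` guard.

```python
from multiprocessing.pool import ThreadPool


def square(x):
    """Stand-in for the work done by one subjob (hypothetical)."""
    return x * x


def run_all(values, max_processes=1):
    """Submit every value to a pool, then close and join it."""
    # ThreadPool is a stand-in here; the backend uses a process pool.
    pool = ThreadPool(processes=max_processes)
    # apply_async returns immediately with an AsyncResult handle.
    results = [pool.apply_async(square, (v,)) for v in values]
    # Equivalent of join(): stop accepting work, then wait for the workers.
    pool.close()
    pool.join()
    return pool, [r.get() for r in results]


pool, outputs = run_all([1, 2, 3], max_processes=2)
print(outputs)

# A closed pool rejects new work, which is why a new pool is needed afterwards.
try:
    pool.apply_async(square, (4,))
except ValueError as err:
    print("pool is closed:", err)
```

Skipping the `close()`/`join()` pair is what allows the main process to exit while work is still pending.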

| __init__ (self, *, backend_args=None, max_processes=1) |

Definition at line 939 of file backends.py.

| _ (self, job) | protected |

Submission of a `SubJob` for the Local backend

Definition at line 1023 of file backends.py.

| _ (self, job) | protected |

Submission of a `Job` for the Local backend

Definition at line 1054 of file backends.py.

| _ (self, jobs) | protected |

Submit method of Local() that takes a list of jobs instead of just one and submits each one.

Definition at line 1096 of file backends.py.
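
The three `_` members above suggest that `submit` dispatches on the type of its argument. One way to get that behaviour in plain Python is `functools.singledispatchmethod`; whether backends.py uses this decorator or its own helper is an assumption, and the `Job`, `SubJob` and `DispatchingBackend` classes below are hypothetical stand-ins.

```python
from functools import singledispatchmethod


class Job:
    """Hypothetical stand-in for a job."""

    def __init__(self, name):
        self.name = name


class SubJob(Job):
    """Hypothetical stand-in for a subjob."""


class DispatchingBackend:
    def __init__(self):
        self.submitted = []

    @singledispatchmethod
    def submit(self, job):
        # Fallback for argument types with no registered handler.
        raise NotImplementedError(f"Cannot submit {type(job).__name__}")

    @submit.register(SubJob)
    def _(self, job):
        self.submitted.append(("subjob", job.name))

    @submit.register(Job)
    def _(self, job):
        self.submitted.append(("job", job.name))

    @submit.register(list)
    def _(self, jobs):
        # A list is handled by submitting each element in turn.
        for job in jobs:
            self.submit(job)


backend = DispatchingBackend()
backend.submit([Job("parent"), SubJob("child")])
print(backend.submitted)
```

The most specific registered type wins, so a `SubJob` reaches its own handler even though it is also a `Job`.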

| _add_setup (job, batch_file) | static, protected, inherited |

Adds setup lines to the shell script file.

Definition at line 806 of file backends.py.

| _add_wrapper_script_setup (self, job, batch_file) | protected, inherited |

Adds lines to the submitted script that help with job monitoring/setup. Mostly here so that we can insert `trap` statements for Ctrl-C situations.

Definition at line 813 of file backends.py.

| _add_wrapper_script_teardown (self, job, batch_file) | protected, inherited |

Adds lines to the submitted script that help with job monitoring/teardown. Mostly here so that the exit code of the job command is written out to a file. This means that we can know whether the command was successful even if the backend server or monitoring database purges the data about our job. For example, if PBS removes job information too quickly, we may never know whether a job succeeded or failed without some kind of exit file.

Definition at line 838 of file backends.py.
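
The setup and teardown wrappers described above can be illustrated with a small generator for such a script. This is a sketch under stated assumptions, not the text that backends.py writes: `write_wrapped_script` and `run_and_read_status` are hypothetical helpers, the exit codes 130 and 143 follow the usual shell convention for SIGINT and SIGTERM, and `bash` is assumed to be available.

```python
import subprocess
import tempfile
from pathlib import Path

EXIT_CODE_FILE = "__BACKEND_CMD_EXIT_STATUS__"


def write_wrapped_script(path, command, exit_code_file=EXIT_CODE_FILE):
    """Write a bash script that runs `command` and records its exit code."""
    lines = [
        "#!/bin/bash",
        # Setup: record a conventional exit code if the script is interrupted.
        f"trap 'echo 130 > {exit_code_file}; exit 130' SIGINT",
        f"trap 'echo 143 > {exit_code_file}; exit 143' SIGTERM",
        command,
        # Teardown: persist the exit code of the command to a file.
        "status=$?",
        f'echo "$status" > {exit_code_file}',
        'exit "$status"',
    ]
    Path(path).write_text("\n".join(lines) + "\n")


def run_and_read_status(command):
    """Run the wrapped command in a temporary directory and read the file."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "submit.sh"
        write_wrapped_script(script, command)
        subprocess.run(["bash", script.name], cwd=tmp, check=False)
        return int((Path(tmp) / EXIT_CODE_FILE).read_text().strip())


print(run_and_read_status("true"), run_and_read_status("false"))
```

Because the status lives in a file next to the job, it stays readable after the batch system has forgotten the job.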

| _create_parent_job_result (cls, parent) | protected |

We want to be able to call `ready()` on the top level `Job.result`. So this method needs to exist so that a Job.result object actually exists. It will be mostly empty and simply updates subjob statuses and allows the use of ready().

Reimplemented from Backend.

Definition at line 1132 of file backends.py.
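
The idea of a mostly empty parent result can be sketched as follows. Both classes are hypothetical and only illustrate the pattern of a parent `ready()` that polls its subjobs; they are not the result classes defined in backends.py.

```python
class FakeSubResult:
    """Hypothetical subjob result that knows whether it has finished."""

    def __init__(self, done):
        self.done = done

    def ready(self):
        return self.done


class ParentResult:
    """Hypothetical parent result that only aggregates its subjob results."""

    def __init__(self, subresults):
        self.subresults = subresults

    def ready(self):
        # Poll every subjob (no short-circuit) so each status gets refreshed.
        states = [sub.ready() for sub in self.subresults]
        return all(states)


pending = ParentResult([FakeSubResult(True), FakeSubResult(False)])
finished = ParentResult([FakeSubResult(True), FakeSubResult(True)])
print(pending.ready(), finished.ready())
```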

| get_submit_script_path (self, job) | inherited |

Construct the Path object of the bash script file that we will submit. It will contain the actual job command, wrapper commands, setup commands, and any batch directives.

Definition at line 859 of file backends.py.

| join (self) |

Closes and joins the Pool, letting you wait for all results currently still processing.

Definition at line 984 of file backends.py.

| max_processes (self) |

Getter for max_processes

Definition at line 995 of file backends.py.

| max_processes (self, value) |

Setter for max_processes. It also checks for a previous Pool(), waits for it to join, and then creates a new one with the new value of max_processes.

Definition at line 1002 of file backends.py.
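
A property setter that replaces the pool might look like the sketch below. `PoolOwner` is a hypothetical class, resetting `pool` to `None` inside `join` is a choice made for this example, and `multiprocessing.pool.ThreadPool` stands in for the process pool so that the snippet runs without a `__main__` guard.

```python
from multiprocessing.pool import ThreadPool


class PoolOwner:
    def __init__(self, max_processes=1):
        self.pool = None
        self.max_processes = max_processes  # goes through the setter below

    def join(self):
        """Close the current pool, if any, and wait for its workers."""
        if self.pool is not None:
            self.pool.close()
            self.pool.join()
            self.pool = None

    @property
    def max_processes(self):
        return self._max_processes

    @max_processes.setter
    def max_processes(self, value):
        self._max_processes = value
        # Let any previous pool finish before replacing it.
        self.join()
        # ThreadPool is a stand-in here; the backend uses a process pool.
        self.pool = ThreadPool(processes=value)


owner = PoolOwner(max_processes=2)
first_pool = owner.pool
owner.max_processes = 3
print(owner.max_processes, owner.pool is first_pool)
owner.join()
```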

| run_job (name, working_dir, output_dir, script) | static |

The function that is used by multiprocessing.Pool.apply_async during process creation. This runs a shell command in a subprocess and captures the stdout and stderr of the subprocess to files.

Definition at line 1106 of file backends.py.
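
Running a script in a subprocess while capturing its output to files can be sketched with `subprocess.run`. The function below borrows the parameter names listed for `run_job`, but its body is an assumption: the output file names `stdout` and `stderr`, the use of `bash`, and the `demo` helper are all hypothetical.

```python
import subprocess
import tempfile
from pathlib import Path


def run_script(name, working_dir, output_dir, script):
    """Run `script` with bash and write its stdout/stderr into output_dir."""
    stdout_path = Path(output_dir) / "stdout"  # assumed file name
    stderr_path = Path(output_dir) / "stderr"  # assumed file name
    with open(stdout_path, "w") as out, open(stderr_path, "w") as err:
        completed = subprocess.run(
            ["bash", str(script)], cwd=working_dir, stdout=out, stderr=err
        )
    print(f"{name} finished with exit code {completed.returncode}")
    return completed.returncode


def demo():
    """Run a tiny script and return its exit code and captured output."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "submit.sh"
        script.write_text("echo hello\necho oops >&2\nexit 2\n")
        code = run_script("demo_job", tmp, tmp, script)
        stdout = (Path(tmp) / "stdout").read_text()
        stderr = (Path(tmp) / "stderr").read_text()
        return code, stdout, stderr


print(demo())
```

A module-level or static function like this is convenient for `apply_async` with a process pool, since it can be pickled without dragging the backend instance along.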

| submit (self, job) |

Reimplemented from Backend.

Definition at line 1016 of file backends.py.

| _max_processes = value | protected |

Internal attribute of max_processes.

Definition at line 1008 of file backends.py.

| backend_args = {**self.default_backend_args, **backend_args} | inherited |

The backend args that will be applied to jobs unless the job specifies them itself.

Definition at line 796 of file backends.py.
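
The precedence implied by `{**self.default_backend_args, **backend_args}` can be shown with plain dictionaries. The helper name, the `None` handling, and the example keys below are assumptions for illustration; the merge expression itself is the one shown above, extended by a job-level layer to reflect "unless the job specifies them itself".

```python
# Hypothetical defaults; the Local backend's own default_backend_args is {}.
DEFAULT_BACKEND_ARGS = {"queue": "short", "retries": 0}


def resolve_backend_args(default_args, backend_args=None, job_args=None):
    """Merge three layers of arguments; later layers override earlier ones."""
    backend_args = {} if backend_args is None else backend_args
    job_args = {} if job_args is None else job_args
    # Keys in a later mapping replace the same keys from an earlier one.
    return {**default_args, **backend_args, **job_args}


merged = resolve_backend_args(
    DEFAULT_BACKEND_ARGS,
    backend_args={"queue": "long"},
    job_args={"retries": 3},
)
print(merged)
```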

| default_backend_args = {} | static, inherited |

Default backend_args.

Definition at line 788 of file backends.py.

| exit_code_file = "__BACKEND_CMD_EXIT_STATUS__" | static, inherited |

Default exit code file name.

Definition at line 786 of file backends.py.

| max_processes = max_processes |

The size of the multiprocessing process pool.

Definition at line 946 of file backends.py.

| pool = None |

The actual Pool object of this instance of the Backend.

Definition at line 944 of file backends.py.

| submit_script = "submit.sh" | static, inherited |

Default submission script name.

Definition at line 784 of file backends.py.