
5.4. Conditions Database

The conditions database stores the additional, time-dependent information needed to interpret and analyse the data, for example the detector configuration or calibration constants.

In many cases it should not be necessary to change its configuration, except perhaps to add an extra globaltag to the list via conditions.globaltags.

5.4.1. Conditions Database Overview

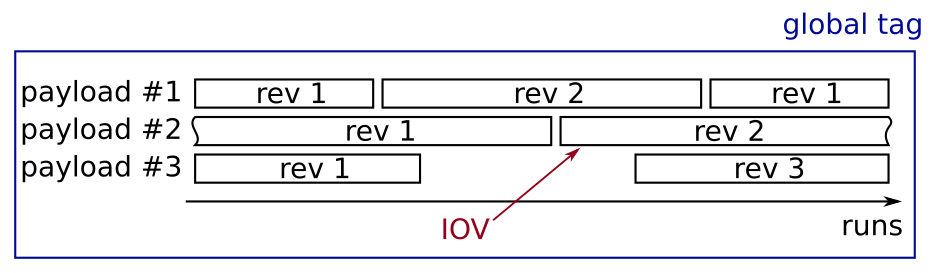

The conditions database consists of binary objects (usually ROOT files), each identified by a name and a revision number. These objects are called payloads and can have one or more intervals of validity (iov), i.e. the experiment and run ranges for which the object is deemed valid. A collection of payloads and iovs for a certain dataset is called a globaltag and is identified by a unique name.

- Payload

An atom of conditions data identified by name and revision number

usually ROOT files containing custom objects

a payload cannot be modified after creation.

- Interval of Validity (iov)

An experiment and run interval for which a payload should be valid

consists of four values:

first_exp, first_run, final_exp, final_run; the iov is still valid for the final run (inclusive end)

final_exp >= 0 && final_run < 0: valid for all runs in final_exp

final_exp < 0 && final_run < 0: valid forever, starting with first_exp, first_run

- Globaltag

A collection of payloads and iovs

has a unique name and a description

can have gaps and overlaps; a payload is not guaranteed to be present for all possible runs

is not necessarily valid for all data

is not valid for all software versions

is immutable once “published”

can be “invalidated”
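The validity rules listed for an iov can be sketched as a small Python function. This is a minimal model, assuming an iov is nothing more than the four integers above; `iov_contains` is a hypothetical helper, not part of basf2 (the framework's own class is IntervalOfValidity).

```python
def iov_contains(first_exp, first_run, final_exp, final_run, exp, run):
    """Return True if run (exp, run) lies inside the interval of validity.

    Hypothetical helper modelling the rules in the text:
    - both ends are inclusive
    - final_exp >= 0 and final_run < 0: every run of final_exp is included
    - final_exp < 0 and final_run < 0: valid forever from (first_exp, first_run)
    """
    # Tuples compare lexicographically: experiment first, then run.
    if (exp, run) < (first_exp, first_run):
        return False
    if final_exp < 0 and final_run < 0:
        return True  # no upper bound at all
    if final_run < 0:
        return exp <= final_exp  # open-ended within final_exp
    return (exp, run) <= (final_exp, final_run)  # inclusive end
```

For instance, `iov_contains(0, 0, 3, -1, 3, 1500)` is true because a negative final_run makes the interval open-ended within experiment 3, while any run in experiment 4 falls outside it. The combination final_exp < 0 with final_run >= 0 is not covered by the rules above and simply yields False in this sketch.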

Fig. 5.2 Schematic display of a globaltag: different payloads with different revisions are valid for a certain set of runs.

Once a globaltag is prepared it is usually immutable and cannot be modified any further, to ensure reproducibility of analyses: different processing iterations use different globaltags. The only exceptions are the globaltags used online during data taking and for prompt processing directly after data taking. These two globaltags are flagged as “running”, and information for new runs is continuously added to them as more data becomes available.

All globaltags used in an analysis need to be fixed; otherwise it is not possible to know exactly which conditions were used. Globaltags which are not fixed, i.e. in the “OPEN” state, cannot be used for data processing: if any globaltag in the processing chain is “OPEN”, processing will not be possible.
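The state check described above can be modelled in a few lines. This sketch assumes each globaltag's state is available as a plain string; `check_globaltags` and its input format are hypothetical, and the default set of accepted states is copied from the `usable_globaltag_states` value shown in the `expert_settings()` example on this page.

```python
# Accepted states as shown in the expert_settings() example output.
DEFAULT_USABLE_STATES = frozenset({"PUBLISHED", "RUNNING", "TESTING", "VALIDATED"})


def check_globaltags(tag_states, usable_states=DEFAULT_USABLE_STATES):
    """Raise ValueError if any globaltag is in a state not accepted for processing.

    tag_states maps globaltag name -> state string (hypothetical input format).
    """
    for name, state in tag_states.items():
        # 'INVALID' is always rejected, even if usable_states contains it.
        if state == "INVALID" or state not in usable_states:
            raise ValueError(f"globaltag {name!r} is in state {state!r} and cannot be used")
```

Passing a set that contains "OPEN" mirrors how that expert setting can enable running on an open tag, while "INVALID" stays rejected either way.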

Changed in version release-04-00-00: Prior to release-04-00-00 no check was performed on the state of globaltags, so all globaltags could be used in analysis.

When processing data, the software needs to know which payloads to use for which runs, so the globaltag (or multiple globaltags) to be used needs to be specified. In most cases this is done automatically: the globaltags used to create the file are used again when processing it, the so-called globaltag replay. However, the user can specify additional globaltags to use, or override the globaltag selection completely and disable globaltag replay.

Changed in version release-04-00-00: Prior to release-04 the globaltag always needed to be configured manually and no automatic replay was in place.

If multiple globaltags are selected, the software searches them in order and takes each payload from the first globaltag that contains it.
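A minimal sketch of this first-match rule, assuming each globaltag is represented as a plain dictionary from payload name to revision. The function, the tag names and the payload name `SomeCalibration` are hypothetical, and intervals of validity are ignored here to keep the priority logic visible.

```python
def resolve_payloads(needed, globaltags):
    """Pick, for each needed payload name, the first globaltag that provides it.

    globaltags: ordered list of (tag_name, {payload_name: revision}) pairs,
    highest priority first (hypothetical stand-in for real globaltags).
    Returns {payload_name: (tag_name, revision)}; names found nowhere are absent.
    """
    chosen = {}
    for name in needed:
        for tag_name, payloads in globaltags:
            if name in payloads:
                chosen[name] = (tag_name, payloads[name])
                break  # first hit wins; lower-priority tags are not consulted
    return chosen
```

With `user_tag` listed before `base_tag`, BeamParameters is taken from `user_tag` even though `base_tag` also has a revision of it, while SomeCalibration, present only in `base_tag`, comes from there.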

Tip

basf2 provides a set of command-line tools, called b2conditionsdb tools, for interacting with the conditions database, for example to create globaltags or upload payloads. These tools are documented in b2conditionsdb.

5.4.2. Configuring the Conditions Database

By default the software will look for conditions data in

- user globaltags: all globaltags provided by the user by setting conditions.globaltags

- globaltag replay: different base globaltags depending on the use case:

  - Processing existing files (e.g. mdst): the globaltags specified in the input file are used.

  - Generating events: the default globaltags for the current software version (conditions.default_globaltags) are used.

Globaltag replay can be disabled by calling conditions.override_globaltags().

If multiple files are processed, they all need to have the same globaltags specified; otherwise processing cannot continue unless globaltag replay is disabled.
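How these sources combine into the final list can be sketched as follows. This is a simplified model under stated assumptions, not the basf2 implementation: `compose_globaltags` is hypothetical, each input file is represented only by its list of globaltags, and user tags are placed in front of the base tags as in the default callback behavior documented for set_globaltag_callback.

```python
def compose_globaltags(user_tags, file_tags, default_tags, override=False):
    """Assemble the final globaltag list (hypothetical model).

    user_tags:    tags set via conditions.globaltags
    file_tags:    one list of globaltags per input file, or None when generating events
    default_tags: default globaltags of the software version
    """
    if override:
        # Replay disabled: the user list is used exactly as given.
        return list(user_tags)
    if file_tags is None:
        base_tags = list(default_tags)  # no input files: use the defaults
    else:
        distinct = {tuple(tags) for tags in file_tags}
        if len(distinct) != 1:
            raise RuntimeError("input files have different globaltags; "
                               "disable globaltag replay to continue")
        base_tags = list(distinct.pop())
    # User tags come first and therefore have the highest priority.
    return list(user_tags) + base_tags
```

When generating events the defaults act as base tags; when processing files, all files must agree unless `override=True`, in which case only the user list is returned.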

More advanced settings, which most users should not need, are available via the conditions object, for example the URL of the central database or where to look for previously downloaded payloads.

Changed in version release-04-00-00: In release-04 the configuration interface was changed completely. All settings are now grouped in the conditions object.

- basf2.conditions

Global instance of a basf2.ConditionsConfiguration object containing all the settings relevant for the conditions database.

- class basf2.ConditionsConfiguration

This class contains all configurations for the conditions database service:

which globaltags to use

where to look for payload information

where to find the actual payload files

which temporary testing payloads to use

For most users, however, the only setting they need to care about is the list of additional globaltags to use.

- append_globaltag(name)

Append a globaltag to the end of the globaltags list. That means it will have the lowest priority of all tags in the list.

- append_testing_payloads(filename)

Append a text file containing local test payloads to the end of the list of testing_payloads. This means its payloads will have lower priority than payloads in previously defined text files but still higher priority than globaltags.

- Parameters

filename (str) – file containing a local definition of payloads and their intervals of validity for testing

Warning

This functionality is strictly for testing purposes. Using local payloads leads to results which cannot be reproduced by anyone else and thus cannot be published.

- property default_globaltags

A tuple containing the default globaltags to be used if events are generated without an input file.

- property default_metadata_provider_url

URL of the default central metadata provider to look for payloads in the conditions database.

- disable_globaltag_replay()

Disable globaltag replay and revert to the old behavior that the default globaltag will be used if no other globaltags are specified.

This is a shortcut for calling

>>> conditions.override_globaltags()
>>> conditions.globaltags += list(conditions.default_globaltags)

- expert_settings(**kwargs)

Set some additional settings for the conditions database.

You can supply any combination of keyword-only arguments defined below. The function will return a dictionary containing all current settings.

>>> conditions.expert_settings(connection_timeout=5, max_retries=1)
{'save_payloads': 'localdb/database.txt', 'download_cache_location': '', 'download_lock_timeout': 120, 'usable_globaltag_states': {'PUBLISHED', 'RUNNING', 'TESTING', 'VALIDATED'}, 'connection_timeout': 5, 'stalled_timeout': 60, 'max_retries': 1, 'backoff_factor': 5}

Warning

Modification of these parameters should not be needed. In rare circumstances they could be used to optimize access for many jobs at once, but they should only be set by experts.

- Parameters

save_payloads (str) – Where to store new payloads created during processing. This should be a filename to contain the payload information and the payload files will be placed in the same directory as the file.

download_cache_location (str) – Where to store payloads which have been downloaded from the central server. This can be a user-defined directory; an empty string defaults to $TMPDIR/basf2-conditions, where $TMPDIR is the temporary directory defined by the system. Newly downloaded payloads will be stored in this directory in a hashed structure, see payload_locations.

download_lock_timeout (int) – How many seconds to wait for a write lock when the same payload is downloaded concurrently by different processes. If locking fails, the payload will be downloaded to a temporary file separately for each process.

usable_globaltag_states (set(str)) – Names of globaltag states accepted for processing. This can be changed to make sure that only fully published globaltags are used or to enable running on an open tag. It is not possible to allow usage of ‘INVALID’ tags, those will always be rejected.

connection_timeout (int) – timeout in seconds before connection should be aborted. 0 sets the timeout to the default (300s)

stalled_timeout (int) – timeout in seconds before a download should be aborted if the speed stays below 10 KB/s, 0 disables this timeout

max_retries (int) – maximum number of retries if the server responded with an HTTP status code of 500 or more. 0 disables retrying

backoff_factor (int) – backoff factor for retries in seconds. Retries are performed using something similar to binary exponential backoff: for retry \(n\) and a backoff_factor \(f\) we wait for a random time chosen uniformly from the interval \([1, (2^{n} - 1) \times f]\) in seconds.
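The retry wait time can be illustrated with a short Python function. `retry_wait_seconds` is a hypothetical name and this is only a sketch of the formula above, not the code basf2 uses; the `max(1, ...)` guard for a backoff factor of 0 is an assumption added here so that the interval stays well-formed.

```python
import random


def retry_wait_seconds(n, backoff_factor, rng=random):
    """Random wait in seconds before retry number n (n >= 1).

    Drawn uniformly from [1, (2**n - 1) * backoff_factor], as in the formula above.
    rng can be the random module or a random.Random instance for reproducibility.
    """
    if n < 1:
        raise ValueError("retry numbers start at 1")
    upper = (2 ** n - 1) * backoff_factor
    # Assumption: never let the upper bound drop below the lower bound of 1 s.
    return rng.uniform(1, max(1, upper))
```

With the default backoff_factor of 5 shown in the expert_settings() example, the first retry waits between 1 and 5 seconds, the second between 1 and 15 and the third between 1 and 35, so the spread of waiting times roughly doubles with every retry.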

- property globaltags

List of globaltags to be used. These globaltags will have the highest priority, but by default the globaltags used to create the input files, or the default globaltag, will also be used.

The priority of the globaltags in this list is highest first. So the first in the list will be checked first and all other globaltags will only be checked for payloads not found so far.

Warning

By default this list contains the globaltags to be used in addition to the ones from the input file, or the default one if no input file is present. If this is not desirable, you need to call override_globaltags() to disable any addition or modification of this list.

- property metadata_providers

List of metadata providers to use when looking for payload metadata. There are currently two supported providers:

- Central metadata provider to look for payloads in the central conditions database. This provider is used for any entry in this list which starts with http(s)://. The URL should point to the top level of the REST API endpoints on the server.

- Local metadata provider to look for payloads in a local SQLite snapshot taken from the central server. This provider is assumed for any entry in this list not starting with a protocol specifier, or if the protocol is given as file://.

This list should rarely need to be changed. The only exception is for users who want to use the software without an internet connection: after downloading a snapshot of the necessary globaltags with b2conditionsdb download, they can point this list to the downloaded location.
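The rule for choosing a provider from each entry can be sketched like this. `provider_kind` is a hypothetical helper, the example URL and paths are placeholders, and raising an error for any other protocol is an assumption based on there being only two supported providers.

```python
def provider_kind(entry):
    """Classify a metadata_providers entry as 'central' or 'local'."""
    if entry.startswith(("http://", "https://")):
        return "central"  # REST API of a conditions database server
    if entry.startswith("file://") or "://" not in entry:
        return "local"  # SQLite snapshot on disk
    # Assumption: any other protocol is not supported.
    raise ValueError(f"unsupported protocol in {entry!r}")
```

A plain path such as `/data/metadata.sqlite` and its `file://` form are both treated as local snapshots.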

- property override_enabled

Indicator whether or not the override of globaltags is enabled. If true, globaltags present in input files will be ignored and only the ones given in globaltags will be considered.

- override_globaltags(list=None)

Enable globaltag override. This disables all modification of the globaltag list at the beginning of processing:

- the default globaltag or the input file globaltags will be ignored.

- any callback set with set_globaltag_callback will be ignored.

- the list of globaltags will be used exactly as it is.

Warning

It is still possible to modify globaltags after this call.

- property payload_locations

List of payload locations to search for payloads which have been found by any of the configured metadata_providers. This can be a local directory or an http(s):// URL pointing to the payload directory on a server.

For remote locations starting with http(s):// we assume that the layout of the payloads on the server is the same as on the main payload server: the combination of the given location and the relative URL in the payload metadata field payloadUrl should point to the correct payload on the server.

For local directories, two layouts are supported and will be auto-detected:

- flat

All payloads are in the same directory without any substructure, with the name

dbstore_{name}_rev_{revision}.root

- hashed

All payloads are stored in subdirectories in the form

AB/{name}_r{revision}.root, where A and B are the first two characters of the md5 checksum of the payload file.

Example

Given payload_locations = ["payload_dir/", "http://server.com/payloads"], the framework would look for a payload with name BeamParameters in revision 45 (and checksum a34ce5...) in the following places:

payload_dir/a3/BeamParameters_r45.root

payload_dir/dbstore_BeamParameters_rev_45.root

http://server.com/payloads/dbstore/BeamParameters/dbstore_BeamParameters_rev_45.root

given the usual pattern of the payloadUrl metadata. However, this could be changed on the central servers, so mirrors should not depend on this convention but copy the actual structure of the central server.

If the payload cannot be found in any of the given locations the framework will always attempt to download it directly from the central server and put it in a local cache directory.
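The lookup order in this example can be reproduced with a small sketch. `candidate_paths` is hypothetical; it lists both local layouts for every directory (the framework auto-detects which one a directory actually uses), and it assumes the usual payloadUrl pattern from the example. Only the first two characters of the checksum matter, so the shortened checksum from the example is enough.

```python
import posixpath


def candidate_paths(locations, name, revision, checksum, payload_url):
    """List the places a payload would be looked for, in order (hypothetical).

    payload_url is the relative URL from the payload metadata (payloadUrl).
    """
    paths = []
    for loc in locations:
        if loc.startswith(("http://", "https://")):
            # Remote mirror: location + relative payloadUrl.
            paths.append(loc.rstrip("/") + "/" + payload_url.lstrip("/"))
        else:
            # hashed layout: subdirectory named after the first two checksum characters
            paths.append(posixpath.join(loc, checksum[:2], f"{name}_r{revision}.root"))
            # flat layout: everything in one directory
            paths.append(posixpath.join(loc, f"dbstore_{name}_rev_{revision}.root"))
    return paths
```

Called with the locations, name, revision and payloadUrl from the example, it returns the three paths listed there in the same order.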

- prepend_globaltag(name)

Add a globaltag to the beginning of the globaltags list. That means it will have the highest priority of all tags in the list.

- prepend_testing_payloads(filename)

Insert a text file containing local test payloads at the beginning of the list of testing_payloads. This means its payloads will have higher priority than payloads in previously defined text files as well as higher priority than globaltags.

- Parameters

filename (str) – file containing a local definition of payloads and their intervals of validity for testing

Warning

This functionality is strictly for testing purposes. Using local payloads leads to results which cannot be reproduced by anyone else and thus cannot be published.

- reset()

Reset the conditions database configuration to its original state.

- set_globaltag_callback(function)

Set a callback function to be called just before processing.

This callback can be used to further customize the globaltags to be used during processing. It will be called after the input files have been opened and checked with three keyword arguments:

- base_tags

The globaltags determined from either the input files or, if no input files are present, the default globaltags

- user_tags

The globaltags provided by the user

- metadata

If there are no input files (e.g. when generating events), this argument is None. Otherwise it is a list of all the FileMetaData instances from all input files. This list can be empty if there is no metadata associated with the input files.

From this information the callback function should compose the final list of globaltags to be used for processing and return this list. If None is returned, the default behavior is applied as if there were no callback function. If anything other than a list or None is returned, processing is aborted.

If no callback function is specified, the default behavior is equivalent to

def callback(base_tags, user_tags, metadata):
    if not base_tags:
        basf2.B2FATAL("No baseline globaltags available. Please use override")
    return user_tags + base_tags

If override_enabled is True, the callback function will not be called.

Warning

If a callback is set, it is responsible for selecting the correct list of globaltags and for making sure that all files are compatible. No further checks will be done by the framework: any list of globaltags which is returned will be used exactly as it is.

If the list of base_tags is empty, that usually means that the input files had different globaltag settings, but it is the responsibility of the callback to then verify whether the list of globaltags is usable or not. If the callback function determines that no working set of globaltags can be found, it should abort processing using a FATAL error or an exception.
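As an illustration, a custom callback that always puts an extra analysis globaltag first might look like the sketch below. The tag name `my_analysis_tag` and the function name are hypothetical; the callback raises an exception instead of calling basf2.B2FATAL so that it runs without basf2, which the text above allows as a way to abort processing.

```python
def prefer_analysis_tag(base_tags, user_tags, metadata):
    """Example globaltag callback (hypothetical tag 'my_analysis_tag').

    Order: extra analysis tag, then the user tags, then the base tags.
    """
    if not base_tags:
        # Usually means the input files disagree on their globaltags:
        # abort instead of guessing a list.
        raise RuntimeError("no common baseline globaltags; refusing to continue")
    tags = ["my_analysis_tag"] + list(user_tags) + list(base_tags)
    # Drop duplicates but keep the first, i.e. highest-priority, occurrence.
    return list(dict.fromkeys(tags))
```

In a steering file it would then be registered with `conditions.set_globaltag_callback(prefer_analysis_tag)`. Removing duplicates is a design choice of this sketch; since the first occurrence of a tag already has the higher priority, later copies would never be consulted anyway.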

- property testing_payloads

List of text files to look for local testing payloads. Each entry should be a text file containing local payloads and their intervals of validity to be used for testing.

Payloads found in these files and valid for the current run will have a higher priority than any of the globaltags. If a valid payload is present in multiple files, the first one in the list will have higher priority.

Warning

This functionality is strictly for testing purposes. Using local payloads leads to results which cannot be reproduced by anyone else and thus cannot be published.
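The resulting priority order, testing files first and then globaltags, can be sketched as follows. The data layouts, `pick_payload`, the file name `local_payloads.txt` and the revision string are hypothetical stand-ins for the real text files and globaltags; the validity check implements the iov rules from the overview.

```python
def _iov_valid(iov, exp, run):
    """iov = (first_exp, first_run, final_exp, final_run), inclusive end."""
    first_exp, first_run, final_exp, final_run = iov
    if (exp, run) < (first_exp, first_run):
        return False
    if final_exp < 0 and final_run < 0:
        return True  # valid forever
    if final_run < 0:
        return exp <= final_exp  # all runs of final_exp
    return (exp, run) <= (final_exp, final_run)


def pick_payload(name, exp, run, testing_files, globaltags):
    """Return (source_kind, source_name, revision), or None if not found.

    testing_files: ordered list of (filename, [(payload_name, revision, iov), ...])
    globaltags:    ordered list of (tag_name, {payload_name: revision})
    """
    # 1. Testing payloads valid for this run win; earlier files beat later ones.
    for filename, entries in testing_files:
        for payload_name, revision, iov in entries:
            if payload_name == name and _iov_valid(iov, exp, run):
                return ("testing", filename, revision)
    # 2. Otherwise fall back to the globaltags in priority order.
    for tag_name, payloads in globaltags:
        if name in payloads:
            return ("globaltag", tag_name, payloads[name])
    return None
```

A testing payload only shadows the globaltags for runs inside its own iov; outside it, the globaltag revision is used again.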

Configuration in C++

You can also configure all of these settings in C++ when writing standalone tools that use the conditions data. This works very similarly to the configuration in Python, as all the settings are exposed by a single class:

#include <framework/database/Configuration.h>

// The configuration is a singleton shared by the whole process
auto& config = Belle2::Conditions::Configuration::getInstance();
config.prependGlobaltag("some_tag");

Environment Variables

These settings can also be modified by setting environment variables:

- BELLE2_CONDB_GLOBALTAG

If set, this overrides the default globaltag returned by conditions.default_globaltags. This can be a list of tags separated by whitespace, in which case all of these globaltags are searched.

export BELLE2_CONDB_GLOBALTAG="defaultTag additionalTag"

Changed in version release-04-00-00: This setting doesn’t affect globaltag replay, only the default globaltag used when replay is disabled or events are generated.

Previously, setting it to an empty value disabled access to the central database; now it just sets an empty list of default globaltags.

- BELLE2_CONDB_SERVERLIST

This environment variable can be set to a list of URLs to look for the conditions database. The URLs will be inserted at the beginning of conditions.metadata_providers. If BELLE2_CONDB_METADATA is also set, it takes precedence over this setting.

New in version release-04-00-00.

- BELLE2_CONDB_METADATA

A whitespace-separated list of URLs and SQLite files to look for payload information. Will be used to populate conditions.metadata_providers.

New in version release-04-00-00.

- BELLE2_CONDB_PAYLOADS

A whitespace-separated list of directories or URLs to look for payload files. Will be used to populate conditions.payload_locations.

New in version release-04-00-00.
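A sketch of how the metadata-related variables could map onto metadata_providers. `metadata_providers_from_env` is hypothetical, and several details are assumptions not confirmed by the text above: BELLE2_CONDB_METADATA is taken to replace the list entirely, BELLE2_CONDB_SERVERLIST is assumed to be whitespace-separated like the other variables, and an empty value is treated like an unset one.

```python
import os


def metadata_providers_from_env(defaults, environ=None):
    """Derive the metadata provider list from environment variables (hypothetical model)."""
    environ = os.environ if environ is None else environ
    metadata = environ.get("BELLE2_CONDB_METADATA")
    if metadata:
        # Takes precedence over BELLE2_CONDB_SERVERLIST (assumed to replace the list).
        return metadata.split()
    serverlist = environ.get("BELLE2_CONDB_SERVERLIST")
    if serverlist:
        # Server URLs go in front of the default providers.
        return serverlist.split() + list(defaults)
    return list(defaults)
```

Passing an explicit dictionary as `environ` makes the precedence easy to try out without touching the real environment.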

- BELLE2_CONDB_PROXY

Can be set to specify a proxy server to use for all connections to the central database. The parameter should be a string holding the host name or dotted numerical IP address. A numerical IPv6 address must be written within [brackets]. To specify a port number in this string, append :[port] to the end of the host name. If not specified, libcurl will default to using port 1080 for proxies. The proxy string should be prefixed with [scheme]:// to specify which kind of proxy is used:

- http

HTTP Proxy. Default when no scheme or proxy type is specified.

- https

HTTPS Proxy.

- socks4

SOCKS4 Proxy.

- socks4a

SOCKS4a Proxy. Proxy resolves URL hostname.

- socks5

SOCKS5 Proxy.

- socks5h

SOCKS5 Proxy. Proxy resolves URL hostname.

export BELLE2_CONDB_PROXY="http://192.168.178.1:8081"

If it is not set, the default proxy configuration is used (e.g. honoring $http_proxy). If it is set to an empty value, a direct connection is used.
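To make the accepted format concrete, here is a sketch that splits such a proxy string into scheme, host and port using Python's urllib.parse. It only mirrors the rules listed above (http as default scheme, port 1080 as default, IPv6 addresses in brackets); `parse_proxy` is hypothetical and is not how libcurl parses the value.

```python
from urllib.parse import urlsplit

PROXY_SCHEMES = {"http", "https", "socks4", "socks4a", "socks5", "socks5h"}


def parse_proxy(value, default_port=1080):
    """Split a BELLE2_CONDB_PROXY-style string into (scheme, host, port)."""
    if "://" not in value:
        value = "http://" + value  # no scheme given: HTTP proxy
    parts = urlsplit(value)
    scheme = parts.scheme.lower()
    if scheme not in PROXY_SCHEMES:
        raise ValueError(f"unsupported proxy scheme {scheme!r}")
    # hostname strips the [brackets] around IPv6 addresses.
    port = parts.port if parts.port is not None else default_port
    return scheme, parts.hostname, port
```

For the export example above, `parse_proxy("http://192.168.178.1:8081")` gives `("http", "192.168.178.1", 8081)`, while a bare host name falls back to an HTTP proxy on port 1080.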

5.4.3. Offline Mode

Sometimes you might need to run the software without internet access, for example when trying to develop on a plane or somewhere else where internet is spotty and unreliable.

To do that you need to download all the information on the payloads and the payload files themselves before running the software:

1. Find out which globaltags you need. If you just want to run generation/simulation, you might only need the default globaltag. To see which one that is, you can run

basf2 --info

If you want to run over existing files, you might want to check which globaltags are stored in the file metadata using

b2file-metadata-show --all inputfile.root

2. Download all the necessary information using the b2conditionsdb command line tool. The tool will automatically download all information in a globaltag as well as the payload files, putting the metadata in an SQLite file and the payload files in the same directory:

b2conditionsdb download -o /path/where/to/download/metadata.sqlite globaltag1 globaltag2 ...

3. Tell the software to use only this downloaded information and not try to contact the central server. This can be done in the steering file with the conditions.metadata_providers and conditions.payload_locations settings, but the easiest way is to use environment variables:

export BELLE2_CONDB_METADATA=/path/where/to/download/metadata.sqlite
export BELLE2_CONDB_PAYLOADS=/path/where/to/download

5.4.4. Creation of new payloads

New payloads can be created by calling Belle2::Database::storeData() from either C++ or Python. It takes a TObject* or TClonesArray* pointer to the payload data and an interval of validity. Optionally, the first argument can be the name under which to store the payload; it usually defaults to the class name of the object.

#include <framework/database/Database.h>
#include <framework/database/IntervalOfValidity.h>
#include <framework/dbobjects/BeamParameters.h>
#include <memory>

using namespace Belle2;

// Create the payload object and store it with an iov covering all runs
auto beamParams = std::make_unique<BeamParameters>();
Database::Instance().storeData(beamParams.get(), IntervalOfValidity::always());

In Python the name of the payload cannot be inferred, so it always needs to be specified explicitly:

from ROOT import Belle2

beam_params = Belle2.BeamParameters()

iov = Belle2.IntervalOfValidity(0,0,3,-1)

Belle2.Database.Instance().storeData("BeamParameters", beam_params, iov)

By default this will create new payload files in the subdirectory “localdb” relative to the current working directory and also create a text file containing the metadata for the payload files.

These payloads can then be tested by adding the filename of the text file to conditions.testing_payloads and, once satisfied, uploaded with b2conditionsdb-upload or b2conditionsdb-request.

Changed in version release-06-00-00.

When new payloads are created via Belle2::Database::storeData, they are assigned a revision number consisting of the first few characters of the checksum of the payload file. This is done for efficient creation of payload files, but also to distinguish locally created payload files from payloads downloaded from the database.

If a payload has an alphanumeric string similar to a git commit hash as revision number, it was created locally.

If a payload has a numeric revision number, it was either downloaded from the database or created with an older release version.

In any case, the local revision number is not related to the revision number obtained when uploading a payload to the server.
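The rule of thumb above can be written as a tiny heuristic. `revision_origin` is hypothetical; note that a checksum prefix could in principle consist only of digits, in which case this check would misclassify a locally created payload.

```python
def revision_origin(revision):
    """Guess where a payload revision came from (heuristic, see caveat above)."""
    text = str(revision)
    if text.isdigit():
        # Purely numeric: central database, or a release before release-06-00-00.
        return "database or older release"
    # Hash-like alphanumeric string: created locally via storeData.
    return "created locally"
```

Because the local revision is unrelated to the one assigned on upload, this only tells you where a payload file came from, not which server revision it corresponds to.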