{

  Weightfile weightfile;
  std::string custom_weightfile = weightfile.generateFileName();
  std::string custom_steeringfile = weightfile.generateFileName();

  uint64_t numberOfFeatures = training_data.getNumberOfFeatures();
  uint64_t numberOfSpectators = training_data.getNumberOfSpectators();
  uint64_t numberOfEvents = training_data.getNumberOfEvents();

    B2ERROR("Please provide a positive training fraction");
    throw std::runtime_error("Please provide a training fraction between (0.0,1.0]");
  }

  numberOfTrainingEvents = numberOfTrainingEvents / 100 + (numberOfTrainingEvents % 100 != 0);
  auto numberOfValidationEvents = numberOfEvents - numberOfTrainingEvents;

  if (batch_size == 0) {
    batch_size = numberOfTrainingEvents;
  }

  if (batch_size > numberOfTrainingEvents) {
    B2WARNING("Mini batch size (" << batch_size << ") is larger than the number of training events (" << numberOfTrainingEvents << ")."
              " The batch size has been set equal to the number of training events.");
    batch_size = numberOfTrainingEvents;
  }

  auto X = std::unique_ptr<float[]>(new float[batch_size * numberOfFeatures]);
  auto S = std::unique_ptr<float[]>(new float[batch_size * numberOfSpectators]);
  auto y = std::unique_ptr<float[]>(new float[batch_size]);
  auto w = std::unique_ptr<float[]>(new float[batch_size]);
  npy_intp dimensions_X[2] = {static_cast<npy_intp>(batch_size), static_cast<npy_intp>(numberOfFeatures)};
  npy_intp dimensions_S[2] = {static_cast<npy_intp>(batch_size), static_cast<npy_intp>(numberOfSpectators)};
  npy_intp dimensions_y[2] = {static_cast<npy_intp>(batch_size), 1};
  npy_intp dimensions_w[2] = {static_cast<npy_intp>(batch_size), 1};

  auto X_v = std::unique_ptr<float[]>(new float[numberOfValidationEvents * numberOfFeatures]);
  auto S_v = std::unique_ptr<float[]>(new float[numberOfValidationEvents * numberOfSpectators]);
  auto y_v = std::unique_ptr<float[]>(new float[numberOfValidationEvents]);
  auto w_v = std::unique_ptr<float[]>(new float[numberOfValidationEvents]);
  npy_intp dimensions_X_v[2] = {static_cast<npy_intp>(numberOfValidationEvents), static_cast<npy_intp>(numberOfFeatures)};
  npy_intp dimensions_S_v[2] = {static_cast<npy_intp>(numberOfValidationEvents), static_cast<npy_intp>(numberOfSpectators)};
  npy_intp dimensions_y_v[2] = {static_cast<npy_intp>(numberOfValidationEvents), 1};
  npy_intp dimensions_w_v[2] = {static_cast<npy_intp>(numberOfValidationEvents), 1};

  std::string steering_file_source_code;
    std::ifstream steering_file(filename);
    if (not steering_file) {
      throw std::runtime_error(std::string("Couldn't open file ") + filename);
    }
    steering_file.seekg(0, std::ios::end);
    steering_file_source_code.resize(steering_file.tellg());
    steering_file.seekg(0, std::ios::beg);
    steering_file.read(&steering_file_source_code[0], steering_file_source_code.size());
  }

  std::vector<float> means(numberOfFeatures, 0.0);
  std::vector<float> stds(numberOfFeatures, 0.0);

    auto weights = training_data.getWeights();
    for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature) {
      double wSum = 0.0;
      double mean = 0.0;
      double running_std = 0.0;
      auto feature = training_data.getFeature(iFeature);
      for (uint64_t i = 0; i < weights.size(); ++i) {
        wSum += weights[i];
        double meanOld = mean;
        mean += (weights[i] / wSum) * (feature[i] - meanOld);
        running_std += weights[i] * (feature[i] - meanOld) * (feature[i] - mean);
      }
      means[iFeature] = mean;
      stds[iFeature] = std::sqrt(running_std / (wSum - 1));
    }
  }

  try {

    auto json = boost::python::import("json");
    auto builtins = boost::python::import("builtins");
    auto inspect = boost::python::import("inspect");

    builtins.attr("exec")(steering_file_source_code.c_str(), boost::python::object(framework.attr("__dict__")));

    auto model = framework.attr("get_model")(numberOfFeatures, numberOfSpectators,

    for (uint64_t iEvent = 0; iEvent < numberOfValidationEvents; ++iEvent) {
      training_data.loadEvent(iEvent);
        for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature)
          X_v[iEvent * numberOfFeatures + iFeature] = (training_data.m_input[iFeature] - means[iFeature]) / stds[iFeature];
      } else {
        for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature)
          X_v[iEvent * numberOfFeatures + iFeature] = training_data.m_input[iFeature];
      }
      for (uint64_t iSpectator = 0; iSpectator < numberOfSpectators; ++iSpectator)
        S_v[iEvent * numberOfSpectators + iSpectator] = training_data.m_spectators[iSpectator];
      y_v[iEvent] = training_data.m_target;
      w_v[iEvent] = training_data.m_weight;
    }

    auto ndarray_X_v = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_X_v, NPY_FLOAT32, X_v.get()));
    auto ndarray_S_v = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_S_v, NPY_FLOAT32, S_v.get()));
    auto ndarray_y_v = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_y_v, NPY_FLOAT32, y_v.get()));
    auto ndarray_w_v = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_w_v, NPY_FLOAT32, w_v.get()));

    // Integer division: a trailing partial batch is dropped.
    uint64_t nBatches = numberOfTrainingEvents / batch_size;

    auto state = framework.attr("begin_fit")(model, ndarray_X_v, ndarray_S_v, ndarray_y_v, ndarray_w_v, nBatches);

    bool continue_loop = true;

    std::vector<uint64_t> iteration_index_vector(numberOfTrainingEvents);
    std::iota(std::begin(iteration_index_vector), std::end(iteration_index_vector), 0);

         and continue_loop; ++iIteration) {

      if (iIteration > 0) std::shuffle(std::begin(iteration_index_vector), std::end(iteration_index_vector), TRandomWrapper());

      for (uint64_t iBatch = 0; iBatch < nBatches and continue_loop; ++iBatch) {

        PyThreadState* m_thread_state = PyEval_SaveThread();
        for (uint64_t iEvent = 0; iEvent < batch_size; ++iEvent) {
          training_data.loadEvent(iteration_index_vector.at(iEvent + iBatch * batch_size) + numberOfValidationEvents);
            for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature)
              X[iEvent * numberOfFeatures + iFeature] = (training_data.m_input[iFeature] - means[iFeature]) / stds[iFeature];
          } else {
            for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature)
              X[iEvent * numberOfFeatures + iFeature] = training_data.m_input[iFeature];
          }
          for (uint64_t iSpectator = 0; iSpectator < numberOfSpectators; ++iSpectator)
            S[iEvent * numberOfSpectators + iSpectator] = training_data.m_spectators[iSpectator];
          y[iEvent] = training_data.m_target;
          w[iEvent] = training_data.m_weight;
        }

        auto ndarray_X = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_X, NPY_FLOAT32, X.get()));
        auto ndarray_S = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_S, NPY_FLOAT32, S.get()));
        auto ndarray_y = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_y, NPY_FLOAT32, y.get()));
        auto ndarray_w = boost::python::handle<>(PyArray_SimpleNewFromData(2, dimensions_w, NPY_FLOAT32, w.get()));

        PyEval_RestoreThread(m_thread_state);
        auto r = framework.attr("partial_fit")(state, ndarray_X, ndarray_S, ndarray_y,
                                               ndarray_w, iIteration, iBatch);
        boost::python::extract<bool> proxy(r);
        if (proxy.check())
          continue_loop = static_cast<bool>(proxy);
      }
    }

    auto result = framework.attr("end_fit")(state);

    auto pickle = boost::python::import("pickle");
    auto file = builtins.attr("open")(custom_weightfile.c_str(), "wb");
    pickle.attr("dump")(result, file);

    auto steeringfile = builtins.attr("open")(custom_steeringfile.c_str(), "wb");
    pickle.attr("dump")(steering_file_source_code.c_str(), steeringfile);

    auto importances = framework.attr("feature_importance")(state);
    if (len(importances) == 0) {
      B2INFO("Python method returned empty feature importance. There won't be any information about the feature importance in the weightfile.");
    } else if (numberOfFeatures != static_cast<uint64_t>(len(importances))) {
      B2WARNING("Python method didn't return the correct number of importance values. Ignoring the importances.");
    } else {
      std::map<std::string, float> feature_importances;
      for (uint64_t iFeature = 0; iFeature < numberOfFeatures; ++iFeature) {
        boost::python::extract<float> proxy(importances[iFeature]);
        if (proxy.check()) {
        } else {
          B2WARNING("Failed to convert importance output of the method to a float, using 0 instead");
        }
      }
      weightfile.addFeatureImportance(feature_importances);
    }

  } catch (...) {
    PyErr_Print();
    PyErr_Clear();
    B2ERROR("Failed calling train in PythonTeacher");
    throw std::runtime_error(std::string("Failed calling train in PythonTeacher"));
  }

  weightfile.addFile("Python_Weightfile", custom_weightfile);
  weightfile.addFile("Python_Steeringfile", custom_steeringfile);
  weightfile.addSignalFraction(training_data.getSignalFraction());
    weightfile.addVector("Python_Means", means);
    weightfile.addVector("Python_Stds", stds);
  }

  return weightfile;

}
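
The normalization loop in the listing accumulates a weighted mean and a weighted sum of squared deviations in a single pass over the events. As a minimal standalone sketch of that update rule, the helper below reproduces it for one feature. The names `weightedMeanStd` and `standardize` are hypothetical and not part of the listing, and the guards against a zero running weight sum and against a total weight of at most 1 are added assumptions that the listing does not contain.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical helper (not in the listing): single-pass weighted mean and
// standard deviation, using the same update rule as the normalization loop.
std::pair<double, double> weightedMeanStd(const std::vector<float>& feature,
                                          const std::vector<float>& weights)
{
  if (feature.size() != weights.size())
    throw std::invalid_argument("feature and weights must have the same length");

  double wSum = 0.0;        // running sum of weights
  double mean = 0.0;        // running weighted mean
  double runningStd = 0.0;  // running weighted sum of squared deviations
  for (std::size_t i = 0; i < feature.size(); ++i) {
    wSum += weights[i];
    if (wSum == 0.0) continue;  // added guard (assumption): avoids 0/0 below
    const double meanOld = mean;
    mean += (weights[i] / wSum) * (feature[i] - meanOld);
    runningStd += weights[i] * (feature[i] - meanOld) * (feature[i] - mean);
  }

  // The listing divides by (wSum - 1); this only makes sense for wSum > 1.
  if (wSum <= 1.0)
    throw std::invalid_argument("sum of weights must be larger than 1");
  return {mean, std::sqrt(runningStd / (wSum - 1))};
}

// Hypothetical helper: the transformation applied to each input when
// normalization is enabled, (x - mean) / std.
float standardize(float x, double mean, double std)
{
  return static_cast<float>((x - mean) / std);
}
```

For the values 1, 2, 3, 4 with unit weights this yields a mean of 2.5 and a standard deviation of sqrt(5/3). Note that a constant feature gives a standard deviation of zero, so the division in `standardize` would need extra handling in that case.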

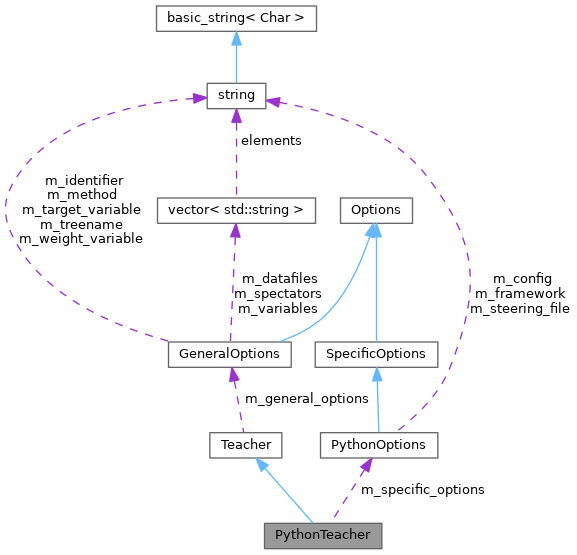

static std::string findFile(const std::string &path, bool silent=false)

Search for given file or directory in local or central release directory, and return absolute path if...

std::vector< std::string > m_variables

Vector of all variables (branch names) used in the training.

unsigned int m_nIterations

Number of iterations through the whole data.

std::string m_steering_file

Steering file provided by the user to override the functions in the framework.

std::string m_framework

framework to use e.g.

std::string m_config

Config string in json, which is passed to the get model function.

bool m_normalize

Normalize the inputs (shift the mean to zero and scale the standard deviation to 1).

double m_training_fraction

Fraction of data passed as training data, rest is passed as test data.

unsigned int m_mini_batch_size

Mini batch size, 0 passes the whole data in one call.

GeneralOptions m_general_options

GeneralOptions containing all shared options.
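
The options `m_training_fraction` and `m_mini_batch_size` together determine how many events are used for training, how many are held back for validation, and how many batches are passed per iteration. The sketch below gathers that arithmetic in one place. `BatchPlan` and `planBatches` are hypothetical names, and the initial scaling of the event count by 100 is an assumption, since the corresponding line is missing from the listing; the rounding-up step, the handling of a batch size of 0, the clamping, and the integer division follow the listing.

```cpp
#include <cstdint>
#include <stdexcept>

// Hypothetical container for the derived sizes (not in the listing).
struct BatchPlan {
  std::uint64_t nTraining;    // events used for training
  std::uint64_t nValidation;  // remaining events, used for validation
  std::uint64_t batchSize;    // effective mini batch size
  std::uint64_t nBatches;     // full batches per iteration
};

// Hypothetical helper: derive the split and batch layout from the options.
BatchPlan planBatches(std::uint64_t nEvents, double trainingFraction,
                      std::uint64_t miniBatchSize)
{
  if (trainingFraction <= 0.0 || trainingFraction > 1.0)
    throw std::invalid_argument("training fraction must be in (0.0, 1.0]");

  // Assumption: the event count is first scaled by 100 times the fraction...
  auto nTraining = static_cast<std::uint64_t>(nEvents * 100 * trainingFraction);
  // ...and then divided by 100, rounding up (as in the listing).
  nTraining = nTraining / 100 + (nTraining % 100 != 0);
  const std::uint64_t nValidation = nEvents - nTraining;

  // A mini batch size of 0 means "whole training sample in one call";
  // a size larger than the training sample is clamped to it.
  std::uint64_t batchSize = miniBatchSize;
  if (batchSize == 0 || batchSize > nTraining) batchSize = nTraining;

  // Integer division: a trailing partial batch is dropped.
  // Added guard (assumption): no batches when there are no training events.
  const std::uint64_t nBatches = (batchSize == 0) ? 0 : nTraining / batchSize;

  return {nTraining, nValidation, batchSize, nBatches};
}
```

With 1000 events, a training fraction of 0.5 and a mini batch size of 128, this gives 500 training events, 500 validation events and 3 batches per iteration, so 116 training events are not visited in a given iteration. Because the index vector is reshuffled between iterations in the listing, the skipped events differ from one iteration to the next.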