Belle II Software light-2411-aldebaran

Public Member Functions

| Kind | Signature |
| --- | --- |
| def | `__init__ (self, str listname, List[str] variables, str filename, Optional[str] hdf_table_name=None, int event_buffer_size=100, **writer_kwargs)` |
| def | `initialize (self)` |
| def | `initialize_feather_writer (self)` |
| def | `initialize_parquet_writer (self)` |
| def | `initialize_csv_writer (self)` |
| def | `initialize_hdf5_writer (self)` |
| def | `fill_event_buffer (self)` |
| def | `fill_buffer (self)` |
| def | `write_buffer (self, buf)` |
| def | `event (self)` |
| def | `terminate (self)` |

Protected Attributes

| Attribute | Description |
| --- | --- |
| `_filename` | Output filename. |
| `_listname` | Particle list name. |
| `_variables` | List of variables. |
| `_format` | Output format. |
| `_table_name` | Table name in the hdf5 file. |
| `_event_buffer_size` | Event buffer size. |
| `_buffer` | Event buffer. |
| `_event_buffer_counter` | Event buffer counter. |
| `_writer_kwargs` | writer kwargs |
| `_varnames` | variable names |
| `_var_objects` | variable objects for each variable |
| `_evtmeta` | Event metadata. |
| `_plist` | Pointer to the particle list. |
| `_dtypes` | The data type. |
| `_schema` | A list of tuples and py.DataTypes to define the pyarrow schema. |
| `_feather_writer` | a writer object to write data into a feather file |
| `_parquet_writer` | a writer object to write data into a parquet file |
| `_csv_writer` | a writer object to write data into a csv file |
| `_hdf5_writer` | The pytable file. |
| `_table` | The pytable. |

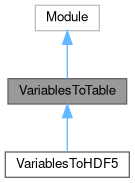

Base class to dump ntuples into a non-ROOT format of your choosing.

Definition at line 38 of file b2pandas_utils.py.

| def __init__ | ( | self, str listname, List[str] variables, str filename, Optional[str] hdf_table_name = None, int event_buffer_size = 100, **writer_kwargs | ) |

Constructor to initialize the internal state

Arguments:

listname(str): name of the particle list

variables(list(str)): list of variables to save for each particle

filename(str): name of the output file to be created. Needs to end with `.csv` for csv output, `.parquet` or `.pq` for parquet output, `.h5`, `.hdf` or `.hdf5` for hdf5 output and `.feather` or `.arrow` for feather output

hdf_table_name(str): name of the table in the hdf5 file. If not provided, it will be the same as the listname

event_buffer_size(int): number of events to buffer before writing to disk. Higher values will use more memory but write faster and result in smaller files

**writer_kwargs: additional keyword arguments to pass to the writer. For details, see the documentation of the writer in the Apache Arrow documentation. Use only if you know what you are doing!

Reimplemented in VariablesToHDF5.

Definition at line 43 of file b2pandas_utils.py.
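The extension rule described for `filename` can be sketched as a small lookup. This is only an illustration of the documented mapping, not the code in b2pandas_utils.py: the helper name `guess_format`, the format labels it returns, the lower-casing of the suffix and the `ValueError` for unknown extensions are all assumptions.

```python
from pathlib import Path

# Extension-to-format table taken from the constructor documentation above.
# The format labels on the right-hand side are illustrative assumptions.
_EXTENSION_TO_FORMAT = {
    ".csv": "csv",
    ".parquet": "parquet",
    ".pq": "parquet",
    ".h5": "hdf5",
    ".hdf": "hdf5",
    ".hdf5": "hdf5",
    ".feather": "feather",
    ".arrow": "feather",
}


def guess_format(filename: str) -> str:
    """Hypothetical helper: return the output format implied by the extension."""
    # Assumption: the comparison is case-insensitive.
    suffix = Path(filename).suffix.lower()
    try:
        return _EXTENSION_TO_FORMAT[suffix]
    except KeyError:
        # Assumption: an unknown extension is reported as a ValueError.
        raise ValueError(f"Unsupported file extension: {filename!r}") from None
```

With this sketch, `guess_format("ntuple.pq")` yields the parquet label and `guess_format("ntuple.root")` raises an error, which mirrors the statement that the filename needs to end with one of the listed extensions.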

| def event | ( | self | ) |

Event processing function. Executes the fill_buffer function and writes the data to the output file.

Definition at line 264 of file b2pandas_utils.py.

| def fill_buffer | ( | self | ) |

fill a buffer over multiple events and return it, when self.

Definition at line 231 of file b2pandas_utils.py.
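The idea of buffering several events before handing the data to a writer can be sketched in plain Python. The class `EventBuffer` and its method `fill` are hypothetical stand-ins; the sketch assumes the buffer is returned once `event_buffer_size` events have been collected and that nothing is returned before that, which may differ from the actual implementation.

```python
from typing import List, Optional


class EventBuffer:
    """Hypothetical stand-in for the buffering behaviour; not the basf2 code."""

    def __init__(self, event_buffer_size: int = 100) -> None:
        self.event_buffer_size = event_buffer_size
        self.rows: List[dict] = []
        self.event_counter = 0

    def fill(self, event_rows: List[dict]) -> Optional[List[dict]]:
        """Append one event's rows; return the whole buffer once it is full."""
        self.rows.extend(event_rows)
        self.event_counter += 1
        if self.event_counter < self.event_buffer_size:
            return None
        # Buffer is full: hand it over and start a fresh one.
        full, self.rows, self.event_counter = self.rows, [], 0
        return full


buffer = EventBuffer(event_buffer_size=2)
first = buffer.fill([{"px": 0.1}])                 # None, buffer not yet full
second = buffer.fill([{"px": 0.2}, {"px": 0.3}])   # rows of both events
```

Counting events rather than rows matches the description of `event_buffer_size` as a number of events; one event may contribute any number of particles.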

| def fill_event_buffer | ( | self | ) |

collect all variables for the particle in a numpy array

Definition at line 208 of file b2pandas_utils.py.

| def initialize | ( | self | ) |

Create the hdf5 file and list of variable objects to be used during event processing.

Definition at line 103 of file b2pandas_utils.py.

| def initialize_csv_writer | ( | self | ) |

Initialize the csv writer using pyarrow

Definition at line 178 of file b2pandas_utils.py.

| def initialize_feather_writer | ( | self | ) |

Initialize the feather writer using pyarrow

Definition at line 150 of file b2pandas_utils.py.

| def initialize_hdf5_writer | ( | self | ) |

Initialize the hdf5 writer using pytables

Definition at line 189 of file b2pandas_utils.py.

| def initialize_parquet_writer | ( | self | ) |

Initialize the parquet writer using pyarrow

Definition at line 165 of file b2pandas_utils.py.

| def terminate | ( | self | ) |

save and close the output

Definition at line 274 of file b2pandas_utils.py.
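How initialize, event and terminate fit together can be illustrated with a standard-library CSV writer. This is a sketch under stated assumptions, not the module's implementation: the class `CsvDumper` is hypothetical, it uses Python's `csv` module instead of pyarrow, its `event` method takes the rows as an argument (the documented `event` takes none), and it assumes that rows left in a partially filled buffer are written during terminate.

```python
import csv
import io


class CsvDumper:
    """Hypothetical module-like class; not the b2pandas_utils implementation."""

    def __init__(self, stream, columns, event_buffer_size=100):
        self._stream = stream
        self._columns = columns
        self._event_buffer_size = event_buffer_size
        self._buffer = []
        self._event_buffer_counter = 0

    def initialize(self):
        # Create the writer once and emit the header row.
        self._writer = csv.writer(self._stream, lineterminator="\n")
        self._writer.writerow(self._columns)

    def event(self, rows):
        # Buffer this event's rows; write only when enough events are collected.
        self._buffer.extend(rows)
        self._event_buffer_counter += 1
        if self._event_buffer_counter == self._event_buffer_size:
            self.write_buffer()

    def write_buffer(self):
        self._writer.writerows(self._buffer)
        self._buffer = []
        self._event_buffer_counter = 0

    def terminate(self):
        # Assumption: rows from a partially filled buffer are flushed here.
        # A file-backed writer would also close its output at this point.
        if self._buffer:
            self.write_buffer()


out = io.StringIO()
dumper = CsvDumper(out, ["px", "py"], event_buffer_size=2)
dumper.initialize()
for rows in ([(1, 2)], [(3, 4)], [(5, 6)]):
    dumper.event(rows)
written_before_terminate = out.getvalue()
dumper.terminate()
```

In this sketch the third event is still sitting in the buffer when the loop ends, so it only reaches the output once `terminate` runs.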

| def write_buffer | ( | self, buf | ) |

write the buffer to the output file

Definition at line 246 of file b2pandas_utils.py.

| _buffer | protected |

Event buffer.

Definition at line 97 of file b2pandas_utils.py.

| _csv_writer | protected |

a writer object to write data into a csv file

Definition at line 187 of file b2pandas_utils.py.

| _dtypes | protected |

The data type.

Definition at line 139 of file b2pandas_utils.py.

| _event_buffer_counter | protected |

Event buffer counter.

Definition at line 99 of file b2pandas_utils.py.

| _event_buffer_size | protected |

Event buffer size.

Definition at line 95 of file b2pandas_utils.py.

| _evtmeta | protected |

Event metadata.

Definition at line 120 of file b2pandas_utils.py.

| _feather_writer | protected |

a writer object to write data into a feather file

Definition at line 159 of file b2pandas_utils.py.

| _filename | protected |

Output filename.

Definition at line 70 of file b2pandas_utils.py.

| _format | protected |

Output format.

Definition at line 78 of file b2pandas_utils.py.

| _hdf5_writer | protected |

The pytable file.

Definition at line 194 of file b2pandas_utils.py.

| _listname | protected |

Particle list name.

Definition at line 72 of file b2pandas_utils.py.

| _parquet_writer | protected |

a writer object to write data into a parquet file

Definition at line 174 of file b2pandas_utils.py.

| _plist | protected |

Pointer to the particle list.

Definition at line 123 of file b2pandas_utils.py.

| _schema | protected |

A list of tuples and py.DataTypes to define the pyarrow schema.

Definition at line 155 of file b2pandas_utils.py.

| _table | protected |

The pytable.

Definition at line 204 of file b2pandas_utils.py.

| _table_name | protected |

Table name in the hdf5 file.

Definition at line 91 of file b2pandas_utils.py.

| _var_objects | protected |

variable objects for each variable

Definition at line 117 of file b2pandas_utils.py.

| _variables | protected |

List of variables.

Definition at line 74 of file b2pandas_utils.py.

| _varnames | protected |

variable names

Definition at line 110 of file b2pandas_utils.py.

| _writer_kwargs | protected |

writer kwargs

Definition at line 101 of file b2pandas_utils.py.