Set up input and output names and perform consistency checks.

    {
      const auto& inputNames = m_session->getOrtSession().GetInputNames();
      const auto& outputNames = m_session->getOrtSession().GetOutputNames();

      if (inputNames.size() != 1) {
        std::stringstream msg;
        msg << "Model has multiple inputs: ";
        for (auto name : inputNames)
          msg << "\"" << name << "\" ";
        msg << "- only single-input models are supported.";
        B2FATAL(msg.str());
      }
      …

      if (outputNames.size() == 1) {
        …
          B2INFO("Output name of the model is "
                 << outputNames[0]
                 << " - will use that despite the configured name being \""
                 …
        }
        …
        return;
      }

      …
      }
      auto outputFound = std::find(outputNames.begin(), outputNames.end(),
                                   …
      if (!outputFound) {
        std::stringstream msg;
        msg << "No output named \"" << m_outputName << "\" found. Instead got ";
        for (auto name : outputNames)
          msg << "\"" << name << "\" ";
        msg << "- either change your model to contain one named \"" << m_outputName
            << "\" or set `m_outputName` in the specific options to one of the available names.";
        B2FATAL(msg.str());
      }
    }
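
The output-name resolution visible in the listing above can be sketched as a standalone function. This is only an illustration under stated assumptions: `resolveOutputName` is a hypothetical helper that is not part of the documented class, `std::runtime_error` stands in for `B2FATAL`, and the lines missing from the listing are not reproduced, so the real method may apply further rules.

```cpp
#include <algorithm>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper (assumption, not the documented API): mirrors only the
// steps visible in the listing and throws where the original calls B2FATAL.
std::string resolveOutputName(const std::vector<std::string>& outputNames,
                              const std::string& configuredName)
{
  // Single-output model: use its only output, whatever name was configured.
  if (outputNames.size() == 1)
    return outputNames[0];

  // Otherwise the configured name has to match one of the model's outputs.
  const bool found = std::find(outputNames.begin(), outputNames.end(),
                               configuredName) != outputNames.end();
  if (!found) {
    std::stringstream msg;
    msg << "No output named \"" << configuredName << "\" found. Instead got ";
    for (const auto& name : outputNames)
      msg << "\"" << name << "\" ";
    throw std::runtime_error(msg.str());
  }
  return configuredName;
}
```

With these assumptions, a model whose only output is `probabilities` resolves to `probabilities` even if another name was configured, while a model with several outputs resolves to the configured name only if that name is among them.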

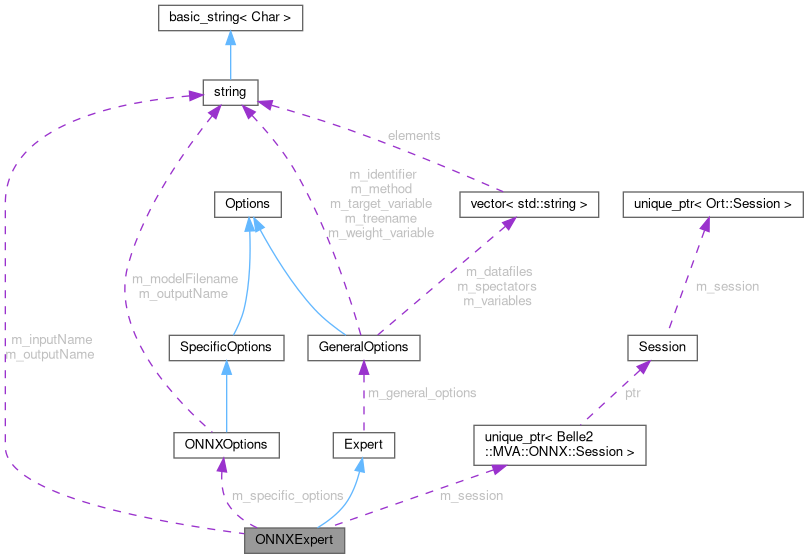

ONNXOptions m_specific_options

ONNX-specific options loaded from the weightfile.