| def | __init__ (self, *, backend_args=None) |
| def | can_submit (self, njobs=1) |
| def | bjobs (cls, output_fields=None, job_id="", username="", queue="") |
| def | bqueues (cls, output_fields=None, queues=None) |
| def | can_submit (self, *args, **kwargs) |
| def | submit (self, job, check_can_submit=True, jobs_per_check=100) |
| def | submit (self, job) |
| def | get_batch_submit_script_path (self, job) |
| def | get_submit_script_path (self, job) |

| def | _add_batch_directives (self, job, batch_file) |
| def | _create_cmd (self, script_path) |
| def | _submit_to_batch (cls, cmd) |
| def | _create_parent_job_result (cls, parent) |
| def | _create_job_result (cls, job, batch_output) |
| def | _make_submit_file (self, job, submit_file_path) |
| def | _ (self, job, check_can_submit=True, jobs_per_check=100) |
| def | _ (self, job, check_can_submit=True, jobs_per_check=100) |
| def | _ (self, jobs, check_can_submit=True, jobs_per_check=100) |
| def | _add_wrapper_script_setup (self, job, batch_file) |
| def | _add_wrapper_script_teardown (self, job, batch_file) |

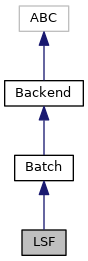

Backend for submitting calibration processes to an LSF batch system.

Definition at line 1583 of file backends.py.

◆ __init__()

| def __init__ (self, *, backend_args=None) |

Init method for Batch Backend. Does some basic default setup.

Reimplemented from Batch.

Definition at line 1604 of file backends.py.

◆ _() [1/3]

| def _ (self, job, check_can_submit=True, jobs_per_check=100) |

private, inherited

Submit method of the Batch backend for a `SubJob`. Should take a `SubJob` object, create the needed directories, create the batch script, and send it off with the batch submission command. It should apply the correct options (default and user-requested).

Should set a Result object as an attribute of the job.

Definition at line 1179 of file backends.py.

◆ _() [2/3]

| def _ (self, job, check_can_submit=True, jobs_per_check=100) |

private, inherited

Submit method of the Batch backend. Should take a job object, create the needed directories, create the batch script, and send it off with the batch submission command, applying the correct options (default and user-requested).

Should set a Result object as an attribute of the job.

Definition at line 1215 of file backends.py.

◆ _() [3/3]

| def _ (self, jobs, check_can_submit=True, jobs_per_check=100) |

private, inherited

Submit method of Batch Backend that takes a list of jobs instead of just one and submits each one.

Definition at line 1266 of file backends.py.
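The parameters `check_can_submit` and `jobs_per_check` suggest that a list of jobs is submitted in chunks, with a limit check before each chunk. The following is only a minimal sketch of that idea, not the code in backends.py: `submit_in_chunks`, `submit_one`, and the callback signatures are hypothetical names introduced for illustration.

```python
import time
from typing import Callable, Iterable, List


def submit_in_chunks(
    jobs: Iterable[str],
    submit_one: Callable[[str], None],
    can_submit: Callable[[int], bool],
    jobs_per_check: int = 100,
    sleep_seconds: float = 0.0,
) -> int:
    """Submit jobs chunk by chunk; return how many times we had to wait.

    All names here are illustrative assumptions, not the backend's API.
    """
    pending: List[str] = list(jobs)
    waits = 0
    while pending:
        chunk = pending[:jobs_per_check]
        # Block until the limit check says there is room for this chunk.
        while not can_submit(len(chunk)):
            waits += 1
            time.sleep(sleep_seconds)
        for job in chunk:
            submit_one(job)
        pending = pending[jobs_per_check:]
    return waits


# Usage with stand-in callbacks: the first limit check fails, later ones pass.
submitted: List[str] = []
check_results = iter([False, True, True])
n_waits = submit_in_chunks(
    ["job_a", "job_b", "job_c"],
    submit_one=submitted.append,
    can_submit=lambda n: next(check_results),
    jobs_per_check=2,
)
```

Checking once per chunk rather than once per job keeps the number of queries to the batch system low, at the cost of a coarser view of the remaining headroom.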

◆ _add_batch_directives()

| def _add_batch_directives (self, job, batch_file) |

private

Adds LSF BSUB directives for the job to a script.

Reimplemented from Batch.

Definition at line 1607 of file backends.py.
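LSF reads `#BSUB` comment lines embedded in a submitted script as if they were `bsub` command-line options. As a rough sketch of what writing such directives could look like (the helper name, its arguments, and the choice of options are assumptions, not taken from backends.py):

```python
import io


def add_bsub_directives(batch_file, queue, job_name, log_dir):
    """Write a few '#BSUB' directive lines to an open script file.

    -q (queue), -J (job name), -o (stdout file) and -e (stderr file) are
    standard bsub options; which ones the real backend sets is an assumption.
    """
    directives = [
        ("-q", queue),
        ("-J", job_name),
        ("-o", f"{log_dir}/stdout"),
        ("-e", f"{log_dir}/stderr"),
    ]
    for flag, value in directives:
        print(f"#BSUB {flag} {value}", file=batch_file)


# Usage: directives go after the shebang and before the first command.
script = io.StringIO()
print("#!/bin/bash", file=script)
add_bsub_directives(script, queue="s", job_name="calib_0", log_dir="/tmp/calib_0")
script_text = script.getvalue()
```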

◆ _add_setup()

| def _add_setup (job, batch_file) |

static, private, inherited

Adds setup lines to the shell script file.

Definition at line 777 of file backends.py.

◆ _add_wrapper_script_setup()

| def _add_wrapper_script_setup (self, job, batch_file) |

private, inherited

Adds lines to the submitted script that help with job monitoring/setup. Mostly here so that we can insert `trap` statements for Ctrl-C situations.

Definition at line 784 of file backends.py.

◆ _add_wrapper_script_teardown()

| def _add_wrapper_script_teardown (self, job, batch_file) |

private, inherited

Adds lines to the submitted script that help with job monitoring/teardown. Mostly here so that the exit code of the job command is written out to a file. This means that we can know whether the command was successful even if the backend server/monitoring database purges the data about our job. For example, if PBS removes job information too quickly, we may never know whether a job succeeded or failed without some kind of exit file.

Definition at line 809 of file backends.py.
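A minimal sketch of the exit-file idea described above: the wrapper script writes `$?` to a file right after the job command, and the monitoring side reads that file back later. The file name `__EXIT_CODE__` and both helper functions are hypothetical and chosen only for illustration.

```python
import io
import tempfile
from pathlib import Path
from typing import Optional

EXIT_CODE_FILE = "__EXIT_CODE__"  # hypothetical file name


def add_teardown(batch_file, exit_code_file: str = EXIT_CODE_FILE) -> None:
    """Append a line that records the previous command's exit status."""
    # In a POSIX shell, `$?` expands to the exit status of the last command.
    print(f"echo $? > {exit_code_file}", file=batch_file)


def read_exit_code(working_dir, exit_code_file: str = EXIT_CODE_FILE) -> Optional[int]:
    """Return the recorded exit code, or None if the job left no exit file."""
    path = Path(working_dir) / exit_code_file
    if not path.exists():
        return None
    return int(path.read_text().strip())


# Usage: build a tiny script body, then simulate reading the result back.
script = io.StringIO()
print("my_job_command --some-option", file=script)  # placeholder command
add_teardown(script)
teardown_line = script.getvalue().splitlines()[-1]

with tempfile.TemporaryDirectory() as tmp:
    missing = read_exit_code(tmp)             # no exit file written yet
    (Path(tmp) / EXIT_CODE_FILE).write_text("0\n")
    recorded = read_exit_code(tmp)            # exit file now present
```

Because the exit file lives in the job's working directory, it stays available for as long as that directory does, independent of how long the batch system keeps its own job records.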

◆ _create_cmd()

| def _create_cmd (self, script_path) |

private

◆ _create_job_result()

| def _create_job_result (cls, job, batch_output) |

private

◆ _create_parent_job_result()

| def _create_parent_job_result (cls, parent) |

private

We want to be able to call `ready()` on the top level `Job.result`. So this method needs to exist so that a `Job.result` object actually exists. It will be mostly empty and simply updates subjob statuses and allows the use of `ready()`.

Reimplemented from Backend.

Definition at line 1731 of file backends.py.

◆ _make_submit_file()

| def _make_submit_file (self, job, submit_file_path) |

private, inherited

Useful for the HTCondor backend, where a submit file is needed instead of batch directives pasted directly into the submission script. It should be overridden if needed.

Reimplemented in HTCondor.

Definition at line 1147 of file backends.py.

◆ _submit_to_batch()

| def _submit_to_batch (cls, cmd) |

private

Do the actual batch submission command and collect the output to find out the job id for later monitoring.

Reimplemented from Batch.

Definition at line 1631 of file backends.py.
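To illustrate "collect the output to find out the job id": `bsub` normally prints a confirmation such as `Job <12345> is submitted to queue <normal>.`, and the ID can be pulled out with a regular expression. This is a sketch under that assumption; `parse_job_id` is a hypothetical helper, and the sample string is made up rather than captured from a real submission.

```python
import re

# Matches the numeric ID inside "Job <...>" in bsub's confirmation message.
_JOB_ID_PATTERN = re.compile(r"Job <(\d+)>")


def parse_job_id(bsub_output: str) -> str:
    """Extract the job ID from bsub's stdout, raising if none is found."""
    match = _JOB_ID_PATTERN.search(bsub_output)
    if match is None:
        raise ValueError(f"No job ID found in bsub output: {bsub_output!r}")
    return match.group(1)


# Usage with a made-up confirmation message.
sample_output = "Job <12345> is submitted to queue <normal>.\n"
job_id = parse_job_id(sample_output)
```

The ID is kept as a string here, which matches the `job_id (str)` argument documented for `bjobs()`.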

◆ bjobs()

| def bjobs (cls, output_fields=None, job_id="", username="", queue="") |

Simplistic interface to the `bjobs` command. Lets you request information about all jobs matching the filters 'job_id', 'username', and 'queue'. The result is the JSON dictionary returned by the ``-json`` bjobs option.

Parameters:

output_fields (list[str]): A list of bjobs -o fields that you would like information about, e.g. ['stat', 'name', 'id']

job_id (str): String representation of the Job ID given by bsub during submission. If this argument is given, then the output of this function will contain only information about this job. If this argument is not given, then all jobs matching the other filters will be returned.

username (str): By default bjobs (and this function) returns information about only the current user's jobs. By giving a username you can access the job information of a specific user's jobs. By giving ``username='all'`` you will receive job information from all known user jobs matching the other filters.

queue (str): Set this argument to receive job information about jobs that are in the given queue and no other.

Returns:

dict: JSON dictionary of the form:

.. code-block:: python

    {
        "NJOBS": <njobs returned by command>,
        "JOBS": [
            {
                <output field: value>, ...
            }, ...
        ]
    }

Definition at line 1771 of file backends.py.
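The sketch below shows how the filters might map onto a `bjobs` command line and how the returned dictionary can be summarised. It is an illustration, not the implementation in backends.py: `build_bjobs_command` and `count_by_field` are hypothetical helpers, the upper-case `STAT` key and the sample data are assumptions, and only the `bjobs` options `-o`, `-q`, `-u`, and `-json` are relied on.

```python
from collections import Counter
from typing import Dict, List


def build_bjobs_command(output_fields: List[str], job_id: str = "",
                        username: str = "", queue: str = "") -> List[str]:
    """Assemble an argument list for bjobs from the documented filters."""
    cmd = ["bjobs", "-o", " ".join(output_fields)]
    if queue:
        cmd += ["-q", queue]
    if username:
        cmd += ["-u", username]
    cmd.append("-json")
    if job_id:
        cmd.append(job_id)
    return cmd


def count_by_field(bjobs_result: Dict, field: str = "STAT") -> Counter:
    """Count jobs in a bjobs-style result by the value of one output field."""
    return Counter(job.get(field, "UNKNOWN") for job in bjobs_result.get("JOBS", []))


# Usage with made-up data in the documented {"NJOBS": ..., "JOBS": [...]} shape.
command = build_bjobs_command(["stat", "name", "id"], username="all", queue="l")
fake_result = {
    "NJOBS": 3,
    "JOBS": [{"STAT": "RUN"}, {"STAT": "PEND"}, {"STAT": "RUN"}],
}
status_counts = count_by_field(fake_result)
```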

◆ bqueues()

| def bqueues (cls, output_fields=None, queues=None) |

Simplistic interface to the `bqueues` command. Lets you request information about all queues matching the filters. The result is the JSON dictionary returned by the ``-json`` bqueues option.

Parameters:

output_fields (list[str]): A list of bqueues -o fields that you would like information about, e.g. the default is ['queue_name', 'status', 'max', 'njobs', 'pend', 'run']

queues (list[str]): Set this argument to receive information about only the queues that are requested and no others. By default you will receive information about all queues.

Returns:

dict: JSON dictionary of the form:

.. code-block:: python

    {
        "COMMAND": "bqueues",
        "QUEUES": 46,
        "RECORDS": [
            {
                "QUEUE_NAME": "b2_beast",
                "STATUS": "Open:Active",
                "MAX": "200",
                "NJOBS": "0",
                "PEND": "0",
                "RUN": "0"
            }, ...
        ]
    }

Definition at line 1831 of file backends.py.
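Note that the numeric fields in the example record are strings, so they need converting before any comparison. A small sketch of consuming such a dictionary follows; `queues_with_room` is a hypothetical helper, and the second record in the sample data is invented for contrast.

```python
from typing import Dict, List


def queues_with_room(bqueues_result: Dict) -> List[str]:
    """Return names of open queues whose job count is below their maximum."""
    names = []
    for record in bqueues_result.get("RECORDS", []):
        if not record["STATUS"].startswith("Open"):
            continue
        try:
            limit = int(record["MAX"])
            njobs = int(record["NJOBS"])
        except ValueError:
            # Skip records whose limits are not plain integers.
            continue
        if njobs < limit:
            names.append(record["QUEUE_NAME"])
    return names


# Usage: the first record mirrors the documented example, the second is made up.
sample = {
    "COMMAND": "bqueues",
    "QUEUES": 2,
    "RECORDS": [
        {"QUEUE_NAME": "b2_beast", "STATUS": "Open:Active",
         "MAX": "200", "NJOBS": "0", "PEND": "0", "RUN": "0"},
        {"QUEUE_NAME": "example_closed", "STATUS": "Closed:Inact",
         "MAX": "100", "NJOBS": "0", "PEND": "0", "RUN": "0"},
    ],
}
available = queues_with_room(sample)
```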

◆ can_submit() [1/2]

| def can_submit (self, *args, **kwargs) |

inherited

Should be implemented in a derived class to check that submitting the next job(s) shouldn't fail.

This is initially meant to make sure that we don't go over the global limits of jobs (submitted + running).

Returns:

bool: If the job submission can continue based on the current situation.

Definition at line 1161 of file backends.py.

◆ can_submit() [2/2]

| def can_submit (self, njobs=1) |

Checks the global number of jobs in LSF right now (submitted or running) for this user.

Returns True if the number is lower than the limit, False if it is higher.

Parameters:

njobs (int): The number of jobs that we want to submit before checking again. Lets us check if we are sufficiently below the limit in order to (somewhat) safely submit. It is slightly dangerous to assume that it is safe to submit too many jobs, since there might be other processes also submitting jobs. So njobs really shouldn't be abused when you might be getting close to the limit, i.e. keep it <=250 and check again before submitting more.

Definition at line 1747 of file backends.py.
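One way to read the role of `njobs` is as headroom: the check passes only if the current job count plus the planned submissions stays within the limit. The exact comparison used in backends.py is not shown on this page, so the sketch below is an assumption, and `has_room_for` is a hypothetical name.

```python
def has_room_for(n_active: int, global_job_limit: int, njobs: int = 1) -> bool:
    """Return True if submitting `njobs` more jobs stays within the limit.

    `n_active` stands for the user's current submitted-or-running job count,
    e.g. the "NJOBS" value of a bjobs query. The `<=` comparison is an
    assumption made for this sketch.
    """
    return n_active + njobs <= global_job_limit


# Usage with made-up numbers.
room_for_chunk = has_room_for(n_active=900, global_job_limit=1000, njobs=100)
too_close = has_room_for(n_active=950, global_job_limit=1000, njobs=100)
```

Other processes may submit jobs between the check and the submission, which is why a small `njobs` and frequent re-checks are safer near the limit.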

◆ get_batch_submit_script_path()

| def get_batch_submit_script_path (self, job) |

inherited

Construct the Path object of the script file that we will submit using the batch command.

For most batch backends this is the same script as the bash script we submit. Some, however, require a separate submission file that describes the job. To support that, you can override this function in the backend class.

Reimplemented in HTCondor.

Definition at line 1299 of file backends.py.

◆ get_submit_script_path()

| def get_submit_script_path (self, job) |

inherited

Construct the Path object of the bash script file that we will submit. It will contain the actual job command, wrapper commands, setup commands, and any batch directives.

Definition at line 830 of file backends.py.

◆ submit() [1/2]

Base method for submitting collection jobs to the backend type. This MUST be

implemented for a correctly written backend class deriving from Backend().

Reimplemented in Local.

Definition at line 770 of file backends.py.

◆ submit() [2/2]

| def submit (self, job, check_can_submit=True, jobs_per_check=100) |

inherited

◆ global_job_limit

The active job limit.

This is 'global' because we want to avoid accidentally submitting too many jobs from all current and previous submission scripts.

Definition at line 1134 of file backends.py.

◆ sleep_between_submission_checks

| sleep_between_submission_checks |

inherited

Seconds we wait before checking if we can submit a list of jobs.

Only relevant once we hit the global limit of active jobs, which is usually large.

Definition at line 1137 of file backends.py.

The documentation for this class was generated from the following file: