|

Belle II Software

release-06-01-15


Public Member Functions | |

| def | __init__ (self, collector=None, input_files=None, pre_collector_path=None, database_chain=None, output_patterns=None, max_files_per_collector_job=None, max_collector_jobs=None, backend_args=None) |

| def | reset_database (self) |

| def | use_central_database (self, global_tag) |

| def | use_local_database (self, filename, directory="") |

| def | input_files (self) |

| def | input_files (self, value) |

| def | collector (self) |

| def | collector (self, collector) |

| def | is_valid (self) |

| def | max_collector_jobs (self) |

| def | max_collector_jobs (self, value) |

| def | max_files_per_collector_job (self) |

| def | max_files_per_collector_job (self, value) |

Static Public Member Functions | |

| def | uri_list_from_input_file (input_file) |

Public Attributes | |

| collector | |

| Collector module of this collection. | |

| input_files | |

| Internal input_files stored for this calibration. | |

| files_to_iovs | |

File -> IoV dictionary, should be {absolute_file_path: iov}, where iov is a caf.utils.IoV object. | |

| pre_collector_path | |

Since many collectors require some different setup, if you set this attribute to a basf2.Path it will be run before the collector and after the default RootInput module + HistoManager setup. More... | |

| output_patterns | |

Output patterns of files produced by collector which will be used to pass to the Algorithm.data_input function. More... | |

| splitter | |

| The SubjobSplitter to use when constructing collector subjobs from the overall Job object. More... | |

| max_files_per_collector_job | |

| max_collector_jobs | |

| backend_args | |

Dictionary passed to the collector Job object to configure how the caf.backends.Backend instance should treat the collector job when submitting. More... | |

| database_chain | |

| The database chain used for this Collection. More... | |

| job_script | |

| job_cmd | |

The Collector caf.backends.Job.cmd attribute. More... | |

Static Public Attributes | |

| int | default_max_collector_jobs = 1000 |

| The default maximum number of collector jobs to create. More... | |

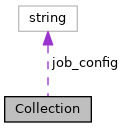

| string | job_config = "collector_job.json" |

| The name of the file containing the collector Job's dictionary. More... | |

Private Attributes | |

| _input_files | |

| _collector | |

| Internal storage of collector attribute. | |

Keyword Arguments:

collector (str, basf2.Module): The collector module or module name for this `Collection`.

input_files (list[str]): The input files to be used for only this `Collection`.

pre_collector_path (basf2.Path): The reconstruction `basf2.Path` to be run prior to the Collector module.

database_chain (list[CentralDatabase, LocalDatabase]): The database chain to be used initially for this `Collection`.

output_patterns (list[str]): Output patterns of files produced by the collector, which will be passed to the `Algorithm.data_input` function. Setting this here replaces the default completely.

max_files_per_collector_job (int): Maximum number of input files sent to each collector subjob for this `Collection`. Technically this sets the SubjobSplitter to be used. Not compatible with max_collector_jobs.

max_collector_jobs (int): Maximum number of collector subjobs for this `Collection`. Input files are split evenly between them. Technically this sets the SubjobSplitter to be used. Not compatible with max_files_per_collector_job.

backend_args (dict): The args for the backend submission of this `Collection`.
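The two splitting options above are mutually exclusive. As a rough illustration of how they differ, the following plain-Python sketch contrasts a cap on files per subjob with a cap on the number of subjobs. It is not the CAF's SubjobSplitter code, and both helper names and the file names are hypothetical.

```python
def split_by_max_files(files, max_files_per_job):
    """Chunk files so that no group holds more than max_files_per_job entries."""
    if max_files_per_job < 1:
        raise ValueError("max_files_per_job must be at least 1")
    return [files[i:i + max_files_per_job]
            for i in range(0, len(files), max_files_per_job)]


def split_by_max_jobs(files, max_jobs):
    """Spread files as evenly as possible over at most max_jobs groups."""
    if max_jobs < 1:
        raise ValueError("max_jobs must be at least 1")
    n_groups = min(max_jobs, len(files))
    groups = [[] for _ in range(n_groups)]
    # Deal the files out round-robin so group sizes differ by at most one.
    for index, name in enumerate(files):
        groups[index % n_groups].append(name)
    return groups


# Hypothetical input file names, for illustration only.
example_files = [f"input_{i}.root" for i in range(7)]
by_files = split_by_max_files(example_files, 3)  # group sizes 3, 3, 1
by_jobs = split_by_max_jobs(example_files, 3)    # group sizes 3, 2, 2
```

With a cap on files per subjob the number of subjobs grows with the input; with a cap on subjobs the group size grows instead.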

Definition at line 52 of file framework.py.

| def collector | ( | self | ) |

Definition at line 242 of file framework.py.

| def collector | ( | self, | |

| collector | |||

| ) |

Definition at line 248 of file framework.py.

| def reset_database | ( | self | ) |

Remove everything in the database_chain of this Collection, including the default central database tag automatically included from `basf2.conditions.default_globaltags`.

Definition at line 151 of file framework.py.

| def uri_list_from_input_file | ( | input_file | ) | static |

Parameters:

input_file (str): A local file/glob pattern or XROOTD URI

Returns:

list: A list of the URIs found from the initial string.
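A minimal sketch of the behaviour described above, assuming that local paths are glob-expanded into one URI per matching file and that anything with a non-file scheme (such as an XROOTD URI) is passed through unchanged. The function name `expand_input_to_uris` is hypothetical and this is not the framework.py implementation.

```python
import glob
from pathlib import Path
from urllib.parse import urlparse


def expand_input_to_uris(input_file):
    """Return a list of URIs for a local path/glob pattern or a remote URI."""
    parsed = urlparse(input_file)
    if parsed.scheme in ("", "file"):
        # Local input: expand wildcards so each matching file gets its own URI.
        pattern = parsed.path if parsed.scheme == "file" else input_file
        return [Path(match).resolve().as_uri()
                for match in sorted(glob.glob(pattern))]
    # Anything else (e.g. root://...) is passed through untouched.
    return [input_file]
```

A pattern that matches nothing yields an empty list, so callers may want to check for that before submitting jobs.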

Definition at line 204 of file framework.py.

| def use_central_database | ( | self, | |

| global_tag | |||

| ) |

Parameters:

global_tag (str): The central database global tag to use for this calibration.

Using this allows you to add a central database to the head of the global tag database chain for this collection. The default database chain is just the central one from `basf2.conditions.default_globaltags`. The input file global tag will always be overridden and never used unless explicitly set.

To turn off the central database completely or use a custom tag as the base, you should call `Calibration.reset_database` and start adding databases with `Calibration.use_local_database` and `Calibration.use_central_database`. Alternatively you could set an empty list as the input database_chain when adding the Collection to the Calibration.

NOTE!! Since ``release-04-00-00`` the behaviour of basf2 conditions databases has changed. All local database files MUST now be at the head of the 'chain', with all central database global tags in their own list which will be checked after all local database files have been checked.

So even if you ask for ``["global_tag1", "localdb/database.txt", "global_tag2"]`` to be the database chain, the real order in which basf2 will use them is ``["global_tag1", "global_tag2", "localdb/database.txt"]``, where the file is checked first.
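The reordering described in the note can be sketched in a few lines of plain Python. This is only an illustration of the grouping, not basf2 code. The helper name `effective_chain` is hypothetical, and telling local database files apart by a `.txt` suffix is an assumption made for this sketch.

```python
def effective_chain(requested):
    """Group global tags first and local database files last, keeping relative order."""
    # Assumption for this sketch only: local database entries are paths ending in ".txt".
    global_tags = [entry for entry in requested if not entry.endswith(".txt")]
    local_files = [entry for entry in requested if entry.endswith(".txt")]
    return global_tags + local_files


requested = ["global_tag1", "localdb/database.txt", "global_tag2"]
print(effective_chain(requested))
# ['global_tag1', 'global_tag2', 'localdb/database.txt']
```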

Definition at line 158 of file framework.py.

| def use_local_database | ( | self, | |

| filename, | |||

directory = "" |

|||

| ) |

Parameters:

filename (str): The path to the database.txt of the local database.

directory (str): The path to the payloads directory for this local database.

Append a local database to the chain for this collection. You can call this function multiple times and each database will be added to the chain IN ORDER. The databases are applied to this collection ONLY.

NOTE!! Since ``release-04-00-00`` the behaviour of basf2 conditions databases has changed. All local database files MUST now be at the head of the 'chain', with all central database global tags in their own list which will be checked after all local database files have been checked.

So even if you ask for ``["global_tag1", "localdb/database.txt", "global_tag2"]`` to be the database chain, the real order in which basf2 will use them is ``["global_tag1", "global_tag2", "localdb/database.txt"]``, where the file is checked first.

Definition at line 183 of file framework.py.

| backend_args |

Dictionary passed to the collector Job object to configure how the caf.backends.Backend instance should treat the collector job when submitting.

The choice of arguments here depends on which backend you plan on using.

Definition at line 127 of file framework.py.

| database_chain |

The database chain used for this Collection.

NOT necessarily the same database chain used for the algorithm step! Since the algorithm will merge the collected data into one process it has to use a single DB chain set from the overall Calibration.

Definition at line 135 of file framework.py.

| int default_max_collector_jobs = 1000 | static |

The default maximum number of collector jobs to create.

Only used if neither max_collector_jobs nor max_files_per_collector_job is set.

Definition at line 70 of file framework.py.

| files_to_iovs |

File -> IoV dictionary, should be:

{absolute_file_path: iov}

where iov is a caf.utils.IoV object.

Will be filled during CAF.run() if empty. To improve performance you can fill this yourself before calling CAF.run().
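As a sketch of what pre-filling this dictionary could look like, the example below builds a mapping from absolute file paths to IoV objects. To stay runnable without basf2 it uses a namedtuple stand-in for caf.utils.IoV. The stand-in's field names, the helper `build_files_to_iovs`, and the file names are assumptions made for illustration.

```python
from collections import namedtuple
from pathlib import Path

# Stand-in for caf.utils.IoV so the sketch runs without basf2.
# The field names here are an assumption.
IoV = namedtuple("IoV", ["exp_low", "run_low", "exp_high", "run_high"])


def build_files_to_iovs(file_runs):
    """Map each file's absolute path to an IoV covering a single (experiment, run)."""
    return {
        str(Path(name).resolve()): IoV(exp, run, exp, run)
        for name, (exp, run) in file_runs.items()
    }


# Hypothetical file names and run numbers.
files_to_iovs = build_files_to_iovs({
    "data/run1.root": (12, 1),
    "data/run2.root": (12, 2),
})
```

In a real script the resulting dictionary would be assigned to the Collection's files_to_iovs attribute before calling CAF.run().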

Definition at line 97 of file framework.py.

| job_cmd |

The Collector caf.backends.Job.cmd attribute.

Probably using the job_script to run basf2.

Definition at line 149 of file framework.py.

| string job_config = "collector_job.json" | static |

The name of the file containing the collector Job's dictionary.

Useful for recovery of the job configuration of the ones that ran previously.

Definition at line 73 of file framework.py.

| output_patterns |

Output patterns of files produced by collector which will be used to pass to the Algorithm.data_input function.

You can set these to anything understood by glob.glob. If you do, you should also provide an Algorithm.data_input function that handles the different types of files and calls CalibrationAlgorithm.setInputFiles() with the correct ones.
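To show how glob-style patterns select files, here is a small self-contained sketch that matches a list of patterns against a directory. The helper `collect_outputs` and all file names are hypothetical, and the way the CAF itself applies the patterns may differ.

```python
import glob
import os
import tempfile


def collect_outputs(job_dir, output_patterns):
    """Return sorted file paths in job_dir matching any of the glob patterns."""
    matches = set()
    for pattern in output_patterns:
        matches.update(glob.glob(os.path.join(job_dir, pattern)))
    return sorted(matches)


with tempfile.TemporaryDirectory() as job_dir:
    # Create some empty example files in a throwaway directory.
    for name in ("CollectorOutput.root", "extra_1.root", "notes.log"):
        open(os.path.join(job_dir, name), "w").close()
    paths = collect_outputs(job_dir, ["Collector*.root", "extra_*.root"])
    found = [os.path.basename(p) for p in paths]
```

Here `found` holds the two `.root` files and skips `notes.log`, since no pattern matches it.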

Definition at line 109 of file framework.py.

| pre_collector_path |

Since many collectors require some different setup, if you set this attribute to a basf2.Path it will be run before the collector and after the default RootInput module + HistoManager setup.

If this path contains RootInput then its params are used in the RootInput module instead, except for the input_files parameter, which is set to whichever files are passed to the collector subjob.

Definition at line 102 of file framework.py.

| splitter |

The SubjobSplitter to use when constructing collector subjobs from the overall Job object.

If this is not set then your collector will run as one big job with all input files included.

Definition at line 115 of file framework.py.