Belle II Software development


Public Member Functions | |

| __init__ (self, str listname, List[str] variables, str filename, Optional[str] hdf_table_name=None, int event_buffer_size=100, **writer_kwargs) | |

| initialize (self) | |

| buffer (self) | |

| event_buffer (self) | |

| clear_buffer (self) | |

| append_buffer (self) | |

| initialize_feather_writer (self) | |

| initialize_parquet_writer (self) | |

| initialize_csv_writer (self) | |

| initialize_hdf5_writer (self) | |

| fill_event_buffer (self) | |

| buffer_full (self) | |

| write_buffer (self) | |

| event (self) | |

| terminate (self) | |

Protected Attributes | |

| _filename = filename | |

| Output filename. | |

| _listname = listname | |

| Particle list name. | |

| _variables = list(set(variables)) | |

| List of variables. | |

| str | _format = "csv" |

| Output format. | |

| tuple | _table_name |

| Table name in the hdf5 file. | |

| _event_buffer_size = event_buffer_size | |

| Event buffer size. | |

| int | _event_buffer_counter = 0 |

| Event buffer counter. | |

| _writer_kwargs = writer_kwargs | |

| writer kwargs | |

| list | _varnames |

| variable names | |

| _std_varnames = variables.std_vector(*self._varnames) | |

| std.vector of variable names | |

| _evtmeta = ROOT.Belle2.PyStoreObj("EventMetaData") | |

| Event metadata. | |

| _plist = ROOT.Belle2.PyStoreObj(self._listname) | |

| Pointer to the particle list. | |

| _dtypes = dtypes | |

| The data type. | |

| _buffer = np.empty(self._event_buffer_size * 10, dtype=self._dtypes) | |

| event variables buffer (will be automatically grown if necessary) | |

| int | _buffer_index = 0 |

| current start index in the event variables buffer | |

| list | _schema |

| A list of (name, pyarrow data type) tuples that defines the pyarrow schema. | |

| _feather_writer | |

| a writer object to write data into a feather file | |

| _parquet_writer | |

| a writer object to write data into a parquet file | |

| _csv_writer = CSVWriter(self._filename, schema=pa.schema(self._schema), **self._writer_kwargs) | |

| a writer object to write data into a csv file | |

| _hdf5_writer | |

| The pytable file. | |

| _table | |

| The pytable. | |

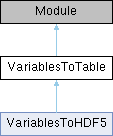

Base class to dump ntuples into a non-ROOT format of your choosing.

Arguments:

listname(str): name of the particle list

variables(list[str]): list of variables to save for each particle

filename(str): name of the output file to be created. Needs to end with ``.csv`` for csv output, ``.parquet`` or ``.pq`` for parquet output, ``.h5``, ``.hdf`` or ``.hdf5`` for hdf5 output and ``.feather`` or ``.arrow`` for feather output.

hdf_table_name(str): name of the table in the hdf5 file. If not provided, it will be the same as the listname. Defaults to None.

event_buffer_size(int): number of events to buffer before writing to disk. Higher values will use more memory but result in smaller files. For some formats, like parquet, this also sets the row group size. Defaults to 100.

**writer_kwargs: additional keyword arguments to pass to the writer. For details, see the documentation of the respective writer in the Apache Arrow documentation. For HDF5, these are passed to ``tables.File.create_table``. Only use these if you know what you are doing!

Definition at line 38 of file b2pandas_utils.py.
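The filename rule and the table-name default described above can be sketched in plain Python. This is only an illustration under stated assumptions: `guess_format` and `resolve_table_name` are hypothetical helper names that do not appear in b2pandas_utils.py, and the actual constructor may implement the check differently.

```python
from pathlib import Path
from typing import Optional

# Extension-to-format table taken from the argument description above.
EXTENSION_TO_FORMAT = {
    ".csv": "csv",
    ".parquet": "parquet",
    ".pq": "parquet",
    ".h5": "hdf5",
    ".hdf": "hdf5",
    ".hdf5": "hdf5",
    ".feather": "feather",
    ".arrow": "feather",
}


def guess_format(filename: str) -> str:
    """Hypothetical helper: map an output filename to its format string."""
    suffix = Path(filename).suffix
    if suffix not in EXTENSION_TO_FORMAT:
        raise ValueError(f"Unsupported file extension: {suffix!r}")
    return EXTENSION_TO_FORMAT[suffix]


def resolve_table_name(listname: str, hdf_table_name: Optional[str] = None) -> str:
    """Hypothetical helper: fall back to the list name if no table name is given."""
    return hdf_table_name if hdf_table_name is not None else listname
```

With these helpers, `guess_format("ntuple.pq")` yields `"parquet"`, and `resolve_table_name("pi+:all")` yields the list name itself.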

| __init__ | ( | self, | |

| str | listname, | ||

| List[str] | variables, | ||

| str | filename, | ||

| Optional[str] | hdf_table_name = None, | ||

| int | event_buffer_size = 100, | ||

| ** | writer_kwargs ) |

Constructor to initialize the internal state

Definition at line 59 of file b2pandas_utils.py.

| append_buffer | ( | self | ) |

"Append" a new event to the buffer by moving the buffer index forward by the particle list size. Automatically replaces the buffer with a larger one if necessary.

Definition at line 180 of file b2pandas_utils.py.
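The index-based "append" with automatic growth can be illustrated with a small stand-alone class. This is a sketch, not the code from b2pandas_utils.py: it uses a plain Python list instead of the numpy structured array, the class name `GrowingBuffer` and its method names are hypothetical, and doubling the storage is an assumed growth strategy (the documentation only says the buffer is replaced by a larger one).

```python
class GrowingBuffer:
    """Toy stand-in for the event variables buffer (a list, not a numpy array)."""

    def __init__(self, capacity: int):
        self._buffer = [None] * capacity  # preallocated storage
        self._buffer_index = 0            # start index of the current event
        self._event_buffer_counter = 0    # number of events appended so far

    def event_slice(self, n_particles: int) -> slice:
        """Slice occupied by the current event; grows the storage if needed."""
        end = self._buffer_index + n_particles
        while end > len(self._buffer):
            # Assumed strategy: double the storage, keeping old rows in front.
            self._buffer.extend([None] * max(1, len(self._buffer)))
        return slice(self._buffer_index, end)

    def append(self, rows: list) -> None:
        """Store one event's rows and move the index forward by their count."""
        self._buffer[self.event_slice(len(rows))] = rows
        self._buffer_index += len(rows)
        self._event_buffer_counter += 1

    @property
    def filled(self) -> list:
        """All rows written since the last clear."""
        return self._buffer[: self._buffer_index]

    def clear(self) -> None:
        """Reset the counter and index; the storage itself is reused."""
        self._buffer_index = 0
        self._event_buffer_counter = 0
```

Because only the index is reset in `clear`, the storage allocated for a large event stays available for later events, which avoids repeated reallocation.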

| buffer | ( | self | ) |

The buffer slice across multiple entries

Definition at line 160 of file b2pandas_utils.py.

| buffer_full | ( | self | ) |

check if the buffer is full

Definition at line 277 of file b2pandas_utils.py.

| clear_buffer | ( | self | ) |

Reset the buffer event counter and index

Definition at line 173 of file b2pandas_utils.py.

| event | ( | self | ) |

Event processing function: executes fill_event_buffer and writes the data to the output file in chunks of event_buffer_size events.

Definition at line 302 of file b2pandas_utils.py.
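The per-event flow (fill, count, flush when full, flush the remainder at the end) can be sketched as follows. This is a simplified model under stated assumptions: `ChunkedWriter` is a hypothetical class, a list stands in for the file writer, and the final flush in `terminate` is assumed rather than documented here.

```python
class ChunkedWriter:
    """Toy model of the event/write_buffer/terminate cycle."""

    def __init__(self, event_buffer_size: int, sink: list):
        self._event_buffer_size = event_buffer_size
        self._event_buffer_counter = 0
        self._rows = []    # rows collected since the last write
        self._sink = sink  # stand-in for the output file: one entry per chunk

    def buffer_full(self) -> bool:
        return self._event_buffer_counter >= self._event_buffer_size

    def write_buffer(self) -> None:
        self._sink.append(list(self._rows))

    def clear_buffer(self) -> None:
        self._rows.clear()
        self._event_buffer_counter = 0

    def event(self, particles: list) -> None:
        """Buffer one event and flush once event_buffer_size events are stored."""
        self._rows.extend(particles)
        self._event_buffer_counter += 1
        if self.buffer_full():
            self.write_buffer()
            self.clear_buffer()

    def terminate(self) -> None:
        """Assumed behaviour: write whatever is still buffered before closing."""
        if self._rows:
            self.write_buffer()
            self.clear_buffer()
```

Note that the chunk size is counted in events, not rows, so chunks can differ in length when the particle multiplicity varies from event to event.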

| event_buffer | ( | self | ) |

The buffer slice for the current event

Definition at line 167 of file b2pandas_utils.py.

| fill_event_buffer | ( | self | ) |

Assign values for all variables for all particles in the particle list to the current event buffer

Definition at line 256 of file b2pandas_utils.py.

| initialize | ( | self | ) |

Setup variable lists, pointers, buffers and file writers

Definition at line 103 of file b2pandas_utils.py.

| initialize_csv_writer | ( | self | ) |

Initialize the csv writer using pyarrow

Definition at line 226 of file b2pandas_utils.py.

| initialize_feather_writer | ( | self | ) |

Initialize the feather writer using pyarrow

Definition at line 198 of file b2pandas_utils.py.

| initialize_hdf5_writer | ( | self | ) |

Initialize the hdf5 writer using pytables

Definition at line 237 of file b2pandas_utils.py.

| initialize_parquet_writer | ( | self | ) |

Initialize the parquet writer using pyarrow

Definition at line 213 of file b2pandas_utils.py.

| terminate | ( | self | ) |

save and close the output

Definition at line 317 of file b2pandas_utils.py.

| write_buffer | ( | self | ) |

write the buffer to the output file

Definition at line 283 of file b2pandas_utils.py.

| _buffer | protected |

event variables buffer (will be automatically grown if necessary)

Definition at line 145 of file b2pandas_utils.py.

| _buffer_index | protected |

current start index in the event variables buffer

Definition at line 148 of file b2pandas_utils.py.

| _csv_writer | protected |

a writer object to write data into a csv file

Definition at line 235 of file b2pandas_utils.py.

| _dtypes | protected |

The data type.

Definition at line 142 of file b2pandas_utils.py.

| _event_buffer_counter | protected |

Event buffer counter.

Definition at line 99 of file b2pandas_utils.py.

| _event_buffer_size | protected |

Event buffer size.

Definition at line 97 of file b2pandas_utils.py.

| _evtmeta | protected |

Event metadata.

Definition at line 122 of file b2pandas_utils.py.

| _feather_writer | protected |

a writer object to write data into a feather file

Definition at line 207 of file b2pandas_utils.py.

| _filename | protected |

Output filename.

Definition at line 71 of file b2pandas_utils.py.

| _format | protected |

Output format.

Definition at line 80 of file b2pandas_utils.py.

| _hdf5_writer | protected |

The pytable file.

Definition at line 242 of file b2pandas_utils.py.

| _listname | protected |

Particle list name.

Definition at line 73 of file b2pandas_utils.py.

| _parquet_writer | protected |

a writer object to write data into a parquet file

Definition at line 222 of file b2pandas_utils.py.

| _plist | protected |

Pointer to the particle list.

Definition at line 126 of file b2pandas_utils.py.

| _schema | protected |

A list of (name, pyarrow data type) tuples that defines the pyarrow schema.

Definition at line 203 of file b2pandas_utils.py.

| _std_varnames | protected |

std.vector of variable names

Definition at line 119 of file b2pandas_utils.py.

| _table | protected |

The pytable.

Definition at line 252 of file b2pandas_utils.py.

| _table_name | protected |

Table name in the hdf5 file.

Definition at line 93 of file b2pandas_utils.py.

| _variables | protected |

List of variables.

Definition at line 75 of file b2pandas_utils.py.

| _varnames | protected |

variable names

Definition at line 111 of file b2pandas_utils.py.

| _writer_kwargs | protected |

writer kwargs

Definition at line 101 of file b2pandas_utils.py.