
Belle II Software development

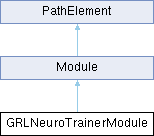

The trainer module for the neural networks of the CDC trigger. More...

#include <GRLNeuroTrainerModule.h>

Public Types | |

| enum | EModulePropFlags { c_Input = 1 , c_Output = 2 , c_ParallelProcessingCertified = 4 , c_HistogramManager = 8 , c_InternalSerializer = 16 , c_TerminateInAllProcesses = 32 , c_DontCollectStatistics = 64 } |

| Each module can be tagged with property flags, which indicate certain features of the module. More... | |

| typedef ModuleCondition::EAfterConditionPath | EAfterConditionPath |

| Forward the EAfterConditionPath definition from the ModuleCondition. | |

Public Member Functions | |

| GRLNeuroTrainerModule () | |

| Constructor, for setting module description and parameters. | |

| virtual | ~GRLNeuroTrainerModule () |

| Destructor. | |

| virtual void | initialize () override |

| Initialize the module. | |

| virtual void | event () override |

| Called once for each event. | |

| virtual void | terminate () override |

| Do the training for all sectors. | |

| void | updateRelevantID (unsigned isector) |

| Calculate and set the relevant ID range for the given sector, based on the hit counters of the track segments. | |

| void | train (unsigned isector) |

| Train a single MLP. | |

| void | saveTraindata (const std::string &filename, const std::string &arrayname="trainSets") |

| Save all training samples. | |

| bool | loadTraindata (const std::string &filename, const std::string &arrayname="trainSets") |

| Load saved training samples. | |

| virtual std::vector< std::string > | getFileNames (bool outputFiles) |

| Return a list of output filenames for this module. | |

| virtual void | beginRun () |

| Called when entering a new run. | |

| virtual void | endRun () |

| This method is called if the current run ends. | |

| const std::string & | getName () const |

| Returns the name of the module. | |

| const std::string & | getType () const |

| Returns the type of the module (i.e. | |

| const std::string & | getPackage () const |

| Returns the package this module is in. | |

| const std::string & | getDescription () const |

| Returns the description of the module. | |

| void | setName (const std::string &name) |

| Set the name of the module. | |

| void | setPropertyFlags (unsigned int propertyFlags) |

| Sets the flags for the module properties. | |

| LogConfig & | getLogConfig () |

| Returns the log system configuration. | |

| void | setLogConfig (const LogConfig &logConfig) |

| Set the log system configuration. | |

| void | setLogLevel (int logLevel) |

| Configure the log level. | |

| void | setDebugLevel (int debugLevel) |

| Configure the debug messaging level. | |

| void | setAbortLevel (int abortLevel) |

| Configure the abort log level. | |

| void | setLogInfo (int logLevel, unsigned int logInfo) |

| Configure the printed log information for the given level. | |

| void | if_value (const std::string &expression, const std::shared_ptr< Path > &path, EAfterConditionPath afterConditionPath=EAfterConditionPath::c_End) |

| Add a condition to the module. | |

| void | if_false (const std::shared_ptr< Path > &path, EAfterConditionPath afterConditionPath=EAfterConditionPath::c_End) |

| A simplified version to add a condition to the module. | |

| void | if_true (const std::shared_ptr< Path > &path, EAfterConditionPath afterConditionPath=EAfterConditionPath::c_End) |

| A simplified version to set the condition of the module. | |

| bool | hasCondition () const |

| Returns true if at least one condition was set for the module. | |

| const ModuleCondition * | getCondition () const |

| Return a pointer to the first condition (or nullptr, if none was set) | |

| const std::vector< ModuleCondition > & | getAllConditions () const |

| Return all set conditions for this module. | |

| bool | evalCondition () const |

| If at least one condition was set, the conditions are evaluated and true is returned if at least one of them evaluates to true. | |

| std::shared_ptr< Path > | getConditionPath () const |

| Returns the path of the last true condition (if there is at least one, else returns a null pointer). | |

| Module::EAfterConditionPath | getAfterConditionPath () const |

| What to do after the conditional path is finished. | |

| std::vector< std::shared_ptr< Path > > | getAllConditionPaths () const |

| Return all condition paths currently set (no matter if the condition is true or not). | |

| bool | hasProperties (unsigned int propertyFlags) const |

| Returns true if all specified property flags are available in this module. | |

| bool | hasUnsetForcedParams () const |

| Returns true and prints error message if the module has unset parameters which the user has to set in the steering file. | |

| const ModuleParamList & | getParamList () const |

| Return module param list. | |

| template<typename T> | |

| ModuleParam< T > & | getParam (const std::string &name) const |

| Returns a reference to a parameter. | |

| bool | hasReturnValue () const |

| Return true if this module has a valid return value set. | |

| int | getReturnValue () const |

| Return the return value set by this module. | |

| std::shared_ptr< PathElement > | clone () const override |

| Create an independent copy of this module. | |

| std::shared_ptr< boost::python::list > | getParamInfoListPython () const |

| Returns a python list of all parameters. | |

Static Public Member Functions | |

| static void | exposePythonAPI () |

| Exposes methods of the Module class to Python. | |

Protected Member Functions | |

| virtual void | def_initialize () |

| Wrappers to make the methods without "def_" prefix callable from Python. | |

| virtual void | def_beginRun () |

| Wrapper method for the virtual function beginRun() that has the implementation to be used in a call from Python. | |

| virtual void | def_event () |

| Wrapper method for the virtual function event() that has the implementation to be used in a call from Python. | |

| virtual void | def_endRun () |

| Wrapper method that receives the notification that the current run ends as a call from the Python side. | |

| virtual void | def_terminate () |

| Wrapper method for the virtual function terminate() that has the implementation to be used in a call from Python. | |

| void | setDescription (const std::string &description) |

| Sets the description of the module. | |

| void | setType (const std::string &type) |

| Set the module type. | |

| template<typename T> | |

| void | addParam (const std::string &name, T &paramVariable, const std::string &description, const T &defaultValue) |

| Adds a new parameter to the module. | |

| template<typename T> | |

| void | addParam (const std::string &name, T &paramVariable, const std::string &description) |

| Adds a new enforced parameter to the module. | |

| void | setReturnValue (int value) |

| Sets the return value for this module as integer. | |

| void | setReturnValue (bool value) |

| Sets the return value for this module as bool. | |

| void | setParamList (const ModuleParamList &params) |

| Replace existing parameter list. | |

Protected Attributes | |

| std::string | m_TrgECLClusterName |

| Name of the StoreArray containing the ECL clusters. | |

| std::string | m_2DfinderCollectionName |

| Name of the StoreArray containing the input 2D tracks. | |

| std::string | m_GRLCollectionName |

| Name of the StoreObj containing the input GRL. | |

| std::string | m_filename |

| Name of file where network weights etc. | |

| std::string | m_trainFilename |

| Name of file where training samples are stored. | |

| std::string | m_logFilename |

| Name of file where training log is stored. | |

| std::string | m_arrayname |

| Name of the TObjArray holding the networks. | |

| std::string | m_trainArrayname |

| Name of the TObjArray holding the training samples. | |

| bool | m_saveDebug |

| If true, save training curve and parameter distribution of training data. | |

| bool | m_load |

| Switch to load saved parameters from a previous run. | |

| GRLNeuro::Parameters | m_parameters |

| Parameters for the NeuroTrigger. | |

| double | m_nTrainMin |

| Minimal number of training samples. | |

| double | m_nTrainMax |

| Maximal number of training samples. | |

| bool | m_multiplyNTrain |

| Switch to multiply number of samples with number of weights. | |

| int | m_nValid |

| Number of validation samples. | |

| int | m_nTest |

| Number of test samples. | |

| double | m_wMax |

| Limit for weights. | |

| int | m_nThreads |

| Number of threads for training. | |

| int | m_checkInterval |

| Training is stopped if validation error is higher than checkInterval epochs ago, i.e. | |

| int | m_maxEpochs |

| Maximal number of training epochs. | |

| int | m_repeatTrain |

| Number of training runs with different random start weights. | |

| GRLNeuro | m_GRLNeuro |

| Instance of the NeuroTrigger. | |

| std::vector< GRLMLPData > | m_trainSets |

| Sets of training data for all sectors. | |

| std::vector< int > | TCThetaID |

| std::vector< float > | TCPhiLab |

| std::vector< float > | TCcotThetaLab |

| std::vector< float > | TCPhiCOM |

| std::vector< float > | TCThetaCOM |

| std::vector< float > | TC1GeV |

| double | radtodeg = 0 |

| Conversion factor from radians to degrees. | |

| int | n_cdc_sector = 0 |

| Number of CDC sectors. | |

| int | n_ecl_sector = 0 |

| Number of ECL sectors. | |

| int | n_sector = 0 |

| Total number of sectors. | |

| std::vector< TH1D * > | h_cdc2d_phi_sig |

| Histograms for monitoring. | |

| std::vector< TH1D * > | h_cdc2d_pt_sig |

| std::vector< TH1D * > | h_selE_sig |

| std::vector< TH1D * > | h_selPhi_sig |

| std::vector< TH1D * > | h_selTheta_sig |

| std::vector< TH1D * > | h_result_sig |

| std::vector< TH1D * > | h_cdc2d_phi_bg |

| std::vector< TH1D * > | h_cdc2d_pt_bg |

| std::vector< TH1D * > | h_selE_bg |

| std::vector< TH1D * > | h_selPhi_bg |

| std::vector< TH1D * > | h_selTheta_bg |

| std::vector< TH1D * > | h_result_bg |

| std::vector< TH1D * > | h_ncdc_sig |

| std::vector< TH1D * > | h_ncdcf_sig |

| std::vector< TH1D * > | h_ncdcs_sig |

| std::vector< TH1D * > | h_ncdci_sig |

| std::vector< TH1D * > | h_necl_sig |

| std::vector< TH1D * > | h_ncdc_bg |

| std::vector< TH1D * > | h_ncdcf_bg |

| std::vector< TH1D * > | h_ncdcs_bg |

| std::vector< TH1D * > | h_ncdci_bg |

| std::vector< TH1D * > | h_necl_bg |

| std::vector< int > | scale_bg |

| BG scale factor for training. | |

Private Member Functions | |

| std::list< ModulePtr > | getModules () const override |

| no submodules, return empty list | |

| std::string | getPathString () const override |

| return the module name. | |

| void | setParamPython (const std::string &name, const boost::python::object &pyObj) |

| Implements a method for setting boost::python objects. | |

| void | setParamPythonDict (const boost::python::dict &dictionary) |

| Implements a method for reading the parameter values from a boost::python dictionary. | |

Private Attributes | |

| std::string | m_name |

| The name of the module, saved as a string (user-modifiable) | |

| std::string | m_type |

| The type of the module, saved as a string. | |

| std::string | m_package |

| Package this module is found in (may be empty). | |

| std::string | m_description |

| The description of the module. | |

| unsigned int | m_propertyFlags |

| The properties of the module as bitwise or (with |) of EModulePropFlags. | |

| LogConfig | m_logConfig |

| The log system configuration of the module. | |

| ModuleParamList | m_moduleParamList |

| List storing and managing all parameters of the module. | |

| bool | m_hasReturnValue |

| True, if the return value is set. | |

| int | m_returnValue |

| The return value. | |

| std::vector< ModuleCondition > | m_conditions |

| Module condition, only non-null if set. | |

The trainer module for the neural networks of the CDC trigger.

Prepares training data for several neural networks and trains them using the Fast Artificial Neural Network library (FANN). For documentation of FANN, see http://leenissen.dk/fann/wp/.

Definition at line 29 of file GRLNeuroTrainerModule.h.

|

inherited |

Forward the EAfterConditionPath definition from the ModuleCondition.

|

inherited |

Each module can be tagged with property flags, which indicate certain features of the module.

| Enumerator | |

|---|---|

| c_Input | This module is an input module (reads data). |

| c_Output | This module is an output module (writes data). |

| c_ParallelProcessingCertified | This module can be run safely in parallel processing mode (all I/O must be done through the data store; in particular, the module must not write any files). |

| c_HistogramManager | This module is used to manage histograms accumulated by other modules. |

| c_InternalSerializer | This module is an internal serializer/deserializer for parallel processing. |

| c_TerminateInAllProcesses | When using parallel processing, call this module's terminate() function in all processes. This will also ensure that there is exactly one process (single-core if no parallel modules found) or at least one input, one main and one output process. |

| c_DontCollectStatistics | No statistics are collected for this module. |
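Because the enumerator values are distinct powers of two, several flags can be combined with a bitwise OR and queried with a mask. The following is a minimal sketch of that idea, not the framework's implementation: `FlagHolder` and `flagsExample` are hypothetical names, and the body of `hasProperties` is an assumption based only on its documented behaviour ("true if all specified property flags are available").

```cpp
#include <cassert>

// Flag values copied from the enumerator list of EModulePropFlags.
enum EModulePropFlags : unsigned int {
  c_Input = 1,
  c_Output = 2,
  c_ParallelProcessingCertified = 4,
  c_HistogramManager = 8,
  c_InternalSerializer = 16,
  c_TerminateInAllProcesses = 32,
  c_DontCollectStatistics = 64
};

// Hypothetical stand-in for a module; only the flag handling is modelled.
class FlagHolder {
public:
  // Store the bitwise OR of the requested flags.
  void setPropertyFlags(unsigned int propertyFlags) { m_propertyFlags = propertyFlags; }

  // Assumed semantics: true only if every requested bit is set.
  bool hasProperties(unsigned int propertyFlags) const {
    return (m_propertyFlags & propertyFlags) == propertyFlags;
  }

private:
  unsigned int m_propertyFlags = 0;
};

// Usage example: an input module that is certified for parallel processing.
inline void flagsExample() {
  FlagHolder holder;
  holder.setPropertyFlags(c_Input | c_ParallelProcessingCertified);
  assert(holder.hasProperties(c_Input));
  assert(!holder.hasProperties(c_Input | c_Output));  // c_Output is missing
}
```

Querying a combined mask succeeds only when all of its bits are present, which matches the "all specified property flags" wording of `hasProperties()`.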

Constructor, for setting module description and parameters.

Definition at line 46 of file GRLNeuroTrainerModule.cc.

|

inline virtual |

|

inline virtual inherited |

Called when entering a new run.

Called at the beginning of each run, the method gives you the chance to change run dependent constants like alignment parameters, etc.

This method can be implemented by subclasses.

Reimplemented in AnalysisPhase1StudyModule, ARICHBackgroundModule, arichBtestModule, ARICHDigitizerModule, ARICHDQMModule, ARICHRateCalModule, ARICHReconstructorModule, AWESOMEBasicModule, B2BIIConvertBeamParamsModule, B2BIIConvertMdstModule, B2BIIFixMdstModule, B2BIIMCParticlesMonitorModule, B2BIIMdstInputModule, BeamabortModule, BeamabortStudyModule, BeamDigitizerModule, BeamBkgGeneratorModule, BeamBkgHitRateMonitorModule, BeamBkgMixerModule, BeamBkgNeutronModule, BeamBkgTagSetterModule, BeamSpotMonitorModule, BelleMCOutputModule, BgoDigitizerModule, BgoModule, BgoStudyModule, BGOverlayInputModule, BKLMAnaModule, BKLMDigitAnalyzerModule, BKLMSimHistogrammerModule, BKLMTrackingModule, CalibrationCollectorModule, CaveModule, CDCCosmicAnalysisModule, CDCCRTestModule, CDCPackerModule, CDCRecoTrackFilterModule, CDCUnpackerModule, CDCDedxDQMModule, CDCDedxValidationModule, cdcDQM7Module, CDCDQMModule, CDCTrigger2DFinderModule, CDCTriggerNDFinderModule, CDCTriggerNeuroDQMModule, CDCTriggerNeuroDQMOnlineModule, CDCTriggerTSFModule, CDCTriggerUnpackerModule, CertifyParallelModule, ChargedPidMVAModule, ChargedPidMVAMulticlassModule, ClawDigitizerModule, ClawModule, ClawStudyModule, ClawsDigitizerModule, CLAWSModule, ClawsStudyModule, Convert2RawDetModule, CosmicsAlignmentValidationModule, CreateFieldMapModule, CsiDigitizer_v2Module, CsIDigitizerModule, CsiModule, CsiStudy_v2Module, CsIStudyModule, CurlTaggerModule, DAQMonitorModule, DAQPerfModule, DataWriterModule, DelayDQMModule, DeSerializerPXDModule, DetectorOccupanciesDQMModule, DosiDigitizerModule, DosiModule, DosiStudyModule, DQMHistAnalysisARICHModule, DQMHistAnalysisCDCDedxModule, DQMHistAnalysisCDCEpicsModule, DQMHistAnalysisCDCMonObjModule, DQMHistAnalysisDAQMonObjModule, DQMHistAnalysisDeltaEpicsMonObjExampleModule, DQMHistAnalysisDeltaTestModule, DQMHistAnalysisECLConnectedRegionsModule, DQMHistAnalysisECLModule, DQMHistAnalysisECLShapersModule, DQMHistAnalysisECLSummaryModule, DQMHistAnalysisEcmsMonObjModule, 
DQMHistAnalysisEpicsExampleModule, DQMHistAnalysisEpicsOutputModule, DQMHistAnalysisEventT0EfficiencyModule, DQMHistAnalysisEventT0TriggerJitterModule, DQMHistAnalysisExampleFlagsModule, DQMHistAnalysisExampleModule, DQMHistAnalysisHLTModule, DQMHistAnalysisInput2Module, DQMHistAnalysisInputPVSrvModule, DQMHistAnalysisInputRootFileModule, DQMHistAnalysisInputTestModule, DQMHistAnalysisKLM2Module, DQMHistAnalysisKLMModule, DQMHistAnalysisKLMMonObjModule, DQMHistAnalysisMiraBelleModule, DQMHistAnalysisOutputMonObjModule, DQMHistAnalysisOutputRelayMsgModule, DQMHistAnalysisPeakModule, DQMHistAnalysisPhysicsModule, DQMHistAnalysisPXDChargeModule, DQMHistAnalysisPXDCMModule, DQMHistAnalysisPXDDAQModule, DQMHistAnalysisPXDEffModule, DQMHistAnalysisPXDERModule, DQMHistAnalysisPXDFitsModule, DQMHistAnalysisPXDInjectionModule, DQMHistAnalysisPXDReductionModule, DQMHistAnalysisPXDTrackChargeModule, DQMHistAnalysisRooFitExampleModule, DQMHistAnalysisRunNrModule, DQMHistAnalysisSVDClustersOnTrackModule, DQMHistAnalysisSVDDoseModule, DQMHistAnalysisSVDEfficiencyModule, DQMHistAnalysisSVDGeneralModule, DQMHistAnalysisSVDOccupancyModule, DQMHistAnalysisSVDOnMiraBelleModule, DQMHistAnalysisSVDUnpackerModule, DQMHistAnalysisTOPModule, DQMHistAnalysisTrackingAbortModule, DQMHistAnalysisTrackingHLTModule, DQMHistAnalysisTRGECLModule, DQMHistAnalysisTRGGDLModule, DQMHistAnalysisTRGModule, DQMHistAutoCanvasModule, DQMHistComparitorModule, DQMHistDeltaHistoModule, DQMHistInjectionModule, DqmHistoManagerModule, DQMHistoModuleBase, DQMHistOutputToEPICSModule, DQMHistReferenceModule, DQMHistSnapshotsModule, Ds2RawFileModule, Ds2RawModule, Ds2RbufModule, Ds2SampleModule, ECLDQMInjectionModule, ECLLOMModule, ECLBackgroundModule, EclBackgroundStudyModule, ECLChargedPIDDataAnalysisModule, ECLChargedPIDDataAnalysisValidationModule, ECLChargedPIDModule, ECLChargedPIDMVAModule, ECLClusterPSDModule, ECLCovarianceMatrixModule, ECLCRFinderModule, ECLDataAnalysisModule, ECLDigitCalibratorModule, 
ECLDigitizerModule, ECLDigitizerPureCsIModule, EclDisplayModule, ECLDQMConnectedRegionsModule, ECLDQMEXTENDEDModule, ECLDQMModule, ECLDQMOutOfTimeDigitsModule, ECLExpertModule, ECLFinalizerModule, ECLHitDebugModule, ECLLocalMaximumFinderModule, ECLLocalRunCalibratorModule, ECLPackerModule, ECLShowerCorrectorModule, ECLShowerShapeModule, ECLSplitterN1Module, ECLSplitterN2Module, ECLUnpackerModule, ECLWaveformFitModule, EffPlotsModule, EKLMDataCheckerModule, ElapsedTimeModule, EnergyBiasCorrectionModule, EventInfoPrinterModule, EventLimiterModule, EventsOfDoomBusterModule, EventT0DQMModule, EventT0ValidationModule, EvReductionModule, EvtGenDecayModule, EvtGenInputModule, ExportGeometryModule, ExtModule, FANGSDigitizerModule, FANGSModule, FANGSStudyModule, FastRbuf2DsModule, FlippedRecoTracksMergerModule, FlipQualityModule, FullSimModule, TRGGDLUnpackerModule, GearboxModule, GenRawSendModule, GetEventFromSocketModule, GRLNeuroModule, He3DigitizerModule, He3tubeModule, He3tubeStudyModule, HistoManagerModule, HistoModule, HitXPModule, HLTDQM2ZMQModule, HLTPrefilterModule, IoVDependentConditionModule, IPDQMModule, KinkFinderModule, KKGenInputModule, KLMClusterAnaModule, KLMClusterEfficiencyModule, KLMClustersReconstructorModule, KLMDigitizerModule, KLMDigitTimeShifterModule, KLMDQM2Module, KLMDQMModule, KLMExpertModule, KLMMuonIDDNNExpertModule, KLMPackerModule, KLMReconstructorModule, KLMScintillatorSimulatorModule, KLMTrackingModule, KLMTriggerModule, KLMUnpackerModule, KlongValidationModule, LowEnergyPi0IdentificationExpertModule, LowEnergyPi0VetoExpertModule, MaterialScanModule, MCMatcherTRGECLModule, MCTrackCandClassifierModule, MCV0MatcherModule, MicrotpcModule, MicrotpcStudyModule, TpcDigitizerModule, MonitorDataModule, MuidModule, MVAExpertModule, MVAMultipleExpertsModule, MVAPrototypeModule, NtuplePhase1_v6Module, OverrideGenerationFlagsModule, PartialSeqRootReaderModule, ParticleVertexFitterModule, Ph1bpipeModule, Ph1sustrModule, 
PhotonEfficiencySystematicsModule, PhysicsObjectsDQMModule, PhysicsObjectsMiraBelleBhabhaModule, PhysicsObjectsMiraBelleDst2Module, PhysicsObjectsMiraBelleDstModule, PhysicsObjectsMiraBelleEcmsBBModule, PhysicsObjectsMiraBelleHadronModule, PhysicsObjectsMiraBelleModule, PinDigitizerModule, PindiodeModule, PindiodeStudyModule, PlumeDigitizerModule, PlumeModule, ProgressModule, PXDBackgroundModule, PXDBgTupleProducerModule, PXDClusterizerModule, PXDDAQDQMModule, PXDDigitizerModule, PXDGatedDHCDQMModule, PXDGatedModeDQMModule, PXDInjectionDQMModule, PXDMCBgTupleProducerModule, PXDPackerModule, PXDRawDQMChipsModule, PXDRawDQMModule, PXDROIDQMModule, PXDUnpackerModule, PXDclusterFilterModule, PXDClustersFromTracksModule, PXDdigiFilterModule, PXDDQMClustersModule, PXDDQMCorrModule, PXDDQMEfficiencyModule, PXDDQMEfficiencySelftrackModule, PXDDQMExpressRecoModule, PXDPerformanceModule, PXDRawDQMCorrModule, PXDROIFinderAnalysisModule, PXDROIFinderModule, PXDTrackClusterDQMModule, PyModule, QcsmonitorDigitizerModule, QcsmonitorModule, QcsmonitorStudyModule, QualityEstimatorVXDModule, RandomBarrierModule, Raw2DsModule, RawInputModule, Rbuf2DsModule, Rbuf2RbufModule, ReceiveEventModule, RecoTracksReverterModule, ReprocessorModule, RxModule, RxSocketModule, SecMapTrainerBaseModule, SecMapTrainerVXDTFModule, SectorMapBootstrapModule, SegmentNetworkProducerModule, SeqRootInputModule, SeqRootMergerModule, SeqRootOutputModule, SerializerModule, SoftwareTriggerHLTDQMModule, SoftwareTriggerModule, StatisticsTimingHLTDQMModule, SPTCmomentumSeedRetrieverModule, SPTCvirtualIPRemoverModule, SrsensorModule, StatisticsSummaryModule, StorageDeserializerModule, StorageSerializerModule, SubEventModule, SVD3SamplesEmulatorModule, SVDBackgroundModule, SVDClusterizerModule, SVDDigitizerModule, SVDDQMDoseModule, SVDDQMInjectionModule, SVDMissingAPVsClusterCreatorModule, SVDPackerModule, SVDRecoDigitCreatorModule, SVDUnpackerModule, SVDB4CommissioningPlotsModule, 
SVDClusterCalibrationsMonitorModule, SVDClusterEvaluationModule, SVDClusterEvaluationTrueInfoModule, SVDClusterFilterModule, SVDCoGTimeEstimatorModule, SVDDataFormatCheckModule, SVDDQMClustersOnTrackModule, SVDDQMExpressRecoModule, SVDDQMHitTimeModule, svdDumpModule, SVDEventInfoSetterModule, SVDEventT0EstimatorModule, SVDHotStripFinderModule, SVDLatencyCalibrationModule, SVDLocalCalibrationsCheckModule, SVDLocalCalibrationsMonitorModule, SVDMaxStripTTreeModule, SVDOccupancyAnalysisModule, SVDPerformanceModule, SVDPerformanceTTreeModule, SVDPositionErrorScaleFactorImporterModule, SVDROIFinderAnalysisModule, SVDROIFinderModule, SVDShaperDigitsFromTracksModule, SVDSpacePointCreatorModule, SVDTimeCalibrationsMonitorModule, SVDTimeGroupingModule, SVDTriggerQualityGeneratorModule, SVDUnpackerDQMModule, SwitchDataStoreModule, TagVertexModule, TOPBackgroundModule, TOPBunchFinderModule, TOPChannelMaskerModule, TOPChannelT0MCModule, TOPDigitizerModule, TOPDoublePulseGeneratorModule, TOPDQMModule, TOPGainEfficiencyCalculatorModule, TOPInterimFENtupleModule, TOPLaserCalibratorModule, TOPLaserHitSelectorModule, TOPMCTrackMakerModule, TOPModuleT0CalibratorModule, TOPNtupleModule, TOPPackerModule, TOPRawDigitConverterModule, TOPTBCComparatorModule, TOPTimeBaseCalibratorModule, TOPTimeRecalibratorModule, TOPTriggerDigitizerModule, TOPUnpackerModule, TOPWaveformFeatureExtractorModule, TOPXTalkChargeShareSetterModule, TrackAnaModule, TrackCreatorModule, TrackFinderMCTruthRecoTracksModule, TrackFinderVXDBasicPathFinderModule, TrackFinderVXDCellOMatModule, TrackFinderVXDAnalizerModule, TrackingAbortDQMModule, TrackingEnergyLossCorrectionModule, TrackingMomentumScaleFactorsModule, TrackingPerformanceEvaluationModule, FindletModule< AFindlet >, FindletModule< AsicBackgroundLibraryCreator >, FindletModule< AxialSegmentPairCreator >, FindletModule< AxialSegmentPairCreator >, FindletModule< AxialStraightTrackFinder >, FindletModule< AxialStraightTrackFinder >, FindletModule< 
AxialTrackCreatorMCTruth >, FindletModule< AxialTrackCreatorMCTruth >, FindletModule< AxialTrackCreatorSegmentHough >, FindletModule< AxialTrackCreatorSegmentHough >, FindletModule< AxialTrackFinderHough >, FindletModule< AxialTrackFinderHough >, FindletModule< AxialTrackFinderLegendre >, FindletModule< AxialTrackFinderLegendre >, FindletModule< CDCHitsRemover >, FindletModule< CDCTrackingEventLevelMdstInfoFillerFromHitsFindlet >, FindletModule< CDCTrackingEventLevelMdstInfoFillerFromSegmentsFindlet >, FindletModule< CKFToCDCFindlet >, FindletModule< CKFToCDCFromEclFindlet >, FindletModule< CKFToPXDFindlet >, FindletModule< CKFToSVDFindlet >, FindletModule< CKFToSVDSeedFindlet >, FindletModule< ClusterBackgroundDetector >, FindletModule< ClusterBackgroundDetector >, FindletModule< ClusterPreparer >, FindletModule< ClusterPreparer >, FindletModule< ClusterRefiner< BridgingWireHitRelationFilter > >, FindletModule< ClusterRefiner< BridgingWireHitRelationFilter > >, FindletModule< CosmicsTrackMergerFindlet >, FindletModule< DATCONFPGAFindlet >, FindletModule< FacetCreator >, FindletModule< FacetCreator >, FindletModule< HitBasedT0Extractor >, FindletModule< HitBasedT0Extractor >, FindletModule< HitReclaimer >, FindletModule< HitReclaimer >, FindletModule< MCVXDCDCTrackMergerFindlet >, FindletModule< MonopoleAxialTrackFinderLegendre >, FindletModule< MonopoleAxialTrackFinderLegendre >, FindletModule< MonopoleStereoHitFinder >, FindletModule< MonopoleStereoHitFinder >, FindletModule< MonopoleStereoHitFinderQuadratic >, FindletModule< MonopoleStereoHitFinderQuadratic >, FindletModule< SegmentCreatorFacetAutomaton >, FindletModule< SegmentCreatorFacetAutomaton >, FindletModule< SegmentCreatorMCTruth >, FindletModule< SegmentCreatorMCTruth >, FindletModule< SegmentFinderFacetAutomaton >, FindletModule< SegmentFinderFacetAutomaton >, FindletModule< SegmentFitter >, FindletModule< SegmentFitter >, FindletModule< SegmentLinker >, FindletModule< SegmentLinker >, FindletModule< 
SegmentOrienter >, FindletModule< SegmentOrienter >, FindletModule< SegmentPairCreator >, FindletModule< SegmentPairCreator >, FindletModule< SegmentRejecter >, FindletModule< SegmentRejecter >, FindletModule< SegmentTrackCombiner >, FindletModule< SegmentTrackCombiner >, FindletModule< SegmentTripleCreator >, FindletModule< SegmentTripleCreator >, FindletModule< StereoHitFinder >, FindletModule< StereoHitFinder >, FindletModule< SuperClusterCreator >, FindletModule< SuperClusterCreator >, FindletModule< TrackCombiner >, FindletModule< TrackCombiner >, FindletModule< TrackCreatorSegmentPairAutomaton >, FindletModule< TrackCreatorSegmentPairAutomaton >, FindletModule< TrackCreatorSegmentTripleAutomaton >, FindletModule< TrackCreatorSegmentTripleAutomaton >, FindletModule< TrackCreatorSingleSegments >, FindletModule< TrackCreatorSingleSegments >, FindletModule< TrackExporter >, FindletModule< TrackExporter >, FindletModule< TrackFinder >, FindletModule< TrackFinderAutomaton >, FindletModule< TrackFinderCosmics >, FindletModule< TrackFinderSegmentPairAutomaton >, FindletModule< TrackFinderSegmentPairAutomaton >, FindletModule< TrackFinderSegmentTripleAutomaton >, FindletModule< TrackFinderSegmentTripleAutomaton >, FindletModule< TrackFlightTimeAdjuster >, FindletModule< TrackFlightTimeAdjuster >, FindletModule< TrackingUtilities::FindletStoreArrayInput< BaseEventTimeExtractorModuleFindlet< AFindlet > > >, FindletModule< TrackingUtilities::FindletStoreArrayInput< BaseEventTimeExtractorModuleFindlet< AFindlet > > >, FindletModule< TrackLinker >, FindletModule< TrackLinker >, FindletModule< TrackOrienter >, FindletModule< TrackOrienter >, FindletModule< TrackQualityAsserter >, FindletModule< TrackQualityAsserter >, FindletModule< TrackQualityEstimator >, FindletModule< TrackQualityEstimator >, FindletModule< TrackRejecter >, FindletModule< TrackRejecter >, FindletModule< vxdHoughTracking::SVDHoughTracking >, FindletModule< WireHitBackgroundDetector >, FindletModule< 
WireHitBackgroundDetector >, FindletModule< WireHitCreator >, FindletModule< WireHitCreator >, FindletModule< WireHitPreparer >, FindletModule< WireHitPreparer >, TrackQETrainingDataCollectorModule, TrackQualityEstimatorMVAModule, TreeFitterModule, TRGCDCETFUnpackerModule, TRGCDCModule, TRGCDCT2DDQMModule, TRGCDCT3DConverterModule, TRGCDCT3DDQMModule, TRGCDCT3DUnpackerModule, TRGCDCTSFUnpackerModule, TRGCDCTSFDQMModule, TRGCDCTSStreamModule, TRGECLBGTCHitModule, TRGECLDQMModule, TRGECLEventTimingDQMModule, TRGECLFAMModule, TRGECLModule, TRGECLQAMModule, TRGECLRawdataAnalysisModule, TRGECLTimingCalModule, TRGECLUnpackerModule, TRGGDLDQMModule, TRGGDLDSTModule, TRGGDLModule, TRGGDLSummaryModule, TRGGRLDQMModule, TRGGRLInjectionVetoFromOverlayModule, TRGGRLMatchModule, TRGGRLModule, TRGGRLProjectsModule, TRGGRLUnpackerModule, TRGRAWDATAModule, TRGTOPDQMModule, TRGTOPTRD2TTSConverterModule, TRGTOPUnpackerModule, TRGTOPUnpackerWaveformModule, TRGTOPWaveformPlotterModule, TTDDQMModule, TxModule, TxSocketModule, V0findingPerformanceEvaluationModule, V0ObjectsDQMModule, VXDMisalignmentModule, vxdDigitMaskingModule, VXDDQMExpressRecoModule, VXDQETrainingDataCollectorModule, VXDQualityEstimatorMVAModule, VXDSimpleClusterizerModule, and VXDTFTrainingDataCollectorModule.

Definition at line 146 of file Module.h.

|

override virtual inherited |

Create an independent copy of this module.

Note that parameters are shared, so changing them on a cloned module will also affect the original module.

Implements PathElement.

Definition at line 179 of file Module.cc.
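The note about shared parameters can be illustrated with a small model. This is a sketch under stated assumptions, not the code in Module.cc: `SketchModule`, its `setParam`/`getParam` helpers, the `cloneExample` function and the parameter name `"nThreads"` are all hypothetical, and the sharing is modelled with a `std::shared_ptr` to a plain map.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical miniature of a module whose parameter storage is shared by its clones.
class SketchModule {
public:
  SketchModule() : m_params(std::make_shared<std::map<std::string, int>>()) {}

  void setParam(const std::string& name, int value) { (*m_params)[name] = value; }
  int getParam(const std::string& name) const { return m_params->at(name); }

  // The copy is a distinct object, but the shared_ptr member still points
  // to the same parameter map as the original.
  std::shared_ptr<SketchModule> clone() const {
    return std::make_shared<SketchModule>(*this);
  }

private:
  std::shared_ptr<std::map<std::string, int>> m_params;
};

// Usage example: a change made through the clone is visible in the original.
inline void cloneExample() {
  SketchModule original;
  original.setParam("nThreads", 1);
  auto copy = original.clone();
  copy->setParam("nThreads", 4);
  assert(original.getParam("nThreads") == 4);
}
```

In this model, code that needs independent parameters for a copy would have to set them on a freshly constructed object rather than on a clone.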

|

inline protected virtual inherited |

Wrapper method for the virtual function beginRun() that has the implementation to be used in a call from Python.

Reimplemented in PyModule.

Definition at line 425 of file Module.h.

|

inline protected virtual inherited |

|

inline protected virtual inherited |

|

inline protected virtual inherited |

Wrappers to make the methods without "def_" prefix callable from Python.

Overridden in PyModule. Wrapper method for the virtual function initialize() that has the implementation to be used in a call from Python.

Reimplemented in PyModule.

Definition at line 419 of file Module.h.

|

inline protected virtual inherited |

Wrapper method for the virtual function terminate() that has the implementation to be used in a call from Python.

Reimplemented in PyModule.

Definition at line 444 of file Module.h.

|

inline virtual inherited |

This method is called if the current run ends.

Use this method to store information that should be aggregated over one run.

This method can be implemented by subclasses.

Reimplemented in AlignDQMModule, AnalysisPhase1StudyModule, arichBtestModule, ARICHDQMModule, AWESOMEBasicModule, B2BIIConvertMdstModule, B2BIIMCParticlesMonitorModule, B2BIIMdstInputModule, BeamabortModule, BeamabortStudyModule, BeamDigitizerModule, BeamBkgGeneratorModule, BeamBkgHitRateMonitorModule, BeamBkgMixerModule, BeamBkgNeutronModule, BeamBkgTagSetterModule, BelleMCOutputModule, BgoDigitizerModule, BgoModule, BgoStudyModule, BGOverlayInputModule, BKLMAnaModule, BKLMDigitAnalyzerModule, BKLMSimHistogrammerModule, BKLMTrackingModule, CalibrationCollectorModule, CaveModule, CDCCosmicAnalysisModule, CDCCRTestModule, CDCPackerModule, CDCRecoTrackFilterModule, CDCUnpackerModule, CDCDedxDQMModule, CDCDedxValidationModule, cdcDQM7Module, CDCDQMModule, CDCTriggerNDFinderModule, CDCTriggerNeuroDQMModule, CDCTriggerNeuroDQMOnlineModule, CertifyParallelModule, ClawDigitizerModule, ClawModule, ClawStudyModule, ClawsDigitizerModule, CLAWSModule, ClawsStudyModule, Convert2RawDetModule, CosmicsAlignmentValidationModule, CsiDigitizer_v2Module, CsIDigitizerModule, CsiModule, CsiStudy_v2Module, CsIStudyModule, CurlTaggerModule, DAQPerfModule, DataWriterModule, DeSerializerPXDModule, DosiDigitizerModule, DosiModule, DosiStudyModule, DQMHistAnalysisARICHModule, DQMHistAnalysisARICHMonObjModule, DQMHistAnalysisCDCDedxModule, DQMHistAnalysisCDCEpicsModule, DQMHistAnalysisCDCMonObjModule, DQMHistAnalysisDAQMonObjModule, DQMHistAnalysisDeltaEpicsMonObjExampleModule, DQMHistAnalysisDeltaTestModule, DQMHistAnalysisECLConnectedRegionsModule, DQMHistAnalysisECLModule, DQMHistAnalysisECLOutOfTimeDigitsModule, DQMHistAnalysisECLShapersModule, DQMHistAnalysisECLSummaryModule, DQMHistAnalysisEcmsMonObjModule, DQMHistAnalysisEpicsExampleModule, DQMHistAnalysisEpicsOutputModule, DQMHistAnalysisEventT0TriggerJitterModule, DQMHistAnalysisExampleFlagsModule, DQMHistAnalysisExampleModule, DQMHistAnalysisHLTMonObjModule, DQMHistAnalysisInput2Module, DQMHistAnalysisInputPVSrvModule, 
DQMHistAnalysisInputTestModule, DQMHistAnalysisKLM2Module, DQMHistAnalysisKLMModule, DQMHistAnalysisKLMMonObjModule, DQMHistAnalysisMiraBelleModule, DQMHistAnalysisMonObjModule, DQMHistAnalysisOutputFileModule, DQMHistAnalysisOutputMonObjModule, DQMHistAnalysisOutputRelayMsgModule, DQMHistAnalysisPhysicsModule, DQMHistAnalysisPXDChargeModule, DQMHistAnalysisPXDFitsModule, DQMHistAnalysisPXDTrackChargeModule, DQMHistAnalysisRooFitExampleModule, DQMHistAnalysisSVDClustersOnTrackModule, DQMHistAnalysisSVDDoseModule, DQMHistAnalysisSVDEfficiencyModule, DQMHistAnalysisSVDGeneralModule, DQMHistAnalysisSVDOccupancyModule, DQMHistAnalysisSVDOnMiraBelleModule, DQMHistAnalysisSVDUnpackerModule, DQMHistAnalysisTOPModule, DQMHistAnalysisTRGECLModule, DQMHistAnalysisTRGEFFModule, DQMHistAnalysisTRGGDLModule, DQMHistAnalysisTRGModule, DQMHistComparitorModule, DQMHistDeltaHistoModule, DqmHistoManagerModule, DQMHistOutputToEPICSModule, DQMHistReferenceModule, DQMHistSnapshotsModule, Ds2RawFileModule, Ds2RawModule, Ds2RbufModule, Ds2SampleModule, ECLLOMModule, ECLBackgroundModule, EclBackgroundStudyModule, ECLChargedPIDDataAnalysisModule, ECLChargedPIDDataAnalysisValidationModule, ECLChargedPIDModule, ECLClusterPSDModule, ECLCovarianceMatrixModule, ECLCRFinderModule, ECLDataAnalysisModule, ECLDigitCalibratorModule, ECLDigitizerModule, ECLDigitizerPureCsIModule, EclDisplayModule, ECLDQMEXTENDEDModule, ECLDQMModule, ECLFinalizerModule, ECLHitDebugModule, ECLLocalMaximumFinderModule, ECLLocalRunCalibratorModule, ECLPackerModule, ECLShowerCorrectorModule, ECLShowerShapeModule, ECLSplitterN1Module, ECLSplitterN2Module, ECLUnpackerModule, ECLWaveformFitModule, EffPlotsModule, EKLMDataCheckerModule, ElapsedTimeModule, EventInfoPrinterModule, EventT0ValidationModule, EvReductionModule, EvtGenDecayModule, ExtModule, FANGSDigitizerModule, FANGSModule, FANGSStudyModule, FastRbuf2DsModule, FullSimModule, TRGGDLUnpackerModule, GenfitVisModule, GenRawSendModule, GetEventFromSocketModule, 
He3DigitizerModule, He3tubeModule, He3tubeStudyModule, HistoManagerModule, HistoModule, HitXPModule, HLTDQM2ZMQModule, HLTDs2ZMQModule, KLMClusterEfficiencyModule, KLMClustersReconstructorModule, KLMDigitizerModule, KLMDQM2Module, KLMDQMModule, KLMMuonIDDNNExpertModule, KLMPackerModule, KLMReconstructorModule, KLMScintillatorSimulatorModule, KLMTrackingModule, KLMTriggerModule, KLMUnpackerModule, KlongValidationModule, LowEnergyPi0IdentificationExpertModule, LowEnergyPi0VetoExpertModule, MCMatcherTRGECLModule, MCTrackCandClassifierModule, MCV0MatcherModule, MicrotpcModule, MicrotpcStudyModule, TpcDigitizerModule, TPCStudyModule, MonitorDataModule, MuidModule, NoKickCutsEvalModule, NtuplePhase1_v6Module, OverrideGenerationFlagsModule, PartialSeqRootReaderModule, Ph1bpipeModule, Ph1sustrModule, PhysicsObjectsDQMModule, PhysicsObjectsMiraBelleBhabhaModule, PhysicsObjectsMiraBelleDst2Module, PhysicsObjectsMiraBelleDstModule, PhysicsObjectsMiraBelleHadronModule, PhysicsObjectsMiraBelleModule, PinDigitizerModule, PindiodeModule, PindiodeStudyModule, PlumeDigitizerModule, PlumeModule, PrintDataModule, PrintEventRateModule, PXDBackgroundModule, PXDClustersFromTracksModule, PXDPerformanceModule, PXDROIFinderModule, PyModule, QcsmonitorDigitizerModule, QcsmonitorModule, QcsmonitorStudyModule, RandomBarrierModule, Raw2DsModule, RawInputModule, Rbuf2DsModule, Rbuf2RbufModule, ReceiveEventModule, ReprocessorModule, Root2BinaryModule, Root2RawModule, RT2SPTCConverterModule, RxModule, RxSocketModule, SecMapTrainerBaseModule, SecMapTrainerVXDTFModule, SectorMapBootstrapModule, SeqRootInputModule, SeqRootMergerModule, SeqRootOutputModule, SerializerModule, SPTCmomentumSeedRetrieverModule, SPTCvirtualIPRemoverModule, SrsensorModule, StatisticsSummaryModule, StorageDeserializerModule, StorageRootOutputModule, StorageSerializerModule, SubEventModule, SVD3SamplesEmulatorModule, SVDBackgroundModule, SVDClusterizerModule, SVDPackerModule, SVDRecoDigitCreatorModule, SVDUnpackerModule, 
SVDB4CommissioningPlotsModule, SVDClusterCalibrationsMonitorModule, SVDClusterEvaluationModule, SVDClusterEvaluationTrueInfoModule, SVDClusterFilterModule, SVDCoGTimeEstimatorModule, SVDDataFormatCheckModule, svdDumpModule, SVDHotStripFinderModule, SVDLatencyCalibrationModule, SVDLocalCalibrationsMonitorModule, SVDOccupancyAnalysisModule, SVDPerformanceModule, SVDPositionErrorScaleFactorImporterModule, SVDROIDQMModule, SVDROIFinderAnalysisModule, SVDROIFinderModule, SVDShaperDigitsFromTracksModule, SVDTimeCalibrationsMonitorModule, SVDTriggerQualityGeneratorModule, SVDUnpackerDQMModule, SwitchDataStoreModule, TOPBackgroundModule, TOPChannelT0MCModule, TOPDoublePulseGeneratorModule, TOPGainEfficiencyCalculatorModule, TOPInterimFENtupleModule, TOPLaserCalibratorModule, TOPLaserHitSelectorModule, TOPMCTrackMakerModule, TOPNtupleModule, TOPPackerModule, TOPRawDigitConverterModule, TOPTBCComparatorModule, TOPTimeBaseCalibratorModule, TOPTriggerDigitizerModule, TOPUnpackerModule, TOPWaveformFeatureExtractorModule, TOPWaveformQualityPlotterModule, TOPXTalkChargeShareSetterModule, TrackAnaModule, TrackFinderMCTruthRecoTracksModule, TrackFinderVXDAnalizerModule, TrackingPerformanceEvaluationModule, FindletModule< AFindlet >, FindletModule< AsicBackgroundLibraryCreator >, FindletModule< AxialSegmentPairCreator >, FindletModule< AxialSegmentPairCreator >, FindletModule< AxialStraightTrackFinder >, FindletModule< AxialStraightTrackFinder >, FindletModule< AxialTrackCreatorMCTruth >, FindletModule< AxialTrackCreatorMCTruth >, FindletModule< AxialTrackCreatorSegmentHough >, FindletModule< AxialTrackCreatorSegmentHough >, FindletModule< AxialTrackFinderHough >, FindletModule< AxialTrackFinderHough >, FindletModule< AxialTrackFinderLegendre >, FindletModule< AxialTrackFinderLegendre >, FindletModule< CDCHitsRemover >, FindletModule< CDCTrackingEventLevelMdstInfoFillerFromHitsFindlet >, FindletModule< CDCTrackingEventLevelMdstInfoFillerFromSegmentsFindlet >, FindletModule< 
CKFToCDCFindlet >, FindletModule< CKFToCDCFromEclFindlet >, FindletModule< CKFToPXDFindlet >, FindletModule< CKFToSVDFindlet >, FindletModule< CKFToSVDSeedFindlet >, FindletModule< ClusterBackgroundDetector >, FindletModule< ClusterBackgroundDetector >, FindletModule< ClusterPreparer >, FindletModule< ClusterPreparer >, FindletModule< ClusterRefiner< BridgingWireHitRelationFilter > >, FindletModule< ClusterRefiner< BridgingWireHitRelationFilter > >, FindletModule< CosmicsTrackMergerFindlet >, FindletModule< DATCONFPGAFindlet >, FindletModule< FacetCreator >, FindletModule< FacetCreator >, FindletModule< HitBasedT0Extractor >, FindletModule< HitBasedT0Extractor >, FindletModule< HitReclaimer >, FindletModule< HitReclaimer >, FindletModule< MCVXDCDCTrackMergerFindlet >, FindletModule< MonopoleAxialTrackFinderLegendre >, FindletModule< MonopoleAxialTrackFinderLegendre >, FindletModule< MonopoleStereoHitFinder >, FindletModule< MonopoleStereoHitFinder >, FindletModule< MonopoleStereoHitFinderQuadratic >, FindletModule< MonopoleStereoHitFinderQuadratic >, FindletModule< SegmentCreatorFacetAutomaton >, FindletModule< SegmentCreatorFacetAutomaton >, FindletModule< SegmentCreatorMCTruth >, FindletModule< SegmentCreatorMCTruth >, FindletModule< SegmentFinderFacetAutomaton >, FindletModule< SegmentFinderFacetAutomaton >, FindletModule< SegmentFitter >, FindletModule< SegmentFitter >, FindletModule< SegmentLinker >, FindletModule< SegmentLinker >, FindletModule< SegmentOrienter >, FindletModule< SegmentOrienter >, FindletModule< SegmentPairCreator >, FindletModule< SegmentPairCreator >, FindletModule< SegmentRejecter >, FindletModule< SegmentRejecter >, FindletModule< SegmentTrackCombiner >, FindletModule< SegmentTrackCombiner >, FindletModule< SegmentTripleCreator >, FindletModule< SegmentTripleCreator >, FindletModule< StereoHitFinder >, FindletModule< StereoHitFinder >, FindletModule< SuperClusterCreator >, FindletModule< SuperClusterCreator >, FindletModule< TrackCombiner 
>, FindletModule< TrackCombiner >, FindletModule< TrackCreatorSegmentPairAutomaton >, FindletModule< TrackCreatorSegmentPairAutomaton >, FindletModule< TrackCreatorSegmentTripleAutomaton >, FindletModule< TrackCreatorSegmentTripleAutomaton >, FindletModule< TrackCreatorSingleSegments >, FindletModule< TrackCreatorSingleSegments >, FindletModule< TrackExporter >, FindletModule< TrackExporter >, FindletModule< TrackFinder >, FindletModule< TrackFinderAutomaton >, FindletModule< TrackFinderCosmics >, FindletModule< TrackFinderSegmentPairAutomaton >, FindletModule< TrackFinderSegmentPairAutomaton >, FindletModule< TrackFinderSegmentTripleAutomaton >, FindletModule< TrackFinderSegmentTripleAutomaton >, FindletModule< TrackFlightTimeAdjuster >, FindletModule< TrackFlightTimeAdjuster >, FindletModule< TrackingUtilities::FindletStoreArrayInput< BaseEventTimeExtractorModuleFindlet< AFindlet > > >, FindletModule< TrackingUtilities::FindletStoreArrayInput< BaseEventTimeExtractorModuleFindlet< AFindlet > > >, FindletModule< TrackLinker >, FindletModule< TrackLinker >, FindletModule< TrackOrienter >, FindletModule< TrackOrienter >, FindletModule< TrackQualityAsserter >, FindletModule< TrackQualityAsserter >, FindletModule< TrackQualityEstimator >, FindletModule< TrackQualityEstimator >, FindletModule< TrackRejecter >, FindletModule< TrackRejecter >, FindletModule< vxdHoughTracking::SVDHoughTracking >, FindletModule< WireHitBackgroundDetector >, FindletModule< WireHitBackgroundDetector >, FindletModule< WireHitCreator >, FindletModule< WireHitCreator >, FindletModule< WireHitPreparer >, FindletModule< WireHitPreparer >, TrackSetEvaluatorHopfieldNNDEVModule, TRGCDCETFUnpackerModule, TRGCDCModule, TRGCDCT2DDQMModule, TRGCDCT3DConverterModule, TRGCDCT3DDQMModule, TRGCDCT3DUnpackerModule, TRGCDCTSFUnpackerModule, TRGCDCTSFDQMModule, TRGCDCTSStreamModule, TRGECLBGTCHitModule, TRGECLDQMModule, TRGECLFAMModule, TRGECLModule, TRGECLQAMModule, TRGECLRawdataAnalysisModule, 
TRGECLTimingCalModule, TRGECLUnpackerModule, TRGGDLDQMModule, TRGGDLDSTModule, TRGGDLModule, TRGGDLSummaryModule, TRGGRLDQMModule, TRGGRLMatchModule, TRGGRLModule, TRGGRLProjectsModule, TRGGRLUnpackerModule, TRGRAWDATAModule, TRGTOPDQMModule, TRGTOPTRD2TTSConverterModule, TRGTOPUnpackerModule, TRGTOPUnpackerWaveformModule, TRGTOPWaveformPlotterModule, TxModule, TxSocketModule, V0findingPerformanceEvaluationModule, vxdDigitMaskingModule, VXDSimpleClusterizerModule, VXDTFTrainingDataCollectorModule, ZMQTxInputModule, and ZMQTxWorkerModule.

Definition at line 165 of file Module.h.

|

inherited |

Returns true if at least one of the module's conditions evaluates to true.

If no condition or no return value was defined, the method returns false. Otherwise the conditions are evaluated and true is returned if at least one of them is true. To speed up the evaluation, the condition strings are parsed in advance by the method if_value().

Definition at line 96 of file Module.cc.
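
The rule described above can be illustrated with a minimal, self-contained sketch. It is not the code in Module.cc: `Condition` and `evalConditionSketch` are hypothetical names introduced only for this example, and each condition is modelled as a plain predicate on the integer return value.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical stand-in for one condition attached to a module: it maps the
// module's integer return value to true or false.
using Condition = std::function<bool(int)>;

// Sketch of the documented rule: false when there is no condition or no
// return value, otherwise true if at least one condition evaluates to true.
bool evalConditionSketch(const std::vector<Condition>& conditions,
                         bool hasReturnValue, int returnValue)
{
  if (conditions.empty() || !hasReturnValue) return false;
  for (const auto& condition : conditions) {
    if (condition(returnValue)) return true;
  }
  return false;
}
```

With the two predicates `v < 1` and `v >= 5`, the sketch returns true for the return values 0 and 7 and false for 3.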

|

overridevirtual |

Called once for each event.

Prepare input and target for each track and store it.

Reimplemented from Module.

Definition at line 233 of file GRLNeuroTrainerModule.cc.

|

staticinherited |

Exposes methods of the Module class to Python.

Definition at line 325 of file Module.cc.

|

inherited |

What to do after the conditional path is finished.

(defaults to c_End if no condition is set)

Definition at line 133 of file Module.cc.

|

inherited |

|

inlineinherited |

|

inlineinherited |

|

inherited |

Returns the path of the last true condition (if there is at least one; otherwise returns a null pointer).

Definition at line 113 of file Module.cc.

|

inlineinherited |

|

inlinevirtualinherited |

Return a list of output filenames for this module.

This will be called when basf2 is run with "--dry-run" if the module has set either the c_Input or c_Output properties.

If the parameter outputFiles is false (for modules with c_Input) the list of input filenames should be returned (if any). If outputFiles is true (for modules with c_Output) the list of output files should be returned (if any).

If a module has set both properties, this member is called twice, once for each property.

The module should return the actual list of requested input or produced output filenames (including handling of input/output overrides) so that the grid system can handle input/output files correctly.

This function should return the same value when called multiple times. This is especially important when taking the input/output overrides from Environment as they get consumed when obtained so the finalized list of output files should be stored for subsequent calls.

Reimplemented in RootInputModule, RootOutputModule, and StorageRootOutputModule.

Definition at line 133 of file Module.h.
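
The requirement that repeated calls return the same value, even though overrides are consumed when first read, suggests caching the finalized list. The sketch below shows one possible way to do this. `OneShotOverride` and `OutputModuleSketch` are hypothetical classes written for this example; they do not use the real Environment or Module interfaces.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical source of an output override that can be read only once,
// standing in for the "consumed when obtained" behaviour described above.
class OneShotOverride {
public:
  explicit OneShotOverride(std::string value) : m_value(std::move(value)) {}
  // Returns the override on the first call and an empty string afterwards.
  std::string consume()
  {
    std::string result = std::move(m_value);
    m_value.clear();
    return result;
  }
private:
  std::string m_value;
};

// Hypothetical output module that resolves its file list once and caches it.
class OutputModuleSketch {
public:
  OutputModuleSketch(std::string defaultName, OneShotOverride& overrideSource)
    : m_defaultName(std::move(defaultName)), m_overrideSource(overrideSource) {}

  std::vector<std::string> getFileNames(bool outputFiles)
  {
    if (!outputFiles) return {};  // this sketch has no input files
    if (!m_resolved) {
      // Read the override exactly once and remember the finalized list.
      const std::string overridden = m_overrideSource.consume();
      if (overridden.empty()) {
        m_outputFiles.push_back(m_defaultName);
      } else {
        m_outputFiles.push_back(overridden);
      }
      m_resolved = true;
    }
    return m_outputFiles;
  }
private:
  std::string m_defaultName;
  OneShotOverride& m_overrideSource;
  bool m_resolved = false;
  std::vector<std::string> m_outputFiles;
};
```

Calling `getFileNames(true)` twice on the same object yields the same list, although the override itself is empty after the first call.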

|

inlineinherited |

|

inlineoverrideprivatevirtualinherited |

no submodules, return empty list

Implements PathElement.

Definition at line 505 of file Module.h.

|

inlineinherited |

Returns the name of the module.

This can be changed via e.g. set_name() in the steering file to give more useful names if there is more than one module of the same type.

For identifying the type of a module, using getType() (or type() in Python) is recommended.

Definition at line 186 of file Module.h.

|

inlineinherited |

|

inherited |

Returns a python list of all parameters.

Each item in the list consists of the name of the parameter, a string describing its type, a python list of all default values and the description of the parameter.

Definition at line 279 of file Module.cc.

|

inlineinherited |

|

overrideprivatevirtualinherited |

Returns the module name.

Implements PathElement.

Definition at line 192 of file Module.cc.

|

inlineinherited |

Return the return value set by this module.

This value is only meaningful if hasReturnValue() is true.

Definition at line 380 of file Module.h.

|

inherited |

Returns the type of the module (i.e. class name minus 'Module').

|

inlineinherited |

|

inherited |

Returns true if all specified property flags are available in this module.

| propertyFlags | ORed EModulePropFlags which should be compared with the module flags. |
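
A check that all requested flags are set is commonly written as a bitmask comparison. The following sketch uses the flag values from the EModulePropFlags enum of this class; the free function `hasAllProperties` is a hypothetical helper for illustration and is not claimed to match the code in Module.cc.

```cpp
#include <cassert>

// Flag values copied from the EModulePropFlags enum listed for this class.
enum EModulePropFlags : unsigned int {
  c_Input = 1,
  c_Output = 2,
  c_ParallelProcessingCertified = 4,
  c_HistogramManager = 8,
  c_InternalSerializer = 16,
  c_TerminateInAllProcesses = 32,
  c_DontCollectStatistics = 64
};

// Hypothetical helper: true only if every bit in 'requested' is also set in
// 'moduleFlags'.
bool hasAllProperties(unsigned int moduleFlags, unsigned int requested)
{
  return (moduleFlags & requested) == requested;
}
```

For a module with `c_Input | c_ParallelProcessingCertified`, a request for `c_Input` alone succeeds, while a request for `c_Input | c_Output` fails because `c_Output` is missing.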

|

inlineinherited |

|

inherited |

Returns true and prints error message if the module has unset parameters which the user has to set in the steering file.

Definition at line 166 of file Module.cc.

|

inherited |

A simplified version to add a condition to the module.

Please note that successive calls of this function will add more than one condition to the module. If more than one condition results in true, only the last of them will be used.

Please be careful: Avoid creating cyclic paths, e.g. by linking a condition to a path which is processed before the path where this module is located in.

It is equivalent to the if_value() method, using the expression "<1". This method is meant to be used together with the setReturnValue(bool value) method.

| path | Shared pointer to the Path which will be executed if the return value is false. |

| afterConditionPath | What to do after executing 'path'. |

|

inherited |

A simplified version to set the condition of the module.

Please note that successive calls of this function will add more than one condition to the module. If more than one condition results in true, only the last of them will be used.

Please be careful: Avoid creating cyclic paths, e.g. by linking a condition to a path which is processed before the path where this module is located in.

It is equivalent to the if_value() method, using the expression ">=1". This method is meant to be used together with the setReturnValue(bool value) method.

| path | Shared pointer to the Path which will be executed if the return value is true. |

| afterConditionPath | What to do after executing 'path'. |

|

inherited |

Add a condition to the module.

Please note that successive calls of this function will add more than one condition to the module. If more than one condition results in true, only the last of them will be used.

See https://xwiki.desy.de/xwiki/rest/p/a94f2 or ModuleCondition for a description of the syntax.

Please be careful: Avoid creating cyclic paths, e.g. by linking a condition to a path which is processed before the path where this module is located in.

| expression | The expression of the condition. |

| path | Shared pointer to the Path which will be executed if the condition is evaluated to true. |

| afterConditionPath | What to do after executing 'path'. |
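
To illustrate how several conditions interact, the sketch below parses expressions such as "<1" and ">=1" and applies the rule that the last true condition wins. Everything in it is an assumption made for illustration: `ConditionSketch`, `parseCondition`, `holds` and `selectPath` are hypothetical names, the path is represented by a string label instead of a shared pointer, and only four comparison operators without surrounding whitespace are handled. The authoritative syntax is described in ModuleCondition.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical representation of one parsed condition.
struct ConditionSketch {
  std::string op;    // comparison operator, e.g. "<" or ">="
  int value;         // right-hand side of the comparison
  std::string path;  // label standing in for the Path pointer
};

// Splits an expression such as ">=1" into operator and integer value.
ConditionSketch parseCondition(const std::string& expression, const std::string& path)
{
  const std::size_t pos = expression.find_first_of("-0123456789");
  if (pos == std::string::npos || pos == 0) {
    throw std::invalid_argument("bad condition: " + expression);
  }
  return {expression.substr(0, pos), std::stoi(expression.substr(pos)), path};
}

// Evaluates one condition against the module's return value.
bool holds(const ConditionSketch& c, int returnValue)
{
  if (c.op == "<") return returnValue < c.value;
  if (c.op == "<=") return returnValue <= c.value;
  if (c.op == ">") return returnValue > c.value;
  if (c.op == ">=") return returnValue >= c.value;
  throw std::invalid_argument("unsupported operator: " + c.op);
}

// Label of the last condition that holds, or an empty string if none does.
std::string selectPath(const std::vector<ConditionSketch>& conditions, int returnValue)
{
  std::string selected;
  for (const auto& c : conditions) {
    if (holds(c, returnValue)) selected = c.path;
  }
  return selected;
}
```

With the conditions "<1", ">=1" and ">2" added in that order, a return value of 5 satisfies the last two, and the path attached to ">2" is selected because it was added last.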

|

overridevirtual |

Initialize the module.

Initialize the networks and register datastore objects.

Reimplemented from Module.

Definition at line 141 of file GRLNeuroTrainerModule.cc.

| bool loadTraindata | ( | const std::string & | filename, |

| const std::string & | arrayname = "trainSets" ) |

Load saved training samples.

| filename | name of the TFile to read from |

| arrayname | name of the TObjArray holding the training samples in the file |

| void saveTraindata | ( | const std::string & | filename, |

| const std::string & | arrayname = "trainSets" ) |

Save all training samples.

| filename | name of the TFile to write to |

| arrayname | name of the TObjArray holding the training samples in the file |

Definition at line 653 of file GRLNeuroTrainerModule.cc.

|

inherited |

Configure the abort log level.

Definition at line 67 of file Module.cc.

|

inherited |

Configure the debug messaging level.

Definition at line 61 of file Module.cc.

|

protectedinherited |

Sets the description of the module.

| description | A description of the module. |

Definition at line 214 of file Module.cc.

|

inlineinherited |

|

inherited |

Configure the printed log information for the given level.

| logLevel | The log level (one of LogConfig::ELogLevel) |

| logInfo | What kind of info should be printed? ORed combination of LogConfig::ELogInfo flags. |

Definition at line 73 of file Module.cc.

|

inherited |

Configure the log level.

|

inlineinherited |

|

inlineprotectedinherited |

|

privateinherited |

Implements a method for setting boost::python objects.

The method supports the following types: list, dict, int, double, string, bool. The conversion of the python object to the C++ type and the final storage of the parameter value is done in the ModuleParam class.

| name | The unique name of the parameter. |

| pyObj | The object which should be converted and stored as the parameter value. |

Definition at line 234 of file Module.cc.

|

privateinherited |

Implements a method for reading the parameter values from a boost::python dictionary.

The key of the dictionary has to be the name of the parameter and the value has to be of one of the supported parameter types.

| dictionary | The python dictionary from which the parameter values are read. |

Definition at line 249 of file Module.cc.

|

inherited |

Sets the flags for the module properties.

| propertyFlags | bitwise OR of EModulePropFlags |

|

protectedinherited |

Sets the return value for this module as bool.

The bool value is saved as an integer with the convention 1 meaning true and 0 meaning false. The value can be used in the steering file to divide the analysis chain into several paths.

| value | The value of the return value. |
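
The bool-to-integer convention can be sketched as follows. `ReturnValueSketch` is a hypothetical class written for this example; it only mirrors the documented behaviour (1 for true, 0 for false, and a flag recording whether a value was set) and is not the Module implementation.

```cpp
#include <cassert>

// Hypothetical holder for a module return value.
class ReturnValueSketch {
public:
  // Stores an integer return value and marks it as set.
  void setReturnValue(int value)
  {
    m_hasReturnValue = true;
    m_returnValue = value;
  }
  // Stores a bool using the convention 1 = true, 0 = false.
  void setReturnValue(bool value) { setReturnValue(value ? 1 : 0); }

  bool hasReturnValue() const { return m_hasReturnValue; }
  int getReturnValue() const { return m_returnValue; }
private:
  bool m_hasReturnValue = false;
  int m_returnValue = 0;
};
```

Under this convention a condition with the expression ">=1" matches after `setReturnValue(true)`, and a condition with "<1" matches after `setReturnValue(false)`.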

|

protectedinherited |

Sets the return value for this module as integer.

The value can be used in the steering file to divide the analysis chain into several paths.

| value | The value of the return value. |

|

protectedinherited |

Set the module type.

Only for use by internal modules (which don't use the normal REG_MODULE mechanism).

|

overridevirtual |

Do the training for all sectors.

Reimplemented from Module.

Definition at line 492 of file GRLNeuroTrainerModule.cc.

| void train | ( | unsigned | isector | ) |

Train a single MLP.

Definition at line 519 of file GRLNeuroTrainerModule.cc.

|

protected |

Definition at line 146 of file GRLNeuroTrainerModule.h.

|

protected |

Histograms for monitoring.

Definition at line 140 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 147 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 141 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 157 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 152 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 158 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 153 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 160 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 155 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 159 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 154 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 161 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 156 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 151 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 145 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 148 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 142 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 149 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 143 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 150 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 144 of file GRLNeuroTrainerModule.h.

|

protected |

Name of the StoreArray containing the input 2D tracks.

Definition at line 75 of file GRLNeuroTrainerModule.h.

|

protected |

Name of the TObjArray holding the networks.

Definition at line 85 of file GRLNeuroTrainerModule.h.

|

protected |

Training is stopped if the validation error is higher than checkInterval epochs ago, i.e. either the validation error is increasing or the gain is less than the fluctuations.

Definition at line 111 of file GRLNeuroTrainerModule.h.
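
The stopping criterion can be expressed as a comparison between the latest validation error and the one recorded checkInterval epochs earlier. The sketch below is an illustration under stated assumptions, not the code in GRLNeuroTrainerModule.cc: `shouldStopTraining` is a hypothetical function, and it assumes that one validation error is recorded per epoch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// validationErrors[i] is assumed to be the validation error after epoch i.
// Returns true if the latest error is higher than the error recorded
// checkInterval epochs earlier.
bool shouldStopTraining(const std::vector<double>& validationErrors,
                        std::size_t checkInterval)
{
  const std::size_t n = validationErrors.size();
  // Not enough history yet (or the check is disabled): keep training.
  if (checkInterval == 0 || n <= checkInterval) return false;
  return validationErrors[n - 1] > validationErrors[n - 1 - checkInterval];
}
```

For the error history 0.9, 0.5, 0.52, 0.51 and a check interval of 2, the latest error (0.51) is compared with the error two epochs earlier (0.5), so the sketch signals a stop.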

|

privateinherited |

|

privateinherited |

|

protected |

Name of file where network weights etc. are stored after training.

Definition at line 79 of file GRLNeuroTrainerModule.h.

|

protected |

Name of the StoreObj containing the input GRL.

Definition at line 77 of file GRLNeuroTrainerModule.h.

|

protected |

Instance of the NeuroTrigger.

Definition at line 118 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Switch to load saved parameters from a previous run.

Definition at line 91 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Name of file where training log is stored.

Definition at line 83 of file GRLNeuroTrainerModule.h.

|

protected |

Maximal number of training epochs.

Definition at line 113 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Switch to multiply number of samples with number of weights.

Definition at line 99 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Number of test samples.

Definition at line 103 of file GRLNeuroTrainerModule.h.

|

protected |

Number of threads for training.

Definition at line 107 of file GRLNeuroTrainerModule.h.

|

protected |

Maximal number of training samples.

Definition at line 97 of file GRLNeuroTrainerModule.h.

|

protected |

Minimal number of training samples.

Definition at line 95 of file GRLNeuroTrainerModule.h.

|

protected |

Number of validation samples.

Definition at line 101 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Parameters for the NeuroTrigger.

Definition at line 93 of file GRLNeuroTrainerModule.h.

|

privateinherited |

The properties of the module as bitwise or (with |) of EModulePropFlags.

|

protected |

Number of training runs with different random start weights.

Definition at line 115 of file GRLNeuroTrainerModule.h.

|

protected |

If true, save training curve and parameter distribution of training data.

Definition at line 89 of file GRLNeuroTrainerModule.h.

|

protected |

Name of the TObjArray holding the training samples.

Definition at line 87 of file GRLNeuroTrainerModule.h.

|

protected |

Name of file where training samples are stored.

Definition at line 81 of file GRLNeuroTrainerModule.h.

|

protected |

Sets of training data for all sectors.

Definition at line 120 of file GRLNeuroTrainerModule.h.

|

protected |

Name of the StoreArray containing the ECL clusters.

Definition at line 73 of file GRLNeuroTrainerModule.h.

|

privateinherited |

|

protected |

Limit for weights.

Definition at line 105 of file GRLNeuroTrainerModule.h.

|

protected |

Number of CDC sectors.

Definition at line 133 of file GRLNeuroTrainerModule.h.

|

protected |

Number of ECL sectors.

Definition at line 135 of file GRLNeuroTrainerModule.h.

|

protected |

Total number of sectors.

Definition at line 137 of file GRLNeuroTrainerModule.h.

|

protected |

Convert radians to degrees.

Definition at line 130 of file GRLNeuroTrainerModule.h.

|

protected |

BG scale factor for training.

Definition at line 164 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 128 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 125 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 126 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 124 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 127 of file GRLNeuroTrainerModule.h.

|

protected |

Definition at line 123 of file GRLNeuroTrainerModule.h.