7.8.5. Deep Flavor Tagger

Authors: J. Gemmler

The Deep Flavor Tagger is a multivariate tool to estimate the flavor of \(B_{tag}\) mesons, without having to reconstruct the decays explicitly.

Principle

Many B mesons decay in flavor-specific decay modes, which leave a unique signature in their final-state particles. Certain attributes, like fast leptons or slow pions, can be used to infer the flavor of the tag B meson directly.

As opposed to the category-based flavor tagger (see Flavor Tagging Principle), those specific attributes are not incorporated by hand. Instead, a representation of the data is ‘learned’ directly by a deep neural network, which is described in the next section.

The advantage of this method is that the algorithm can potentially exploit a wider range of attributes of the dataset than an approach that only uses explicit knowledge in the form of hand-crafted features.

Furthermore, this method allows a variety of domain adaptation methods, which could be the subject of future studies.

Algorithm

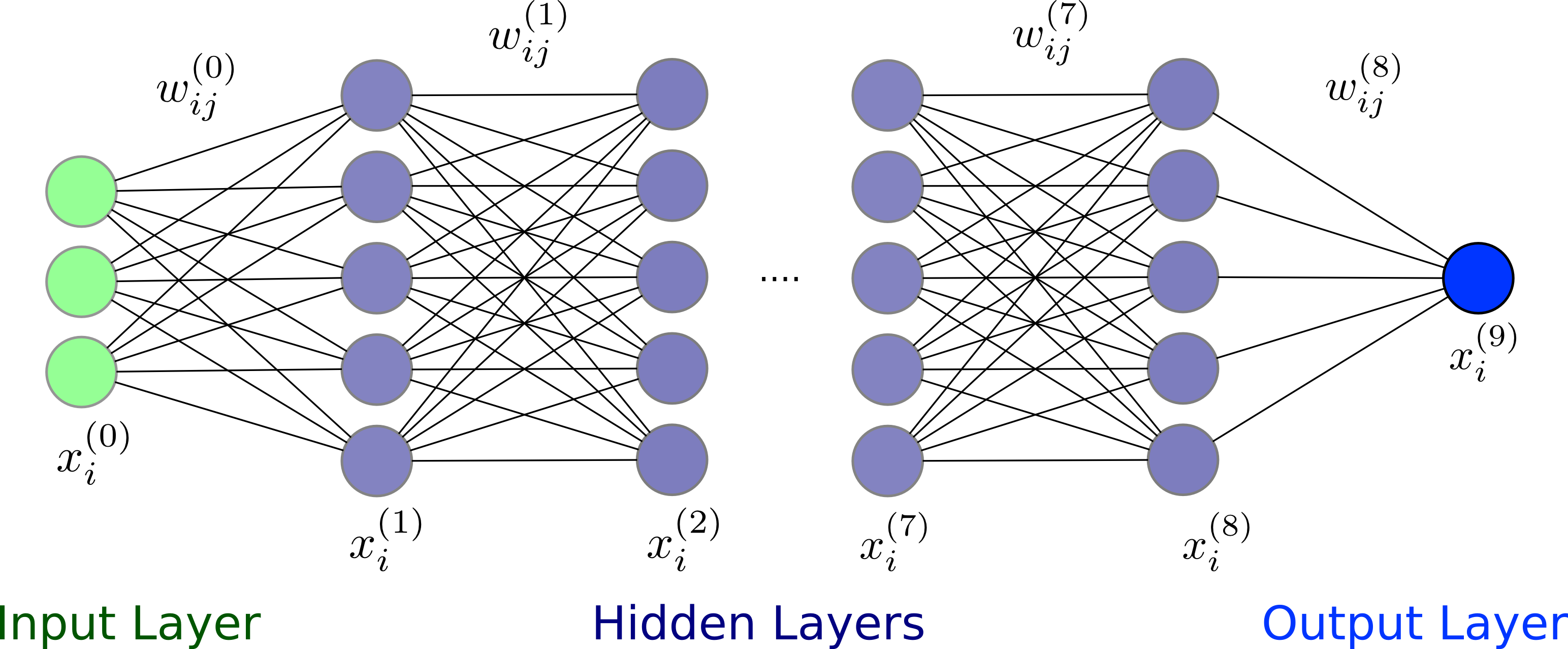

The core of the algorithm is a multilayer perceptron with 8 hidden layers. It is trained with stochastic gradient descent.

It is implemented with the TensorFlow framework, which is interfaced via the MVA package.
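
To make the shape of such a network concrete, here is a minimal pure-Python sketch of a forward pass through a multilayer perceptron with 8 hidden layers. It does not use TensorFlow and is not the basf2 implementation: the layer width, the tanh activations (including on the output node), the random initialisation and the helper names `make_mlp` and `forward` are all assumptions chosen for illustration. Training with stochastic gradient descent is not shown.

```python
import math
import random


def make_mlp(n_inputs, n_hidden_layers=8, width=16, seed=0):
    """Create random weights for n_hidden_layers hidden layers plus one output node."""
    rng = random.Random(seed)
    sizes = [n_inputs] + [width] * n_hidden_layers + [1]
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)  # assumed initialisation scale
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    """Propagate one input vector through all layers, applying tanh after each one."""
    activation = list(x)
    for weights, biases in layers:
        activation = [
            math.tanh(sum(w * a for w, a in zip(row, activation)) + b)
            for row, b in zip(weights, biases)
        ]
    return activation[0]  # single output node, bounded to [-1, 1] by tanh


mlp = make_mlp(n_inputs=10)
output = forward(mlp, [0.1] * 10)
print(len(mlp) - 1, "hidden layers, output =", output)
```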

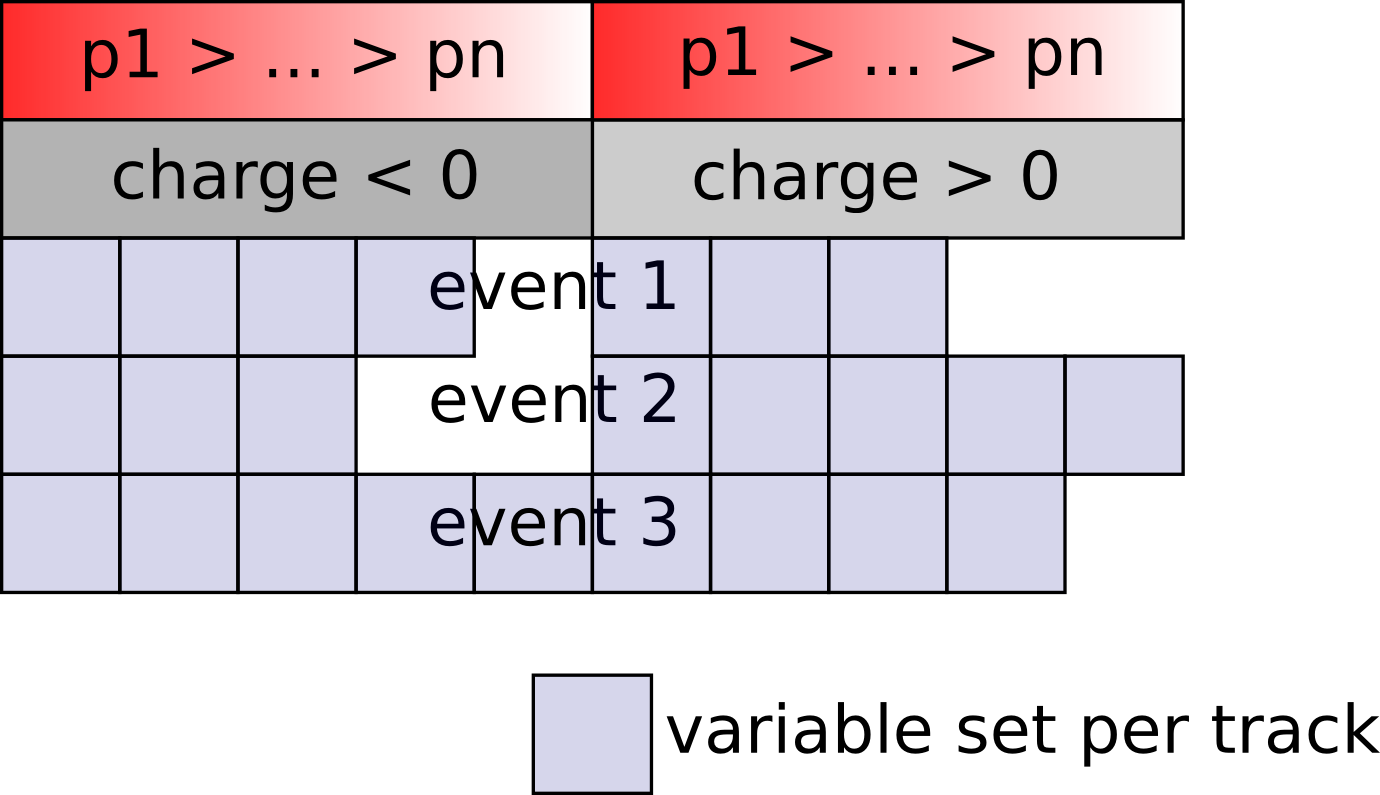

Currently, the input of the algorithm is based on tracks only, but it can be extended to other objects, e.g. clusters, without too many changes. The tracks are grouped by charge and sorted by momentum. If an event has fewer than 5 positively or 5 negatively charged tracks, a specific kind of zero padding is applied. The scheme of the input parameters is shown in the accompanying figure.
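
The grouping and padding can be illustrated with a short pure-Python sketch. It is only a sketch under stated assumptions: the text does not specify the padding scheme, so plain zeros are used here, and the ordering (positive tracks first, highest momentum first), the truncation to 5 tracks per charge, the two example features and the helper name `build_input` are hypothetical.

```python
N_PER_CHARGE = 5  # number of track slots per charge, as stated in the text


def build_input(tracks, features=("p", "cosTheta"), n_per_charge=N_PER_CHARGE):
    """Return a flat, fixed-length feature list: positive tracks first, then negative."""
    vector = []
    for sign in (+1, -1):
        group = [t for t in tracks if t["charge"] == sign]
        # Assumption: highest momentum first, extra tracks beyond the slots are dropped.
        group.sort(key=lambda t: t["p"], reverse=True)
        group = group[:n_per_charge]
        for track in group:
            vector.extend(track[name] for name in features)
        # Assumption: empty slots are filled with plain zeros.
        n_missing = n_per_charge - len(group)
        vector.extend([0.0] * (n_missing * len(features)))
    return vector


tracks = [
    {"charge": +1, "p": 0.4, "cosTheta": 0.1},
    {"charge": +1, "p": 1.9, "cosTheta": -0.3},
    {"charge": -1, "p": 0.7, "cosTheta": 0.8},
]
x = build_input(tracks)
print(len(x))  # 2 charges * 5 slots * 2 features = 20
```

The fixed length is what allows a variable number of tracks per event to be fed into a network with a fixed number of input nodes.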

The output of the algorithm is the variable 'DNN_qrCombined', which corresponds to the tag-side \(B\) flavor \(q_{\rm DNN}\) times the dilution factor \(r_{\rm DNN}\). The range of 'DNN_qrCombined' is \([-1, 1]\). The output is close to \(-1\) if the tag side of an event is likely to be related to a \(\bar{B}^0\), and close to \(1\) for a \(B^0\). The value \(0\) corresponds to a random decision.
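
Since the output is the product of flavor and dilution, both factors can be recovered from a single value: the sign gives the flavor decision and the magnitude gives the dilution. The helper `split_qr` below is a hypothetical name for a minimal sketch of this decomposition.

```python
def split_qr(qr):
    """Split q*r into the flavor q (+1: B0, -1: anti-B0, 0: undecided) and the dilution r."""
    if not -1.0 <= qr <= 1.0:
        raise ValueError("qr must lie in [-1, 1]")
    q = (qr > 0) - (qr < 0)  # sign of qr as an integer
    r = abs(qr)
    return q, r


for value in (0.8, -0.25, 0.0):
    print(value, split_qr(value))
```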

How to use

Using the Deep Flavor Tagger is simple and straightforward.

In your steering file, you first have to import the interface to the Deep Flavor Tagger:

    import modularAnalysis as ma  # provides the ma.* functions used in this section
    from dft.DeepFlavorTagger import DeepFlavorTagger

After reconstructing your signal \(B\) meson, make sure that you build the rest of event:

    ma.buildRestOfEvent('B0:sig', path=path)

To use the Deep Flavor Tagger with basic functionality on Belle II data or MC, use

    DeepFlavorTagger('B0:sig',
                     uniqueIdentifier='FlavorTagger_Belle2_B2nunubarBGx1DNN_1',
                     path=path)

Note

For development purposes, the algorithm can also be used with local .xml weight files, e.g. FlavorTagger_Belle2_B2nunubarBGx1DNN_1.xml

To use on Belle data or MC with b2bii, you need a special identifier and a special set of variables:

    BELLE_IDENTIFIER = 'FlavorTagger_Belle_B2nunubarBGx1DNN_1'

    BELLE_FLAVOR_TAG_VARIABLES = [
        'useCMSFrame(p)',
        'useCMSFrame(cosTheta)',
        'useCMSFrame(phi)',
        'atcPIDBelle(3,2)',
        'eIDBelle',
        'muIDBelle',
        'atcPIDBelle(4,2)',
        'nCDCHits',
        'nSVDHits',
        'dz',
        'dr',
        'chiProb',
    ]

    DeepFlavorTagger(..., uniqueIdentifier=BELLE_IDENTIFIER,
                     variable_list=BELLE_FLAVOR_TAG_VARIABLES)

Studies have shown that a certain kind of bias can be reduced by applying additional rest-of-event cuts. You can do this with the following argument, which you can treat like the cuts in an RoE mask.

    DeepFlavorTagger(..., additional_roe_filter='dr < 2 and abs(dz) < 4')
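
In basf2 the cut string is evaluated by the framework on the rest-of-event particles. To spell out the logic of this particular selection, the following standalone sketch applies the same condition to plain dictionaries. The dictionaries and the helper name `passes_roe_filter` are hypothetical stand-ins and not part of the basf2 interface.

```python
def passes_roe_filter(track, max_dr=2.0, max_abs_dz=4.0):
    """Plain-Python equivalent of the cut string 'dr < 2 and abs(dz) < 4'."""
    return track["dr"] < max_dr and abs(track["dz"]) < max_abs_dz


roe_tracks = [
    {"dr": 0.1, "dz": -0.5},  # passes both requirements
    {"dr": 3.2, "dz": 0.2},   # fails the dr requirement
    {"dr": 0.4, "dz": -6.0},  # fails the |dz| requirement
]
selected = [t for t in roe_tracks if passes_roe_filter(t)]
print(len(selected), "of", len(roe_tracks), "tracks kept")
```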

Finally, do not forget to save the output variable 'DNN_qrCombined' into your ntuples. For MC studies, it is probably also helpful to write out the MC truth flavor of the tag side, 'mcFlavorOfOtherB'.

    ma.variablesToNtuple(decayString='B0:sig',
                         variables=['DNN_qrCombined', 'mcFlavorOfOtherB'],
                         path=path)
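
As an example of the kind of MC study these two variables allow, the sketch below estimates the fraction of wrongly tagged events by comparing the sign of the tagger output with the truth flavor. It works on plain Python lists, so reading the ntuple is not shown. It assumes that the truth flavor is +1 or -1 with the same sign convention as the tagger output and 0 when no truth flavor is available. The helper name `wrong_tag_fraction` and the example numbers are made up for illustration.

```python
def wrong_tag_fraction(qr_values, true_flavors):
    """Fraction of events in which the sign of qr disagrees with the true flavor."""
    n_used = 0
    n_wrong = 0
    for qr, truth in zip(qr_values, true_flavors):
        if truth == 0 or qr == 0:
            continue  # skip events without a truth flavor or without a tag decision
        n_used += 1
        if (qr > 0) != (truth > 0):
            n_wrong += 1
    return n_wrong / n_used if n_used else float("nan")


qr = [0.9, -0.7, 0.2, -0.1, 0.6]   # hypothetical DNN_qrCombined values
mc = [1, -1, -1, -1, 1]            # hypothetical mcFlavorOfOtherB values
w = wrong_tag_fraction(qr, mc)
print("wrong-tag fraction:", w, "average dilution:", 1 - 2 * w)
```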

Functions

- dft.DeepFlavorTagger.DeepFlavorTagger(particle_lists, mode='expert', working_dir='', uniqueIdentifier='standard', variable_list=None, target='qrCombined', overwrite=False, transform_to_probability=False, signal_fraction=-1.0, classifier_args=None, train_valid_fraction=0.92, mva_steering_file='analysis/scripts/dft/tensorflow_dnn_interface.py', maskName='all', path=None)

Interface for the DeepFlavorTagger. This function can be used for training (teacher), preparation of training datasets (sampler) and inference (expert). It requires a reconstructed B meson signal particle list for which a RestOfEvent has been built.

- Parameters:

particle_lists – string or list[string], particle list(s) of the reconstructed signal B meson

mode – string, valid modes are expert (default), teacher, sampler

working_dir – string, working directory for the method

uniqueIdentifier – string, database identifier for the method

variable_list – list[string], name of the basf2 variables used for discrimination

target – string, target variable

overwrite – bool, overwrite already (locally!) existing training

transform_to_probability – bool, enable a purity transformation to compensate potential over-training, can only be set during training

signal_fraction – float, (experimental) signal fraction override, transform to output to a probability if an uneven signal/background fraction is used in the training data, can only be set during training

classifier_args – dictionary, customized arguments for the MLP. Possible attributes of the dictionary are:

  - lr_dec_rate: learning rate decay rate
  - lr_init: learning rate initial value
  - mom_init: momentum initial value
  - min_epochs: minimal number of epochs
  - max_epochs: maximal number of epochs
  - stop_epochs: epochs to stop without improvements on the validation set for early stopping
  - batch_size: batch size
  - seed: random seed for tensorflow
  - layers: [[layer name, activation function, input_width, output_width, init_bias, init_weights],..]
  - wd_coeffs: weight decay coefficients, length of layers
  - cuda_visible_devices: selection of cuda devices
  - tensorboard_dir: additional directory for logging the training process

train_valid_fraction – float, train-valid fraction (.92). If transform_to_probability is enabled, the train-valid fraction will be split into a test set (.5)

maskName – get ROE particles from a specified ROE mask

path – basf2 path object

- Returns:

None
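
To illustrate the structure of the `classifier_args` dictionary described above, the sketch below builds one in plain Python. Every numerical value is a placeholder and not a default of the tool. The activation names, the scalar form of `init_bias` and `init_weights`, the input width of 120 and the helper name `make_layers` are assumptions made for illustration only.

```python
def make_layers(n_inputs, n_hidden=8, width=300, activation="tanh"):
    """Build [[name, activation, input_width, output_width, init_bias, init_weights], ...]."""
    layers = []
    in_width = n_inputs
    for i in range(n_hidden):
        layers.append([f"h{i}", activation, in_width, width, 0.0, 0.01])
        in_width = width  # each layer consumes the output of the previous one
    layers.append(["y", "sigmoid", in_width, 1, 0.0, 0.01])  # single output node
    return layers


layers = make_layers(n_inputs=120)  # hypothetical number of input features
classifier_args = {
    "lr_init": 0.01,       # placeholder values throughout
    "lr_dec_rate": 0.99,
    "mom_init": 0.9,
    "min_epochs": 10,
    "max_epochs": 100,
    "stop_epochs": 5,
    "batch_size": 256,
    "seed": 42,
    "layers": layers,
    "wd_coeffs": [1e-5] * len(layers),  # one coefficient per layer
}
print(len(classifier_args["layers"]), "layers configured")
```

Such a dictionary would be passed as the `classifier_args` argument when calling the function in teacher mode.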